Machine Generated Data

Face analysis

AWS Rekognition

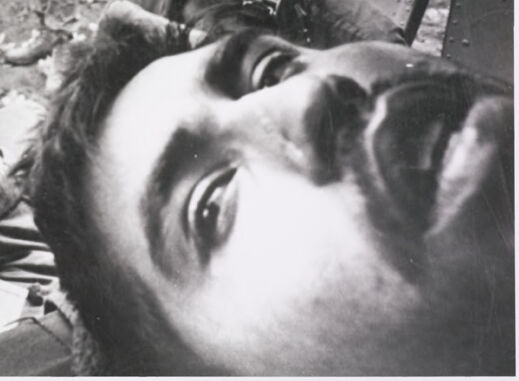

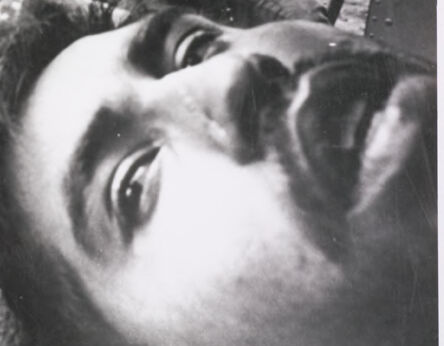

| Attribute | Value |
| --- | --- |
| Age | 22-30 |
| Gender | Male, 99.6% |
| Calm | 57.6% |
| Disgusted | 17.3% |
| Confused | 13.5% |
| Surprised | 7.9% |
| Fear | 6.9% |
| Angry | 4.4% |
| Sad | 2.4% |
| Happy | 1% |

Feature analysis

AWS Rekognition

| Feature | Confidence |
| --- | --- |
| Person | 99.6% |

Categories

Imagga

created on 2022-06-03

| Category | Confidence |
| --- | --- |
| streetview architecture | 43.2% |
| paintings art | 24.2% |
| nature landscape | 12.9% |
| pets animals | 5.2% |
| food drinks | 4.8% |
| macro flowers | 3.5% |
| people portraits | 3.1% |
| beaches seaside | 1.3% |

Captions

Microsoft

Created by unknown on 2022-06-03

| Caption | Confidence |
| --- | --- |
| a group of people standing in front of a crowd | 88.2% |
| a group of people standing in front of a crowd posing for the camera | 85.9% |
| a group of people standing in front of a crowd of people | 85.8% |

Clarifai

No captions written

Salesforce

Created by general-english-image-caption-blip on 2025-05-12

a photograph of a man is being taken from a vehicle

Created by general-english-image-caption-blip-2 on 2025-07-01

a soldier is being treated by a medic in a helicopter

OpenAI GPT

Created by gpt-4o-2024-08-06 on 2025-06-23

The response was filtered due to the prompt triggering Azure OpenAI's content management policy. The content filter results follow.

hate: safe

self-harm: safe

sexual: safe

violence: high

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-11

The image depicts a scene from what appears to be a military or combat situation. It shows several soldiers in the field, some providing medical attention to an injured individual lying on a stretcher. The soldiers are wearing helmets and combat gear, suggesting this is likely a wartime or conflict setting. The image has a black and white, documentary-style aesthetic, conveying a sense of the harsh realities of the situation. While the image contains human subjects, I will refrain from identifying or naming any individuals shown, in accordance with the instructions provided.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-11

This appears to be a black and white photograph from what looks like the Vietnam War era. It shows military personnel tending to someone who appears to be wounded and lying on what seems to be a medical stretcher or evacuation equipment. The image shows soldiers wearing combat helmets and military fatigues. The setting appears to be outdoors in rough terrain. The photograph captures the grim reality of battlefield medicine and casualty evacuation during wartime. The composition creates an intimate perspective of military medical care in the field.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-08

The image depicts a black and white photograph of a man lying on the ground, surrounded by a group of soldiers. The man is positioned in the foreground, with his head tilted back and his eyes closed. He appears to be injured or unconscious.

In the background, several soldiers are gathered around the man, some of whom are wearing helmets and uniforms. They seem to be assessing the situation or providing medical attention. The scene is set against a backdrop of a desolate landscape, with debris and rubble scattered about.

The overall atmosphere of the image suggests that it was taken during a time of conflict or crisis, possibly during the Vietnam War. The presence of soldiers and the injured man implies a sense of urgency and danger. The image conveys a sense of chaos and disorder, with the soldiers and the man in the foreground creating a sense of tension and drama.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-08

This image depicts a black-and-white photograph of soldiers in a war zone, with one soldier lying on the ground and others standing around him. The scene appears to be set in a field or open area, with debris scattered about.

In the foreground, the soldier on the ground is positioned at an angle, with his head tilted upwards and his body partially obscured by the camera's perspective. He wears a helmet and has a serious expression on his face.

Behind him, several other soldiers are visible, some of whom are also wearing helmets. They seem to be engaged in various activities, such as examining equipment or discussing something among themselves. The overall atmosphere of the image suggests a sense of tension and urgency, as if the soldiers are preparing for or responding to a critical situation.

The background of the image is blurry, but it appears to show a desolate landscape with no visible buildings or structures. The sky above is overcast, adding to the somber mood of the scene.

Overall, this image captures a moment of intensity and focus among a group of soldiers in a war-torn environment.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-08

The black-and-white image depicts a scene of urgency and distress, likely in a military or wartime context. In the foreground, a soldier is seen lying on a stretcher, his face showing signs of pain and shock. Another soldier, wearing a helmet, appears to be administering medical aid or performing a procedure on the injured soldier. Behind them, a third soldier stands, also wearing a helmet, and appears to be observing or assisting. The background is blurred, but it seems to depict a chaotic and damaged environment, possibly a battlefield or a makeshift medical facility.

Created by amazon.nova-pro-v1:0 on 2025-06-08

The black-and-white photo depicts a scene of chaos and urgency, possibly taken during a war. A man is lying on the ground, with his face close to the camera, and he appears to be injured. He is wearing a helmet, and his body is covered in dirt and debris. Behind him, a few people are standing, and one of them is holding a gun. In the distance, there are trees and a pile of rubble.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-14

The image is a black and white photograph depicting a scene of medical care during a military operation, likely in Vietnam.

Here's a breakdown:

- Focus: The main focus of the image is the injured soldier in the foreground. His face is visible, showing his eyes wide open as if in pain or shock, reflecting a serious injury. He is lying on a stretcher, likely inside a helicopter or a medical transport vehicle.

- Medical Personnel: Several figures in military uniforms are attending to the injured man. One is focused on the patient, attending to his wound. The other appears to be securing the stretcher.

- Environment: The background suggests a battlefield or a war zone. Other soldiers can be seen.

- Helicopter/Transport: Parts of the helicopter or medical transport vehicle are visible, reinforcing the idea of rapid evacuation or in-field medical care.

The overall tone is somber and highlights the grim reality of war and the immediate need for medical assistance.

Created by gemini-2.0-flash on 2025-06-16

The black and white photograph appears to be a scene from the Vietnam War. The central focus is a close-up of an injured soldier lying on a stretcher or some form of makeshift bed. The man's face is prominent, conveying a sense of vulnerability and pain.

Around the injured man, several other soldiers are tending to him. One soldier is looking down at the injured man, while another is wearing a helmet and focusing intently on providing medical care. In the background, another soldier is standing with his hands on his hips, appearing watchful and concerned.

The environment suggests a war zone. The ground looks rough and uneven, and there are remnants of debris scattered around. The image is marked by a sense of urgency and the harsh realities of war.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-01

The black-and-white photograph depicts a scene that appears to be from a military context, likely during a conflict or war. Here is a detailed summary:

Setting: The image is taken outdoors in what looks like a rugged, possibly forested or jungle area. There is a military helicopter in the background, indicating an evacuation or medical transport scenario.

Individuals:

- Foreground: A man is lying on a stretcher, looking upwards. His expression suggests pain or distress. He is shirtless and appears to be injured.

- Middle Ground: Another individual, possibly a soldier, is bending over the man on the stretcher, seemingly attending to him. This person is wearing a military uniform and a helmet.

- Background: Another soldier is standing near the helicopter, wearing a helmet and uniform. He appears to be observing the scene or waiting.

Helicopter: The helicopter is partially visible, with its door open. This suggests that it is being used for medical evacuation or transport.

Atmosphere: The overall mood of the image is intense and urgent, reflecting the seriousness of the situation. The presence of the helicopter and the injured man on the stretcher indicates a medical emergency in a combat zone.

This image likely captures a moment of medical evacuation during a military operation, highlighting the efforts to provide care and transport for injured personnel.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-06-29

This is a black-and-white photograph depicting a somber wartime scene. Several individuals, dressed in military uniforms and helmets, are gathered around a person lying on the ground. The person on the ground appears to be receiving medical attention, as one of the soldiers is holding what seems to be a bandage or medical supplies. The background features a rocky, barren landscape, and the structure on the right side of the image looks like part of a vehicle or a piece of heavy machinery. The overall mood of the image is serious and suggests an emergency or medical situation during a conflict.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-06-29

This is a black-and-white photograph that captures a poignant and intense moment, likely from a wartime scenario. In the foreground, a soldier is lying down, appearing to be injured, with his face in an expression of pain or shock. He is partially inside what seems to be an aircraft, as indicated by the metal frame and straps visible in the frame. Another soldier is holding the injured man, possibly providing support.

In the background, several other soldiers can be seen. They are wearing helmets and uniforms, suggesting they are part of a military unit. The environment around them appears to be a battlefield or a damaged area, with debris and ruins visible. The overall mood of the image is somber and tense, reflecting the harsh realities of war.