Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 29-45 |
| Gender | Female, 69.8% |
| Confused | 2.8% |
| Happy | 2.2% |
| Angry | 5.3% |
| Sad | 46.9% |
| Calm | 33.7% |
| Disgusted | 6.4% |
| Surprised | 2.7% |

Feature analysis

AWS Rekognition

| Feature | Confidence |
| --- | --- |
| Person | 99.3% |

Categories

Imagga

created on 2019-06-04

| Category | Confidence |
| --- | --- |
| paintings art | 99.8% |

Captions

Microsoft

created by unknown on 2019-06-04

Clarifai

No captions written

Salesforce

Created by general-english-image-caption-blip on 2025-05-21

a photograph of three black and white photographs of three men

Created by general-english-image-caption-blip-2 on 2025-07-06

four portraits of men in suits and ties

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-10

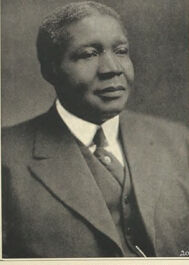

This image features three separate portrait photographs, each framed with a border, positioned side by side on a single piece of paper. Below each photo, there are printed captions identifying the individuals and their accomplishments.

- On the left, the caption reads: "ROBERT R. MOTON, HAMPTON GRADUATE (1890) Principal of Tuskegee Institute."

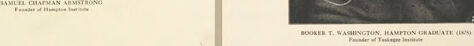

- In the center, the caption reads: "SAMUEL CHAPMAN ARMSTRONG Founder of Hampton Institute."

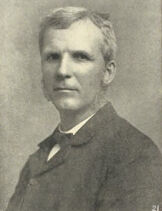

- On the right, the caption reads: "BOOKER T. WASHINGTON, HAMPTON GRADUATE (1875) Founder of Tuskegee Institute."

All figures appear in formal attire, such as suits or jackets, reflecting historical significance. The layout is simple and uniform, emphasizing the achievements and contributions of the individuals featured.

Created by gpt-4o-2024-08-06 on 2025-06-10

The image is a collage featuring three individual portraits, each mounted on a light-colored background. Below each portrait, there is a caption providing information about the individual portrayed.

The left portrait is labeled "Robert R. Moton, Hampton Graduate (1890) / Principal of Tuskegee Institute."

The middle portrait is labeled "Samuel Chapman Armstrong / Founder of Hampton Institute."

The right portrait is labeled "Booker T. Washington, Hampton Graduate (1875) / Founder of Tuskegee Institute."

Each figure is dressed in formal attire, including suits and ties. The background of each portrait is plain, highlighting the formal nature of the photographs.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-14

The image contains three black and white portraits. The first portrait shows a man with a serious expression, wearing a suit. The second portrait shows a man with a stern expression, wearing a suit and tie. The third portrait shows an older man with a serious expression, wearing a suit and tie.

Created by us.anthropic.claude-3-opus-20240229-v1:0 on 2025-06-14

The image shows three black and white portrait photographs of African American men wearing suits. Each photograph is labeled with a name and title underneath. The men appear to be important historical figures, likely from the late 19th or early 20th century based on their clothing style and the aged appearance of the photographs. The portraits are formally posed and the men have serious, dignified expressions. The photographs are arranged in a row on what looks like an old document or book page.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-14

This image shows three formal portrait photographs arranged side by side, appearing to be from a historical document or album. The photographs are black and white and show three men in formal attire from what appears to be the late 19th or early 20th century. Each man is wearing a suit with a tie and is photographed in a traditional portrait style against a plain background. The photos are mounted on light-colored paper or cardboard with text captions beneath them. The middle photograph shows a person identified as Samuel Chapman Armstrong, while the outer photos show Hampton graduates. The style and presentation suggest these are institutional or academic portraits, likely connected to Hampton University's history.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-10

The image presents a collection of three black-and-white photographs, each featuring a different individual, accompanied by their names and titles.

- The first photograph is of Robert R. Moton, a Hampton Graduate (1899), and the founder of Tuskegee Institute.
  - The photograph is in black and white.
  - The individual is wearing a suit and tie.
  - The background of the photograph is a plain backdrop.

- The second photograph is of Samuel Chapman Armstrong, the founder of Hampton Institute.
  - The photograph is in black and white.
  - The individual is wearing a suit and tie.
  - The background of the photograph is a plain backdrop.

- The third photograph is of Booker T. Washington, a Hampton Graduate (1879), and the founder of Tuskegee Institute.
  - The photograph is in black and white.
  - The individual is wearing a suit and tie.
  - The background of the photograph is a plain backdrop.

The image showcases three prominent figures in education and their contributions to the establishment of notable institutions.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-10

This image is a scanned page from a book or document, featuring three black-and-white photographs of men in suits. The page has a beige background with a darker beige border at the top and bottom.

The three photographs are arranged side by side, each with a caption below it. The captions include the name of the individual, their title or position, and the year they graduated from Hampton Institute. The names listed are Robert R. Moton, Samuel Chapman Armstrong, and Booker T. Washington.

The overall atmosphere of the image suggests that it is a historical document or archive, possibly related to the history of Hampton Institute or the lives of these notable individuals.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-09

The image is a black-and-white photograph featuring three portraits of men. Each portrait is printed on a white background and is framed by a thin white border. The portraits are arranged in a row, with the first portrait on the left, the second in the middle, and the third on the right. Each portrait has a small text box at the bottom with the man's name and the year of graduation. The text also mentions their association with Hampton Institute. The men are dressed in suits and ties, with serious expressions on their faces.

Created by amazon.nova-pro-v1:0 on 2025-06-09

The image shows three black-and-white portraits of men. The men are wearing formal attire, including ties, jackets, and shirts. The portraits are arranged in a row, with one portrait on the left, one in the middle, and one on the right. Each portrait has a label with the name of the person and the year they graduated from Hampton Institute. The image has a vintage look, suggesting that it may be an old photograph.

Google Gemini

Created by gemini-2.0-flash on 2025-05-26

The image shows a collage of three black and white portraits displayed on a gray background. Each portrait is printed on a separate sheet of paper and arranged side-by-side.

On the left, the portrait depicts Robert B. Moton, a Hampton graduate and Principal of Tuskegee Institute. He is dressed in a suit and tie.

In the center is a portrait of Samuel Chapman Armstrong, the Founder of Hampton Institute. He is also wearing a suit.

On the right, the portrait is of Booker T. Washington, a Hampton graduate and Founder of Tuskegee Institute. He is dressed in a suit and bow tie, with a vest visible beneath his jacket.

Created by gemini-2.0-flash-lite on 2025-05-26

Here's a description of the image:

The image is a historical black and white photograph featuring three portrait shots of prominent figures. The three portraits are mounted on a larger, neutral-toned background, possibly a page from an album.

- Left Portrait: This portrait is of Robert R. Moton, a Hampton Graduate from 1890 and the Principal of Tuskegee Institute. He is wearing a suit, looking to his right.

- Middle Portrait: This is of Samuel Chapman Armstrong, the Founder of Hampton Institute. He is facing forward, wearing a dark jacket and a small tie.

- Right Portrait: This portrait is of Booker T. Washington, a Hampton Graduate from 1875, and the Founder of Tuskegee Institute. He is wearing a suit and tie, facing the viewer.

The portraits are evenly spaced and have the names and their respective titles written under each one.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-05

The image displays three formal portraits of distinguished individuals associated with Hampton Institute (now Hampton University). Each portrait is accompanied by a caption identifying the person and their affiliation with the institution.

Left Portrait:

- Individual: Robert S. Moton

- Affiliation: Hampton Graduate (1890)

- Position: President of Tuskegee Institute

- Description: The portrait shows a man in a suit and tie, looking to the side. The image is framed and has a classic, formal appearance.

Middle Portrait:

- Individual: Samuel Chapman Armstrong

- Affiliation: Founder of Hampton Institute

- Description: The portrait depicts a man with a beard and mustache, dressed in a suit and tie, looking directly at the camera. The image has a formal and dignified appearance.

Right Portrait:

- Individual: Booker T. Washington

- Affiliation: Hampton Graduate (1875)

- Position: President of Tuskegee Institute

- Description: The portrait shows a man in a suit and bow tie, looking directly at the camera. The image is framed and has a formal and respectful appearance.

The portraits are presented in a classic black-and-white style, highlighting the historical significance of these individuals in the context of education and leadership at historically black colleges and universities.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-11

The image is a black and white photograph featuring three separate portraits of men, each in a formal pose. The portraits are arranged side by side on a beige background, and each is labeled with the name of the individual and their title or association with an educational institution.

First Portrait (Left): The individual is dressed in formal attire, wearing a suit and tie. The text at the bottom reads "ROBERT R. MOTON, HAMPTON GRADUATE (1900)." This indicates that Robert R. Moton is a graduate of Hampton Institute, and the date 1900 suggests the year of his graduation.

Second Portrait (Center): Another individual is also dressed in formal attire, wearing a suit. The text at the bottom reads "SAMUEL CHAPMAN ARMSTRONG, Founder of Hampton Institute." This text identifies the person as Samuel Chapman Armstrong, the founder of Hampton Institute.

Third Portrait (Right): The final portrait features an individual in formal attire as well, wearing a suit and tie. The text at the bottom reads "BOURKE T. WASHINGTON, HAMPTON GRADUATE (1885)." This indicates that Booker T. Washington is also a graduate of Hampton Institute, with the year 1885 marking his graduation.

All three portraits are presented in a respectful and formal manner, common for portraits of notable figures from the late 19th and early 20th centuries. The individuals appear to be influential in the context of Hampton Institute, as their roles and connections to the institution are clearly highlighted in the text below each portrait.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-11

This image displays three black-and-white portrait photographs arranged side by side, each depicting a man dressed in formal attire from a historical period. The portraits appear to be of significant individuals, possibly figures in education or leadership, as suggested by their professional demeanor and the text beneath their images.

Left Photograph:

- The man is dressed in a suit with a tie, looking slightly to the side with a composed expression.

- The text below identifies him as "ROBERT R. MOTON, HAMPTON GRADUATE."

Middle Photograph:

- This man also appears in formal attire, wearing a dark suit and white shirt. His expression is serious as he looks directly at the camera.

- The text identifies him as "SAMUEL CHAPMAN ARMSTRONG."

Right Photograph:

- The man is wearing a dark suit and a bow tie, looking directly at the camera with a serious expression.

- The text below identifies him as "BOOKER T. WASHINGTON, HAMPTON GRADUATE."

The background of the image is beige, and the photographs are set against white frames, giving the image a vintage, historical appearance. The text at the bottom of each photograph provides their names and affiliations, indicating their importance and possibly their roles in education.