Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 22-34 |
| Gender | Male, 95% |
| Calm | 96.6% |
| Sad | 1.3% |
| Confused | 0.5% |
| Angry | 0.4% |
| Disgusted | 0.4% |
| Happy | 0.3% |
| Surprised | 0.3% |
| Fear | 0.2% |

Feature analysis

Amazon

| Label | Confidence |
| --- | --- |
| Person | 99% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 79.7% |
| streetview architecture | 12.9% |
| text visuals | 3.2% |
| interior objects | 1.8% |
| food drinks | 1.5% |

Captions

Microsoft

Created by unknown on 2021-04-03

| Caption | Confidence |
| --- | --- |
| a group of people standing in front of a door | 56.2% |
| a person standing in front of a door | 56.1% |
| a group of people standing in front of a window | 52.2% |

Google Gemini

Created by gemini-2.0-flash on 2025-05-11

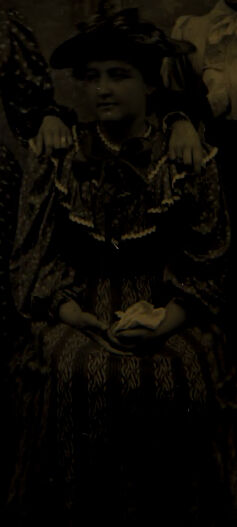

The image appears to be an antique or vintage photograph, possibly a tintype or ambrotype, given its aged and somewhat deteriorated condition. The image is dark, with significant fading and discoloration, which makes it difficult to discern fine details.

There are three figures in the image, who seem to be women or young ladies from the late 19th or early 20th century, judging from their attire. One woman is seated in a chair at the front of the image. She is wearing a dress with a high collar and ornate detailing around the neckline, and she is wearing a hat. She appears to be holding something in her hands. To her left is another woman who stands and wears a long, dark-colored dress or gown with a polka dot print. Standing to the right of the seated woman is a third person. They are wearing a light-colored shirt with a bow tie and a dark-colored skirt or pants, and their head is adorned with a hat.

The background is a painted studio backdrop, which includes architectural elements like a building or castle with turrets and windows and some foliage or trees. This was a common practice in portrait photography of the era.

The edges of the image show the wear and tear of time, including discoloration and small areas of damage, suggesting that this photograph is quite old and has been stored or displayed for many years.

Created by gemini-2.0-flash-lite on 2025-05-11

Overall Impression: This is a vintage photograph, likely from the late 19th or early 20th century, judging by the clothing and overall aesthetic. The photograph appears to be in a somewhat poor condition, with a dark, faded appearance and noticeable damage around the edges.

Subject Matter: The photograph features three women. One is seated in the center, and the other two stand on either side of her. The woman on the left wears a dress with patterned (possibly polka dot) long sleeves and a high neckline. She has her right hand at her side. The seated woman wears a hat and a patterned dress with a ruffled design and lace trim. She has her hands in her lap, holding a small, folded item (possibly a handkerchief). The woman on the right has dark hair and wears a hat, a white blouse with a large bow at the front, and a jacket and skirt combination. She stands beside the seated woman with her right arm around her.

Setting & Background: The background appears to be a studio backdrop. It is indistinct but shows architectural features, possibly a building, along with some decorative elements and possibly some foliage.

Style & Technique: The photograph appears to be in the style of the Victorian or Edwardian eras. The lighting is subdued. The dark tone of the image is likely due to the age of the photograph or the photographic process used, but it obscures some of the details.

Condition: The photograph shows apparent wear. The edges are chipped and damaged, and the image has faded.