Machine Generated Data

Tags

Color Analysis

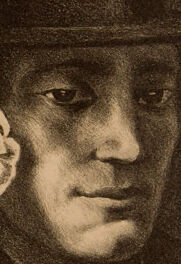

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 24-38 |
| Gender | Male, 94.2% |
| Surprised | 0.1% |
| Disgusted | 0.3% |
| Angry | 0.6% |
| Fear | 0.1% |
| Calm | 91.8% |
| Happy | 0.1% |
| Sad | 6.9% |
| Confused | 0.1% |

Feature analysis

Amazon

| Person | 99.1% | |

Categories

Imagga

| paintings art | 99.9% | |

Captions

Microsoft

created on 2019-10-30

| Caption | Confidence |
| --- | --- |
| an old photo of a person | 80.6% |
| old photo of a person | 78.7% |
| an old photo of a person | 76.2% |

OpenAI GPT

Created by gpt-4 on 2025-01-30

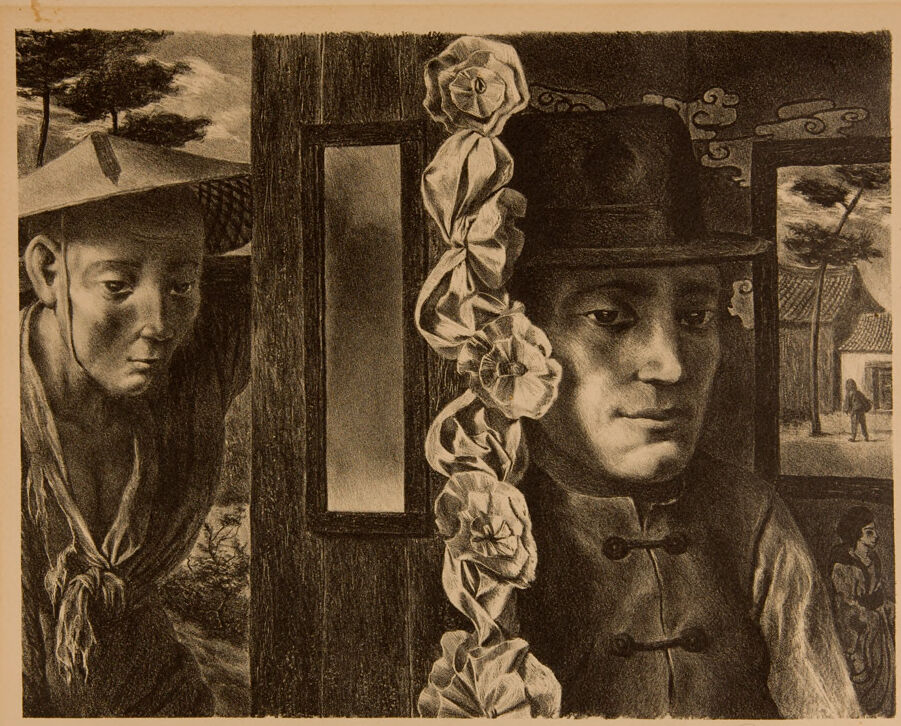

This is a printed image depicting a surreal scene where elements are combined in an unusual way. Two prominent rectangular areas have been obscured. On the left part of the composition, there's a countryside landscape with trees, hills, and a clear sky. Toward the right, a second vignette within the same picture shows a small figure standing near a building with traditional architectural elements, possibly from an earlier historical period. Between these areas, there appears to be a section of a wooden door or panel featuring ornamental flower details. The lower right corner of the artwork has a signature or inscription by the creator. The image has a vintage or aged look with warm tones and visible paper texture around the borders, suggesting it may be an old print or drawing.

Created by gpt-4o-2024-05-13 on 2025-01-30

The image is an intricate and multi-layered artwork featuring various visual elements. There is an ornate vertical floral design running through the center, resembling stylized flowers. The background includes a mix of architectural details and natural elements such as trees, rooftops, and people, creating a depth-filled scene. On one side, there is a detailed depiction of what appears to be traditional clothing, indicating cultural or historical context. The overall composition is rich with textures and carefully etched details, blending indoor and outdoor scenes seamlessly.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2025-01-30

This is a black and white artistic photograph or print that appears to be set in East Asia, likely China. The composition shows two figures separated by an ornate vertical decoration of fabric flowers or ribbons. The scene includes traditional architectural elements visible in the background, with pagoda-style rooflines and detailed woodwork. Traditional Chinese clothing styles are depicted, including a cap on one figure and formal attire on the other. The image has a thoughtful, contemplative quality, enhanced by the sepia-like tones and careful attention to light and shadow. In the background, there appear to be additional figures and buildings, creating depth in the scene. The artistic technique appears to be a form of printmaking or photographic process, with rich tonal gradations.

Created by claude-3-opus-20240229 on 2025-01-30

The image is a charcoal or pencil drawing depicting two figures, likely from Asia based on their facial features and clothing. The figures are shown in a contemplative or pensive pose, with serious expressions on their faces. One figure is looking straight ahead while the other looks slightly downward. The drawing has rich contrasts between light and dark tones, giving it a somber and introspective mood. In the background, there appear to be some architectural elements like a window or doorway. The image is framed with texture that looks like aged or torn paper. Overall, it is an evocative portrait drawing rendered with skill to convey a sense of the inner lives and emotions of the subjects.

Created by claude-3-haiku-20240307 on 2025-01-30

The image appears to be a vintage black and white photograph or illustration depicting two people in a scene. The central figure appears to be a man wearing an ornate headdress or headpiece with floral decorations. He has a serious or pensive expression on his face. In the background, there is another man visible, also with a serious expression. The overall scene has a somber, contemplative mood.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-03-14

The image is a charcoal drawing of two men, one wearing a hat and the other wearing a traditional Chinese garment. The man on the left is wearing a wide-brimmed hat and has a serious expression. He is leaning against a wooden door frame, which is adorned with a white floral garland. The man on the right is wearing a traditional Chinese jacket with black buttons and a high collar. He also has a serious expression. In the background, there are trees and a building with a tiled roof. The overall atmosphere of the image is one of solemnity and contemplation. The use of charcoal creates a sense of depth and texture, adding to the overall mood of the piece. The image appears to be a study of human emotion and expression, with the two men serving as subjects. The artist's use of shading and texture creates a sense of realism, drawing the viewer into the scene. The image is likely meant to evoke a sense of introspection and quiet reflection, inviting the viewer to contemplate the emotions and thoughts of the two men.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-03-14

The image presents a captivating black-and-white drawing, likely created with charcoal or pencil, showcasing a man in traditional Chinese attire. The artwork is characterized by its intricate details and textures, which add depth and dimensionality to the overall composition.

**Key Elements:**

* **Central Figure:** A man dressed in traditional Chinese clothing, complete with a hat and a long-sleeved shirt, takes center stage. His facial expression is contemplative, inviting the viewer to ponder his thoughts and emotions.
* **Floral Motif:** A delicate floral pattern adorns the right side of the image, adding a touch of elegance and sophistication to the overall design.
* **Background:** The background of the image features a subtle yet effective use of shading, creating a sense of depth and dimensionality. The artist's skillful manipulation of light and shadow adds texture and visual interest to the composition.
* **Border:** The image is framed by a beige border, which provides a subtle contrast to the darker tones of the drawing. This border also serves to separate the artwork from the surrounding environment, drawing the viewer's attention to the central image.

**Artistic Style:**

The artistic style of the image is reminiscent of traditional Chinese art, with its emphasis on simplicity, elegance, and attention to detail. The use of charcoal or pencil as the primary medium adds a sense of intimacy and expressiveness to the artwork, while the subtle shading and texture create a sense of depth and dimensionality.

**Overall Impression:**

The image exudes a sense of serenity and contemplation, inviting the viewer to step into the tranquil world of the artwork. The combination of traditional Chinese motifs, elegant lines, and subtle shading creates a visually stunning and thought-provoking piece that rewards close observation and reflection.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-01-30

The image is a black-and-white artwork that depicts a man and a woman. The man is on the right side of the image, and the woman is on the left side. The man is wearing a hat, a shirt, and a jacket. The woman is wearing a hat and a scarf. The man and the woman are looking at each other. In the middle of the image, there is a wooden door with a window.

Created by amazon.nova-lite-v1:0 on 2025-01-30

The image is a black-and-white drawing of two people. The drawing is framed and has a border. The person on the left is wearing a hat and a scarf around his neck. The person on the right is wearing a hat and a long-sleeved shirt with buttons. The drawing also includes a window with a view of a house and a person walking on the street.