Machine Generated Data

Face analysis

AWS Rekognition

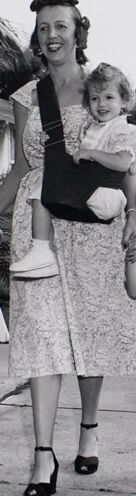

| Attribute | Estimate |
|---|---|
| Age | 1-7 |
| Gender | Female, 100% |
| Happy | 97.9% |
| Calm | 0.7% |
| Confused | 0.5% |
| Sad | 0.3% |
| Angry | 0.2% |
| Surprised | 0.1% |
| Disgusted | 0.1% |
| Fear | 0.1% |

Feature analysis

AWS Rekognition

| Feature | Confidence |
|---|---|
| Person | 99.6% |

Categories

Imagga

Created on 2022-01-08

| Category | Confidence |
|---|---|
| streetview architecture | 86.5% |
| nature landscape | 4.9% |
| people portraits | 3.9% |
| paintings art | 2.6% |
| beaches seaside | 1.1% |

Captions

Microsoft

Created by unknown on 2022-01-08

| Caption | Confidence |
|---|---|
| a little girl posing for a photo | 80.2% |
| a girl posing for a photo | 80.1% |
| a little girl posing for a picture | 80% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-11

photograph of a family standing in front of their house.

Salesforce

Created by general-english-image-caption-blip on 2025-05-16

a photograph of a woman holding a baby and two children

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-15

This black-and-white image depicts a group of four people walking outdoors on a residential sidewalk near a well-manicured lawn and palm trees. The central figure is wearing a patterned dress and heels while holding a child on the left side. The two children on either side appear to be dressed in casual clothing; one wears a skirt and shirt, and the other wears a shirt with a tropical pattern and shorts. The backdrop includes a house with white walls and a neatly trimmed yard. A paved walkway divides the lawn, and tall palm and other trees fill the suburban scene.

Created by gpt-4o-2024-08-06 on 2025-06-15

The image depicts four individuals, presumably a mother with her children, walking on a sidewalk. The adult is wearing a long floral dress and high heels, and she is carrying a child in her arms. The child being carried is wearing light-colored clothing. Next to them, a girl in a dress, socks, and shoes holds the adult's hand, and a boy in a patterned outfit, shorts, and sneakers stands on the other side. The scene is set in a suburban area with neatly trimmed lawns, palm trees, other foliage, and a house visible in the background. The sky is clear, indicating it might be daytime.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-19

The image shows a family standing outside a house in a tropical setting. The family consists of a woman holding a young child, and two other children, a girl and a boy, standing next to them. They appear to be dressed in casual summer clothing. The background includes palm trees and other lush vegetation, suggesting a warm, tropical environment. The overall scene conveys a sense of family togetherness and a relaxed, outdoor setting.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-19

This is a black and white photograph that appears to be from the mid-20th century. The image shows a family group standing on a sidewalk in what looks like a residential area in Florida or another southern state, given the palm trees and architectural style. The people are wearing typical 1950s style clothing - the females are in dresses with full skirts, and they're wearing dress shoes. The setting includes a white house in the background, well-manicured lawn, palm trees, and what appears to be Spanish moss hanging from larger trees in the distance. The composition suggests this might be a casual family photo taken during a special occasion or Sunday outing, given their dressy attire.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-05

The image is a black and white photograph of a woman and three children walking on a sidewalk in front of a house. The woman is wearing a dress with a floral pattern, black heels, and a baby sling with a baby inside. She has her hair styled in a vintage updo.

To the left of the woman is a young girl wearing a white dress with a matching purse and shoes. She has a headband in her hair and is carrying a stuffed animal. To the right of the woman is a boy wearing a Hawaiian shirt, shorts, and shoes. He has his hands in his pockets.

In the background, there are palm trees and other tropical plants. The sky is overcast, and the atmosphere suggests a warm and sunny day. The overall mood of the image is one of happiness and contentment, as the family appears to be enjoying a leisurely stroll together.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-05

The image is a black-and-white photograph of a woman walking down a sidewalk with three children. The woman is wearing a dress and heels, and she has a baby in a carrier on her front. She is holding the hand of a young girl who is wearing a dress and Mary Jane shoes. The girl is carrying a stuffed animal in a bag. To the right of the woman is a young boy wearing shorts and a shirt with a leaf pattern. He is also wearing socks and shoes.

In the background, there are palm trees and houses with lawns. The sky is overcast. The overall atmosphere suggests a warm and sunny day, possibly in the 1950s or 1960s, based on the clothing and hairstyles of the people in the photo.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-03

The black-and-white photo features a woman and her three children, standing on a sidewalk. The woman is carrying a baby on her back. The two girls are standing on the left side of the woman. The boy is standing on the right side of the woman. Behind them is a house with a grassy lawn and bushes. There are trees in the area. The sidewalk is wet.

Created by amazon.nova-pro-v1:0 on 2025-06-03

The black-and-white photo features a woman carrying a baby on her back, accompanied by two other children. They are standing on the sidewalk in front of a house. The woman is smiling and looking at the camera. The baby is looking at the boy on the right. The boy is wearing a shirt with a leaf pattern. The girl on the left is wearing a dress and carrying a bag. Behind them are trees and bushes.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-16

Here's a description of the image:

Overall Impression: This is a classic black and white photograph of a family taking a stroll. It has a nostalgic, vintage feel.

Key Elements:

- The Family: A woman, likely the mother, is the central figure. She is wearing a floral print dress, heels, and a headscarf, which is stylish for the era. She carries a baby in a carrier. Beside her, a young girl is holding her hand. In front, there is a young boy.

- Setting: The scene appears to be a suburban neighborhood. There is a sidewalk, lush green lawns, and what looks like houses in the background. Palm trees are present, suggesting a warmer climate.

- Composition: The family is walking towards the viewer, giving a sense of movement and approachability. The composition is well-balanced, with the subjects spread across the frame.

- Mood/Atmosphere: The photograph has a feel-good, happy vibe. The expressions of the family, and their interaction, suggest a pleasant outing. The black and white format and the clothing suggest a time in the middle of the 20th century.

Created by gemini-2.0-flash on 2025-05-16

Here is a description of the image:

This black and white photograph appears to be from the mid-20th century and depicts a woman walking on a sidewalk, accompanied by three children. The woman, who seems to be the mother, is wearing a dress and heels, and is carrying a baby in a sling on her chest. To her right is a young boy wearing a matching short-sleeved shirt and shorts with a palm tree pattern, and he is holding the woman's hand. To the woman's left is a young girl in a dress with what appears to be a small bag or purse hanging from her shoulder; she is holding the woman's other hand.

The setting appears to be a residential neighborhood with well-manicured lawns and houses visible in the background. Palm trees and other trees are interspersed along the street, suggesting a warm climate. The photograph is composed with a focus on the family, and the background provides context for their location.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-01

The black and white photograph depicts a family scene from what appears to be the mid-20th century. The image shows a woman and three children walking along a sidewalk in a residential area. The woman is dressed in a patterned dress and high heels, and she is carrying a young child in her arms. The child she is holding is wearing a light-colored dress.

To the woman's left is a young girl, also dressed in a light-colored dress, holding what appears to be a small bag or purse. To the woman's right is a young boy wearing a short-sleeved shirt with a palm tree pattern and shorts. The boy is also wearing socks and shoes.

The background features a well-maintained lawn, palm trees, and a single-story house with a neatly landscaped yard. The overall setting suggests a warm, possibly tropical or subtropical climate. The family appears to be posing for the photograph, with the woman and children looking towards the camera. The image conveys a sense of familial warmth and togetherness.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-01

This is a black-and-white photograph of a family group posing outdoors on a sidewalk in what appears to be a suburban neighborhood. The family consists of an adult woman holding a young child, and two young children standing in front of them. The young boy on the right is wearing a short-sleeved shirt and shorts with a tropical or floral pattern. The young girl on the left is wearing a white dress and holding a small object that could be a toy or part of a costume. Another child is being held by the woman, who is smiling and looking towards the camera.

The background features a single-story house with a sloped roof, surrounded by lush greenery including palm trees and other vegetation. The scene suggests a warm, possibly tropical, location. The overall atmosphere of the photo conveys a casual and happy moment, likely captured on a sunny day.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-01

This black-and-white photograph shows a family of four walking along a sidewalk in a suburban neighborhood. A woman, likely the mother, is in the center, holding the hands of two children, a girl on her left and a boy on her right. The girl is wearing a white dress and holding a purse, while the boy is dressed in a light patterned shirt and shorts. The woman is also carrying a baby on her left hip. The background features a house with a palm tree in front, a neatly trimmed lawn, and more trees and houses in the distance. The scene suggests a warm, sunny day in a residential area.