Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 50-58 |
| Gender | Male, 92.7% |
| Happy | 99.6% |
| Calm | 0.1% |
| Surprised | 0.1% |
| Fear | 0.1% |
| Disgusted | 0.1% |
| Angry | 0% |
| Sad | 0% |
| Confused | 0% |

Feature analysis

Amazon

| Feature | Confidence |
|---|---|
| Person | 99% |

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 99.9% |

Captions

Microsoft

Created by unknown on 2022-01-08

| Caption | Confidence |
|---|---|
| a vintage photo of a person | 89% |
| a vintage photo of a person | 86.8% |
| a vintage photo of a man and woman posing for a picture | 63.8% |

Clarifai

Created by general-english-image-caption-blip on 2025-05-16

| Caption | Confidence |
|---|---|
| a photograph of a woman in a wheelchair is sitting in a wheelchair | -100% |

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-16

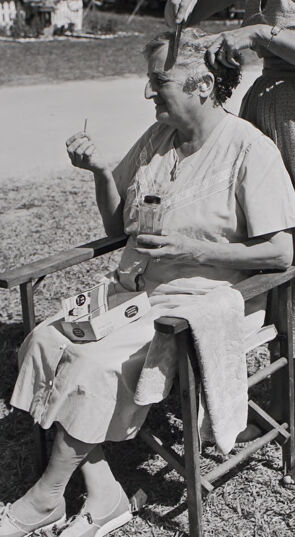

Here's a description of the image:

Overall Scene:

The photograph is a black and white shot capturing a tender moment of care and possibly leisure in an outdoor setting. The composition features two elderly women, likely at a campground or recreational area, judging by the trailers in the background.

Key Elements:

- Woman in Chair: An older woman is seated in a folding chair. She appears relaxed, with a drink in one hand and a small object (possibly a cigarette or a needle) in the other. She's wearing a light-colored dress and comfortable shoes.

- Woman Combing Hair: Another older woman is standing behind the seated woman, carefully combing or arranging her hair. She is wearing glasses and a dress, focused on her task.

- Setting: The background suggests a campsite or recreational area. Trailers are visible in the distance. The ground is grassy, and the overall impression is one of a casual, outdoor environment.

Atmosphere:

The image has a soft, nostalgic feel. The lighting seems natural, and the subjects appear comfortable and at ease with each other. It conveys a sense of shared experience and quiet companionship.

Created by gemini-2.0-flash on 2025-05-16

This black and white photograph features two women in what appears to be a campground or trailer park setting.

In the foreground, a woman is seated in a wooden director's chair, placed on a grassy area. She is wearing a light-colored, knee-length dress with lace details around the neckline and hem. She also wears slip-on sneakers with laces. In her left hand, she holds a long object, possibly a hair clip or toothpick, and in her right hand, she holds a glass bottle. On her lap are a small box and a towel.

Standing behind her is another woman wearing glasses and a knee-length dress with a halter neckline and a full skirt. She is in the act of combing the seated woman's hair. Her hair is styled in a short, curled wave. She wears flat shoes.

The background includes several trailers and mobile homes. A picket fence runs along the edge of the grassy area, separating it from a road. The overall tone of the photograph is relaxed and casual, suggesting a domestic scene amidst a recreational environment.