Machine Generated Data

Tags

Amazon

created on 2022-01-08

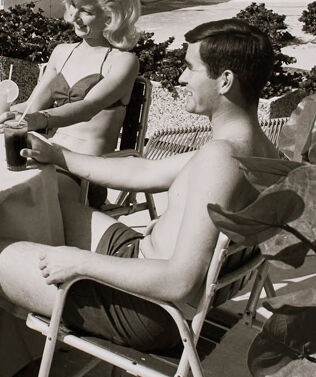

| Tag | Confidence |
|---|---|
| Person | 99.4 |
| Human | 99.4 |
| Person | 99.3 |
| Person | 98.6 |
| Person | 98.4 |
| Chair | 97.9 |
| Furniture | 97.9 |
| Person | 97.5 |
| Person | 96.7 |
| Person | 96.6 |
| Person | 96 |
| Person | 93.2 |
| Person | 91.1 |
| Person | 91 |
| Chair | 87.9 |
| Person | 81.4 |
| Restaurant | 79.4 |
| Meal | 79.2 |
| Food | 79.2 |
| Person | 79 |
| Clothing | 70.4 |
| Apparel | 70.4 |
| Plant | 68.5 |
| People | 67.9 |
| Cafeteria | 66.2 |
| Table | 64.6 |
| Sitting | 63.9 |
| Portrait | 63.1 |
| Face | 63.1 |
| Photography | 63.1 |
| Photo | 63.1 |
| Person | 61.1 |
| Suit | 61 |
| Coat | 61 |
| Overcoat | 61 |
| Crowd | 60.3 |
| Water | 57.1 |

Clarifai

created on 2023-10-25

Imagga

created on 2022-01-08

| Tag | Confidence |
|---|---|
| people | 27.3 |
| salon | 25 |
| person | 24.6 |
| man | 21.5 |
| chair | 20.8 |
| adult | 19.5 |
| motor vehicle | 19.4 |
| sitting | 18.9 |
| golf equipment | 18.4 |
| lifestyle | 18.1 |
| happy | 16.9 |
| women | 16.6 |
| pretty | 14.7 |
| room | 14.6 |
| male | 14.2 |
| lady | 13.8 |
| sports equipment | 13.8 |
| home | 13.6 |
| couple | 13.1 |
| men | 12.9 |
| kin | 12.8 |
| indoor | 12.8 |
| love | 12.6 |
| attractive | 12.6 |
| seat | 12.5 |
| indoors | 12.3 |
| sexy | 12 |
| portrait | 11.6 |
| leisure | 11.6 |
| wheeled vehicle | 11.6 |
| family | 11.6 |
| interior | 11.5 |
| mother | 11.4 |
| table | 10.5 |
| together | 10.5 |
| smiling | 10.1 |
| outdoors | 9.8 |
| equipment | 9.7 |
| vehicle | 9.5 |
| enjoying | 9.5 |
| senior | 9.4 |
| clothing | 9.3 |
| model | 9.3 |
| smile | 9.3 |
| child | 9.1 |
| care | 9 |
| human | 9 |
| fun | 9 |
| reading | 8.6 |
| skin | 8.5 |
| travel | 8.4 |
| cheerful | 8.1 |
| body | 8 |
| furniture | 8 |
| hair | 7.9 |
| work | 7.8 |
| happiness | 7.8 |
| boy | 7.8 |
| face | 7.8 |
| youth | 7.7 |
| elderly | 7.7 |
| outdoor | 7.6 |
| casual | 7.6 |
| relax | 7.6 |
| togetherness | 7.5 |
| enjoy | 7.5 |
| mature | 7.4 |
| alone | 7.3 |
| teenager | 7.3 |
| laptop | 7.3 |
| business | 7.3 |
| group | 7.2 |
| blond | 7.2 |

Google

created on 2022-01-08

| Tag | Confidence |
|---|---|
| Furniture | 93.6 |
| Table | 93.4 |
| Chair | 88.5 |
| Black-and-white | 85 |
| Style | 84.1 |
| Adaptation | 79.2 |
| Snapshot | 74.3 |
| Leisure | 74.1 |
| Event | 73.4 |
| Fun | 72.9 |
| Vintage clothing | 72.8 |
| Monochrome photography | 72.1 |
| Recreation | 71.8 |
| Monochrome | 71.1 |
| T-shirt | 70.3 |
| Suit | 69.5 |
| Art | 67.4 |
| Room | 67.3 |
| Tree | 65.9 |
| Sitting | 64.8 |

Color Analysis

Face analysis

Amazon

Microsoft

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 21-29 |
| Gender | Male, 100% |
| Happy | 96.1% |
| Disgusted | 1.4% |
| Angry | 1.3% |
| Surprised | 0.3% |
| Sad | 0.3% |
| Fear | 0.2% |
| Confused | 0.2% |
| Calm | 0.2% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 0-4 |
| Gender | Male, 93.6% |
| Calm | 47% |
| Happy | 46.2% |
| Confused | 3.8% |
| Angry | 1.5% |
| Sad | 0.7% |
| Disgusted | 0.5% |
| Surprised | 0.2% |
| Fear | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 22-30 |
| Gender | Female, 97.5% |
| Happy | 99.3% |
| Calm | 0.2% |
| Sad | 0.1% |
| Surprised | 0.1% |
| Angry | 0.1% |
| Disgusted | 0.1% |
| Fear | 0.1% |
| Confused | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 29-39 |
| Gender | Male, 93.8% |
| Surprised | 48.6% |
| Calm | 16.3% |
| Happy | 14.8% |
| Confused | 5.7% |
| Disgusted | 4.9% |
| Angry | 4.7% |
| Sad | 2.7% |
| Fear | 2.3% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 19-27 |
| Gender | Female, 99.6% |
| Happy | 96.5% |
| Calm | 3% |
| Sad | 0.1% |
| Disgusted | 0.1% |
| Angry | 0.1% |
| Confused | 0.1% |
| Fear | 0.1% |
| Surprised | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 24-34 |
| Gender | Male, 99.8% |
| Sad | 91.4% |
| Angry | 2.4% |
| Happy | 2.3% |
| Calm | 1.8% |
| Confused | 0.9% |
| Disgusted | 0.7% |
| Fear | 0.3% |
| Surprised | 0.2% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 36-44 |
| Gender | Male, 99% |
| Disgusted | 86.8% |
| Angry | 4.3% |
| Sad | 3.9% |
| Confused | 1.7% |
| Happy | 1.1% |
| Calm | 1.1% |
| Surprised | 0.7% |
| Fear | 0.5% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 26 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very likely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very likely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very likely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 93.6% |
| nature landscape | 3.3% |
| beaches seaside | 1.6% |

Captions

Microsoft

created on 2022-01-08

| Caption | Confidence |
|---|---|
| a group of people sitting in front of a crowd | 79.9% |
| a group of people sitting at a beach | 79.8% |
| a group of people sitting in chairs in front of a crowd | 79.7% |