Machine Generated Data

Tags

Amazon

created on 2022-05-27

| Tag | Confidence (%) |
|---|---|
| Human | 99.6 |
| Furniture | 94.6 |
| Meal | 94.3 |
| Food | 94.3 |
| Outdoors | 80.4 |
| People | 79.7 |
| Clothing | 76.7 |
| Apparel | 76.7 |
| Crowd | 73 |
| Military | 72.3 |
| Leisure Activities | 71 |
| Sitting | 69.8 |
| Picnic | 66.9 |
| Vacation | 66.9 |
| Tree | 65.5 |
| Plant | 65.5 |
| Military Uniform | 64.8 |
| Female | 62.1 |
| Army | 61.8 |
| Armored | 61.8 |
| Water | 59 |
| Troop | 57.9 |
| Musician | 57.2 |
| Musical Instrument | 57.2 |
| Soldier | 55.4 |

Clarifai

created on 2023-10-30

Imagga

created on 2022-05-27

| Tag | Confidence (%) |
|---|---|
| barrow | 25.4 |
| man | 23.5 |
| handcart | 19.5 |
| vehicle | 18.9 |
| shovel | 18.1 |
| male | 17.8 |
| seller | 17.4 |
| old | 17.4 |
| wheeled vehicle | 16.8 |
| person | 15.3 |
| sky | 15.3 |
| people | 15.1 |
| outdoor | 13 |
| outdoors | 12.8 |
| military | 12.5 |
| architecture | 11.7 |
| stone | 11.5 |
| soldier | 10.7 |
| uniform | 10.6 |
| travel | 10.6 |
| building | 10.5 |
| men | 10.3 |
| tool | 10.3 |
| two | 10.2 |
| protection | 10 |
| danger | 10 |
| history | 9.8 |
| hand tool | 9.8 |
| war | 9.6 |
| tree | 9.6 |
| weapon | 9.5 |
| clothing | 9.2 |
| vintage | 9.1 |
| adult | 9.1 |
| transportation | 9 |
| working | 8.8 |
| ancient | 8.6 |
| day | 8.6 |
| statue | 8.4 |
| summer | 8.4 |
| city | 8.3 |
| sport | 8.2 |
| vacation | 8.2 |
| dirty | 8.1 |
| activity | 8.1 |
| country | 7.9 |
| sand | 7.9 |
| rock | 7.8 |
| destruction | 7.8 |
| play | 7.8 |
| mask | 7.7 |
| equipment | 7.6 |
| fun | 7.5 |
| street | 7.4 |
| historic | 7.3 |
| gun | 7.2 |
| recreation | 7.2 |
| game | 7.1 |
| trees | 7.1 |
| conveyance | 7.1 |

Google

created on 2022-05-27

| Tag | Confidence (%) |
|---|---|
| Musical instrument | 88.2 |
| Tree | 85.6 |
| Adaptation | 79.3 |
| Folk instrument | 77.5 |
| Motor vehicle | 75.8 |
| Art | 72.1 |
| Event | 70.1 |
| Vintage clothing | 69.8 |
| String instrument | 67 |
| Musician | 66.8 |
| Sky | 66.2 |
| History | 65.8 |
| Sitting | 64.5 |
| Recreation | 64.4 |
| Chair | 64.2 |
| Crew | 62.8 |
| Stock photography | 62.7 |
| Guitar | 60.7 |
| Monochrome | 57.7 |
| Pole | 56.6 |

Color Analysis

Face analysis


AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 43-51 |
| Gender | Male, 99.7% |
| Happy | 95.1% |
| Surprised | 6.4% |
| Fear | 5.9% |
| Sad | 2.3% |
| Calm | 2.2% |
| Disgusted | 1.1% |
| Confused | 0.4% |
| Angry | 0.3% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 63-73 |
| Gender | Male, 99.9% |
| Calm | 72.6% |
| Angry | 13.5% |
| Surprised | 7.2% |
| Fear | 6.1% |
| Sad | 4.5% |
| Disgusted | 3.5% |
| Happy | 1.5% |
| Confused | 1.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 50-58 |
| Gender | Male, 50.8% |
| Happy | 88.6% |
| Calm | 9.8% |
| Surprised | 6.4% |
| Fear | 5.9% |
| Sad | 2.3% |
| Angry | 0.3% |
| Disgusted | 0.3% |
| Confused | 0.2% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 34-42 |
| Gender | Female, 97.7% |
| Calm | 65.3% |
| Fear | 15.4% |
| Surprised | 10.4% |
| Angry | 3.6% |
| Happy | 3% |
| Sad | 3% |
| Disgusted | 2% |
| Confused | 1.4% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 48-54 |
| Gender | Male, 96.2% |
| Calm | 90.1% |
| Surprised | 6.6% |
| Fear | 6.1% |
| Sad | 4.8% |
| Angry | 1.2% |
| Confused | 0.6% |
| Disgusted | 0.5% |
| Happy | 0.4% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 54-64 |
| Gender | Male, 99.9% |
| Calm | 69.3% |
| Confused | 12.5% |
| Surprised | 7.8% |
| Fear | 6.8% |
| Happy | 4.9% |
| Sad | 4.1% |
| Angry | 2% |
| Disgusted | 1.5% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 36-44 |
| Gender | Female, 100% |
| Happy | 31.8% |
| Calm | 28.1% |
| Angry | 18.2% |
| Disgusted | 11.5% |
| Surprised | 9.9% |
| Fear | 6.3% |
| Sad | 2.5% |
| Confused | 2.3% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 54-64 |
| Gender | Male, 92% |
| Sad | 98.1% |
| Calm | 13.5% |
| Angry | 9.4% |
| Fear | 7.9% |
| Confused | 7.2% |
| Surprised | 6.9% |
| Happy | 5.4% |
| Disgusted | 4.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 36-44 |
| Gender | Female, 100% |
| Calm | 82.8% |
| Surprised | 6.9% |
| Fear | 6.4% |
| Angry | 4.7% |
| Sad | 4.4% |
| Happy | 2.1% |
| Disgusted | 1.5% |
| Confused | 1.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 34-42 |
| Gender | Male, 98.3% |
| Disgusted | 74.8% |
| Sad | 12.2% |
| Surprised | 6.5% |
| Fear | 6.2% |
| Angry | 5.8% |
| Calm | 3.1% |
| Happy | 1.4% |
| Confused | 0.6% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 41-49 |
| Gender | Female, 99.9% |
| Disgusted | 43.5% |
| Calm | 24% |
| Confused | 17.3% |
| Surprised | 8.9% |
| Fear | 6.5% |
| Sad | 4% |
| Happy | 3.2% |
| Angry | 1.5% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 50-58 |
| Gender | Female, 99.9% |
| Sad | 60.5% |
| Happy | 33.1% |
| Calm | 31.3% |
| Surprised | 7.1% |
| Fear | 6.6% |
| Angry | 0.9% |
| Confused | 0.8% |
| Disgusted | 0.7% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 45-51 |
| Gender | Female, 100% |
| Happy | 99.8% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.2% |
| Disgusted | 0% |
| Confused | 0% |
| Calm | 0% |
| Angry | 0% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 37-45 |
| Gender | Female, 99.3% |
| Sad | 51.8% |
| Calm | 44.1% |
| Confused | 14.4% |
| Surprised | 8.1% |
| Fear | 6.9% |
| Happy | 4.2% |
| Angry | 2.2% |
| Disgusted | 2.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 37-45 |
| Gender | Male, 99.9% |
| Surprised | 73.7% |
| Happy | 18.5% |
| Sad | 11.6% |
| Calm | 10.5% |
| Fear | 7.2% |
| Disgusted | 4.4% |
| Angry | 4% |
| Confused | 3.4% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 22-30 |
| Gender | Male, 98.8% |
| Calm | 88.8% |
| Happy | 6.9% |
| Surprised | 6.5% |
| Fear | 5.9% |
| Sad | 2.6% |
| Confused | 1.9% |
| Disgusted | 0.3% |
| Angry | 0.2% |

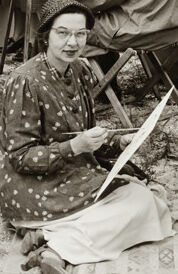

Microsoft Cognitive Services

| Age | 49 |

| Gender | Female |

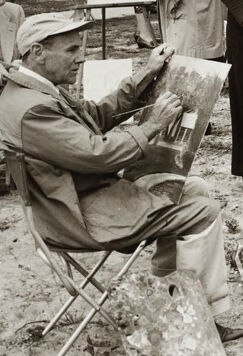

Microsoft Cognitive Services

| Age | 37 |

| Gender | Male |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Unlikely |

| Headwear | Possible |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Unlikely |

| Headwear | Likely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Likely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Possible |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

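Imagga

| Attribute | Value |
|---|---|
| Traits | no traits identified |

Imagga

| Attribute | Value |
|---|---|
| Traits | no traits identified |

Imagga

| Attribute | Value |
|---|---|
| Traits | no traits identified |

Imagga

| Attribute | Value |
|---|---|
| Traits | no traits identified |

Imagga

| Attribute | Value |
|---|---|
| Traits | no traits identified |

Imagga

| Attribute | Value |
|---|---|
| Traits | no traits identified |

Imagga

| Attribute | Value |
|---|---|
| Traits | no traits identified |

Imagga

| Attribute | Value |
|---|---|
| Traits | no traits identified |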

Feature analysis


AWS Rekognition

| Feature | Confidence |
|---|---|
| Person | 99.6% |
| Person | 99.5% |
| Person | 99.5% |
| Person | 99.3% |
| Person | 99.1% |
| Person | 99.1% |
| Person | 98.8% |
| Person | 98.8% |
| Person | 98.8% |
| Person | 98.3% |
| Person | 98.1% |
| Person | 97.6% |
| Person | 86.5% |
| Person | 75.6% |
| Person | 68.1% |
| Person | 43.6% |
| Chair | 94.6% |

Clarifai

| Feature | Confidence |
|---|---|
| Tree | 87% |
| Tree | 83% |
| Tree | 73.1% |
| Tree | 68.5% |
| Tree | 65.9% |
| Tree | 65.2% |
| Tree | 65.1% |
| Tree | 64% |
| Tree | 54.5% |
| Tree | 53.3% |
| Tree | 51.3% |
| Tree | 50.4% |
| Tree | 50.3% |
| Tree | 49.2% |
| Tree | 42.2% |
| Tree | 42.1% |
| Tree | 40.9% |
| Tree | 38.6% |
| Tree | 37.6% |
| Tree | 37.4% |
| Tree | 37.3% |
| Tree | 34.7% |
| Man | 83.9% |
| Man | 78.8% |
| Man | 74.2% |
| Man | 68.9% |
| Man | 64.5% |
| Man | 53.1% |
| Man | 42% |
| Man | 35.2% |
| Clothing | 81.1% |
| Clothing | 80.8% |
| Clothing | 77.9% |
| Clothing | 76.9% |
| Clothing | 75.9% |
| Clothing | 75% |
| Clothing | 71.9% |
| Clothing | 69.3% |
| Clothing | 66.9% |
| Clothing | 61% |
| Clothing | 57% |
| Clothing | 41.4% |
| Footwear | 71.5% |
| Footwear | 67.3% |
| Footwear | 64% |
| Footwear | 62.2% |
| Footwear | 52.7% |
| Footwear | 51.5% |
| Footwear | 50.7% |
| Footwear | 44.9% |
| Footwear | 43.5% |
| Footwear | 41.6% |
| Footwear | 41.3% |
| Footwear | 40.9% |
| Footwear | 38.3% |
| Footwear | 36.7% |
| Footwear | 36.3% |
| Footwear | 36.1% |
| Footwear | 35.4% |
| Footwear | 35.2% |
| Footwear | 33.5% |
| Human face | 55.4% |
| Human face | 45.2% |
| Human face | 44.5% |
| Human face | 44.2% |
| Human face | 42.3% |
| Human face | 40.3% |
| Human face | 39.7% |
| Human face | 39.7% |
| Human face | 36.5% |
| Human head | 36.6% |

Categories

Imagga

created on 2022-05-27

| Category | Confidence |
|---|---|
| streetview architecture | 39.7% |
| paintings art | 30.1% |
| nature landscape | 20.8% |
| beaches seaside | 8.6% |

Captions

Microsoft

created by unknown on 2022-05-27

| Caption | Confidence |
|---|---|
| a group of people sitting posing for the camera | 90.2% |
| a group of people posing for a photo | 88.7% |
| a group of people posing for a picture | 88.6% |

Clarifai

No captions written

Salesforce

Created by general-english-image-caption-blip on 2025-05-12

a photograph of a group of people sitting around a table

Created by general-english-image-caption-blip-2 on 2025-07-01

an old black and white photo of people sitting on the beach

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-16

The image depicts a group of people engaged in painting outdoors, likely participating in a plein air art session. They are positioned near a tranquil waterfront with trees in the background, creating a scenic atmosphere. Several individuals are sitting on chairs or stools, holding canvases and art supplies, while others stand beside easels or converse. The group is dressed in a variety of attire, including hats, jackets, and patterned dresses, suitable for an outdoor setting. Artistic works in progress are visible on easels and boards, showcasing landscapes and sketches. The gathering seems to reflect a communal and creative activity in a serene environmental setting.

Created by gpt-4o-2024-08-06 on 2025-06-16

The image depicts a group of people gathered outdoors by a lake or river, engaging in a painting session. They are equipped with easels, canvases, and painting supplies, with some sitting on chairs and others standing. The background features a line of trees and a few houses, suggesting a serene, natural setting. The artists are dressed in a variety of clothing styles, ranging from casual wear to more formal attire, and they appear focused on their artwork. The scene exudes a sense of community and creativity, with a peaceful atmosphere indicative of a shared artistic pursuit.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-11

The image appears to depict an outdoor painting session or art class. There is a group of people, both men and women, sitting or standing and engaged in various artistic activities such as painting, sketching, and observing. The setting seems to be a park or outdoor area with palm trees and other foliage visible in the background. The people are dressed in clothing styles that suggest this is a historical photograph, likely from the mid-20th century. Overall, the image conveys a sense of a communal artistic endeavor taking place in a pleasant outdoor environment.

Created by us.anthropic.claude-3-opus-20240229-v1:0 on 2025-06-11

The black and white image depicts a group of people, likely artists, gathered outdoors on a beach. They are sitting and standing around, holding various art supplies like easels, paintbrushes, and canvases. Some appear to be actively painting or sketching the beach scene in front of them.

The people are dressed in clothing styles typical of the mid-20th century era, such as dresses, collared shirts, and hats. Palm trees line the beach in the background, giving the impression this may be taking place in a tropical or subtropical location.

Overall, the vintage photograph captures what seems to be an artists' gathering or painting session taking place in a picturesque beachside setting sometime in the early to mid 1900s. The casual, social nature of the group points to it possibly being an art class, club or friends coming together to practice plein air painting outdoors.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-11

This is a vintage black and white photograph showing an outdoor art class or painting group. The scene appears to be set in a tropical or coastal location, with palm trees visible in the background. The group consists of several people, both men and women, sitting on folding chairs and blankets while working at their easels. They're dressed in 1950s-style clothing, with some wearing berets and casual attire appropriate for outdoor painting. Each person has their own painting supplies and canvas, suggesting this is either an informal gathering of artists or perhaps a formal art class meeting outdoors. The setting looks quite casual and relaxed, with the artists spread out on what appears to be sandy or unpaved ground. The composition captures a lovely moment of creative community activity, typical of mid-20th century recreational pursuits.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-02

This image is a black-and-white photograph of a group of people painting outdoors. The group consists of 17 individuals, with 15 standing or sitting in the foreground and two more visible in the background. They are all dressed in casual attire, with some wearing hats and others holding paintbrushes or palettes.

In the center of the image, there is a table with an easel, and several people are gathered around it, engaged in painting. Some are seated on chairs or blankets, while others stand behind their easels. The background of the image features a serene landscape with trees, a body of water, and a few buildings in the distance.

The overall atmosphere of the image suggests a relaxed and creative setting, with the group enjoying a day of outdoor painting together. The image appears to be from an earlier era, possibly the mid-20th century, based on the clothing and hairstyles of the individuals depicted.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-02

The image is a black-and-white photograph of a group of people painting en plein air on the beach. The group consists of approximately 15 individuals, with some sitting and others standing around easels. They are all engaged in painting, with some holding canvases and others holding paintbrushes. The background features a serene beach scene with palm trees, a body of water, and a few houses in the distance. The overall atmosphere suggests a relaxed and creative gathering of artists enjoying the outdoors and each other's company.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-06-01

The black-and-white photo features a group of people sitting and standing on the sand, possibly in a park. Some of them are wearing hats and glasses. They are holding a piece of paper and a paintbrush, and some of them are painting on the canvas. There are trees and a body of water behind them. There is a house and a street light in the distance.

Created by amazon.nova-lite-v1:0 on 2025-06-01

A black-and-white photograph shows a group of people sitting on the ground and standing in an outdoor setting. They are engaged in painting, with some individuals using easels and others holding canvases. The setting appears to be a beach or a park, with trees and a body of water in the background. The people are dressed in casual clothing, and some are wearing hats. The image captures a moment of creativity and relaxation, with the artists focused on their work.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-13

Certainly! Here's a description of the image:

Overall Impression:

The image is a vintage, black-and-white photograph depicting a group of people engaged in outdoor painting. The scene evokes a sense of leisurely artistry and camaraderie.

Setting:

The setting appears to be a park or waterfront area. There's a body of water in the background, possibly a lake or a bay. Trees, including palm trees, are visible, suggesting a warm climate. The ground is sandy, indicating it is near the water.

People:

There is a large group of people, presumably artists, of varying ages. They are all dressed in attire typical of the mid-20th century. Some are seated, others are standing, and all are engaged in painting either at easels or holding their art. Many of them have easels set up and are working on canvases.

Activities:

The central focus is the act of painting. The artists are engrossed in their work, some with brushes in hand, some looking at their subjects, and others at the finished painting.

Mood and Tone:

The photograph has a calm, artistic atmosphere. It portrays a moment of creativity, leisure, and community. The black and white format adds to the vintage feel and creates a timeless appeal.

Created by gemini-2.0-flash on 2025-05-13

Here is a description of the image:

This black and white photograph depicts a group of people engaged in an outdoor painting session, likely an art class or plein air painting group. The setting appears to be a park or waterfront location, suggested by the presence of water, trees, and possibly a dock in the background. The group includes a mix of men and women, all appearing to be adults, possibly senior citizens.

Several individuals are actively painting or drawing, each set up with easels or sketchpads. Some are standing, while others are seated on chairs or the ground. They are focused on their work, suggesting a dedicated artistic atmosphere. Their attire is casual, suitable for an outdoor activity.

The background features a body of water, possibly a lake or a bay, with trees and some structures visible in the distance. The lighting is somewhat diffused, typical of an overcast day, which contributes to the overall serene and focused atmosphere of the scene.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-01

This black-and-white photograph depicts a group of people engaged in an outdoor painting session, likely in a park or a scenic area near a body of water. The group consists of men and women, some seated and others standing, all focused on their artwork. They are using easels, canvases, and various painting supplies. The attire of the individuals suggests that the photo might have been taken in the mid-20th century.

Key details include:

- Setting: The scene is outdoors with trees and a water body in the background. There are also some structures and palm trees visible, indicating a warm climate.

- Participants: The group includes both men and women, all of whom appear to be adults. They are dressed in what seems to be mid-20th-century clothing.

- Activity: Everyone is engaged in painting, with canvases set up on easels. Some are seated on folding chairs, while others stand.

- Equipment: Various painting supplies are visible, including paintbrushes, palettes, and canvases.

- Atmosphere: The atmosphere appears to be relaxed and social, with people enjoying the activity in a communal setting.

Overall, the image captures a moment of artistic expression and community engagement in a serene outdoor environment.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-06-28

This black-and-white photograph depicts a group of people engaged in an outdoor painting activity. Several individuals are seated and standing on a dirt or sandy area by a body of water, with trees and a few buildings visible in the background. Some are seated on folding chairs or directly on the ground, while others are standing. Each person appears to have an easel and a canvas in front of them, suggesting they are participating in a plein air painting session. The attire of the individuals suggests it is a calm, possibly recreational or educational gathering, and the setting appears to be a relaxed, natural environment. The photograph is vintage in style, indicating it may be from an earlier time period.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-06-28

This is a vintage black-and-white photograph that depicts a group of people engaged in an outdoor painting class or workshop. The setting appears to be a sunny, natural environment, possibly near a body of water, as some palm trees and a distant water canal are visible in the background.

The participants are both men and women, dressed in attire typical of the 1940s or 1950s, such as hats, dresses, and suits. Some are sitting on chairs or on the ground, focusing on their individual paintings on easels, while others are standing and possibly observing or assisting. The atmosphere suggests a communal and educational activity, with a mix of concentration and interaction among the participants. The scene captures a moment of artistic engagement and social interaction in a pleasant outdoor setting.

Text analysis