Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 52-60 |
| Gender | Female, 100% |
| Calm | 80.6% |
| Confused | 12.4% |
| Sad | 4% |
| Angry | 1.1% |
| Happy | 0.7% |
| Surprised | 0.6% |
| Disgusted | 0.4% |
| Fear | 0.2% |

Feature analysis

AWS Rekognition

| Label | Confidence |
| --- | --- |
| Person | 99% |

Categories

Imagga

Created on 2022-01-22

| Category | Confidence |
| --- | --- |
| paintings art | 95.5% |
| streetview architecture | 3.9% |

Captions

Microsoft

Created by unknown on 2022-01-22

Clarifai

Created by general-english-image-caption-clip on 2025-07-12

person in a dress by film costumer designer.

Salesforce

Created by general-english-image-caption-blip on 2025-05-23

a photograph of a woman in a dress and hat with a purse

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-16

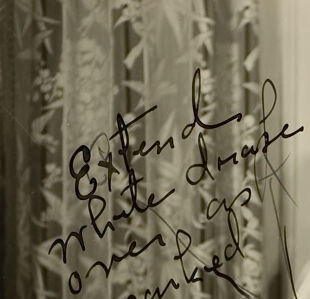

The image showcases a person dressed in 1920s-style fashion. The outfit includes a sleeveless black top paired with a layered, flowing white skirt featuring an asymmetrical hemline. There is a patterned textile or scarf draped off to one side. Accessories include long layered necklaces, bangles on both wrists, and metallic heels. The background contains a decorated interior with patterned curtains and ornamental plants. Handwritten notes on the image suggest instructions for adjustments, such as "Extend white drape over gap."

Created by gpt-4o-2024-08-06 on 2025-06-16

The image depicts a person standing indoors in front of a wall with paneling and a curtain featuring a patterned design. The person is wearing a sleeveless dress with a dark top portion and a lighter sheer skirt that extends to the mid-calf. A shawl or sash with a plaid pattern is draped over one shoulder, and a long necklace is worn around the neck. The person is also accessorized with multiple bracelets on one wrist. The photograph has black marks and annotations written on it, including an instruction to "Extend white drape over top marked." There are several lines extending across the photograph, possibly indicating areas for editing or adjustment. The floor is carpeted, and there appears to be a potted plant partially visible in the image.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-21

The image shows a woman wearing a fashionable outfit from the 1920s era. She is standing in what appears to be a room with curtains or drapes in the background. The woman is dressed in a sleeveless black top, a white skirt, and a patterned scarf or shawl. She is also wearing a headband or hat, and has several pieces of jewelry, including a necklace and bracelets. The image has a vintage, black and white aesthetic, and the woman's expression appears serious or contemplative.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-21

This is a black and white photograph that appears to be from the 1920s, showing someone dressed in typical flapper-era fashion. The subject is wearing a sleeveless black dress with a flowing white skirt, long beaded necklaces, and a close-fitting cloche hat characteristic of the period. They are standing against a wall with decorative curtains or wallpaper visible in the background. The image appears to be autographed with handwritten text in the upper portion. The style and composition suggest this is a professional portrait photograph, and the fashion elements strongly reflect the Art Deco period of the 1920s.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-10

The image is a black and white photograph of a woman dressed in 1920s attire, with handwritten notes on it.

The woman is positioned in the center of the image, facing forward. She wears a dark-colored sleeveless top, a white skirt, and a long white coat draped over her shoulders. Her head is covered by a dark-colored hat adorned with a white band, and she accessorizes with multiple strands of pearls, a plaid shawl tied around her waist, and a beaded purse. Her left hand holds a strand of pearls, and she wears high heels.

The background of the image features a wall with decorative molding and a dark-colored floor. A plant is visible to the left of the woman, and a curtain hangs behind her. The handwritten notes on the image appear to be corrections or annotations, likely made by someone who was editing or restoring the photograph. The notes are written in black ink and include phrases such as "Extend white drap" and "over lap." Overall, the image presents a stylish and elegant portrait of a woman from the 1920s, with a focus on her clothing and accessories.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-10

The image is a black-and-white photograph of a woman in a 1920s-style outfit, with handwritten notes on the photo. The woman is wearing a dark sleeveless top, a white skirt, and a headband. She has several necklaces and bracelets on, and is holding a purse in her left hand.

The background of the photo appears to be a room with white walls and a window with curtains. There are some plants visible on the left side of the photo. The overall atmosphere of the photo suggests that it was taken in the 1920s or early 1930s, based on the woman's clothing and hairstyle.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-06

The image is a black-and-white photograph of a woman standing in a hallway. She is dressed in a flapper-inspired outfit, including a headband, a sleeveless top, a long skirt, and high heels. She is also wearing a necklace and a bracelet. The photograph has a handwritten note on the left side that reads, "Extend your hand, white as snow, and meet me on the corner." There is also a signature in the bottom right corner of the image.

Created by amazon.nova-pro-v1:0 on 2025-06-06

The black and white image shows a woman standing in a hallway. She is wearing a black dress with a white skirt, a black hat, and a necklace. She is also wearing a bracelet and a ring. She is standing in front of a wall with a curtain on the left side. There is a plant on the left side of the curtain. The image has a watermark with the text "Extend the trade while the war is on".

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-17

Certainly! Here's a description of the image:

Overall Impression:

The image is a vintage black and white photograph. It features a woman posing indoors, likely in a studio setting. The style of her clothing and the markings on the photo suggest it's from the early 20th century, possibly the 1920s.

The Woman:

- She is standing and looking directly at the camera.

- She's wearing a flapper-style outfit: a dark top, layered skirt with a plaid accent, and a headpiece.

- She has multiple strands of pearls around her neck, several bracelets on her arm and a small clutch.

- Her expression seems neutral.

Setting and Details:

- She is standing in front of a wall.

- To the left is a partially visible window with lace curtains.

- There are markings on the photo in what appears to be ink, including instructions like "Extend white drape" and a number.

Overall, the image has the feel of a fashion photograph or a portrait from the era.

Created by gemini-2.0-flash on 2025-05-17

Here is a description of the image:

This is a vintage black and white photograph of a woman in what appears to be a 1920s style outfit. She stands facing the camera, positioned slightly off-center in the frame.

She wears a dark, sleeveless top, a white scarf draped over one shoulder, and a white skirt with a scalloped hem. A plaid sash hangs from her waist. She is accessorized with multiple bangles on her arm, a long pearl necklace, and a beaded headwrap. Her shoes are T-strap heels, common for the era.

In the background to the left, there is a leafy plant and a decorative curtain. The right side of the background is a plain wall with a small air vent near the floor. There are handwritten notes on the print, with one set of instructions to "Extend white drape over marked" along with lines pointing to specific areas on the photograph. There is also the number "98" written on the right.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-04

The image is a vintage black-and-white photograph of a woman standing indoors. She is dressed in fashion typical of the 1920s or 1930s, characterized by a flapper-style dress. Here are the details:

Attire:

- The woman is wearing a sleeveless, knee-length dress with a drop waist, which was a popular style during the 1920s.

- The dress has a dark top and a lighter, possibly white, skirt.

- She is accessorized with multiple strands of beaded necklaces and bracelets, which were also fashionable during that era.

- She is wearing a cloche hat, a close-fitting hat popular in the 1920s.

- Her shoes appear to be high-heeled and stylish, matching her outfit.

Pose and Expression:

- The woman is posing with one hand on her hip and the other holding a small clutch or handbag.

- She has a confident and poised stance, looking directly at the camera.

Background:

- The background shows an ornate curtain with a floral pattern, suggesting an elegant indoor setting.

- There is a shadow on the wall behind her, likely from the lighting used to take the photograph.

Annotations:

- There are handwritten notes on the photograph, indicating areas that need retouching or adjustments. The notes mention extending the white drape, adjusting the shadows, and other specifics related to the image's appearance.

The overall impression is of a stylish and sophisticated woman from the early 20th century, captured in a formal portrait setting. The annotations suggest that the photograph might have been intended for publication or display, requiring some editing for perfection.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-14

The image is a vintage black-and-white photograph of a woman standing indoors. She is wearing a stylish outfit typical of the 1920s era, featuring a dark sleeveless top with a plunging neckline, a white plaid skirt, and a white shawl draped over her shoulders. She is accessorized with several long pearl necklaces, bangles, and what appears to be a headband or bandanna. Her pose is relaxed, with one hand in her pocket and the other resting by her side. The background includes a decorative curtain and a white wall with a simple design. The photograph has been inscribed with cursive handwriting on the left side, which is partially visible and appears to contain a message or signature. The overall style and attire suggest a fashionable individual from the roaring 20s.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-14

This is a vintage black-and-white photograph of a woman dressed in an elegant 1920s-style outfit. She is wearing a sleeveless dress with a patterned skirt, accessorized with multiple strands of pearls and several bracelets on her left wrist. She also has a headpiece, which appears to be a cloche hat, and is holding a handkerchief or scarf over her left arm. The background features a curtain and a potted plant, adding to the classic and refined ambiance of the scene. There is some handwritten text on the photograph that reads "Extend photo above marked" and "of."