Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 18-26 |
| Gender | Male, 97.2% |
| Calm | 61.1% |
| Sad | 30.2% |
| Fear | 3.3% |
| Confused | 2.6% |
| Angry | 1.2% |
| Disgusted | 0.8% |
| Surprised | 0.6% |
| Happy | 0.3% |

Feature analysis

Amazon

AWS Rekognition

| Person | 99.2% |

Categories

Imagga

Created on 2022-01-22

| Category | Confidence |
| --- | --- |
| streetview architecture | 90.4% |
| paintings art | 5.9% |
| nature landscape | 2.3% |

Captions

Microsoft

Created by unknown on 2022-01-22

| Caption | Confidence |
| --- | --- |
| a group of people standing outside of a building | 79.9% |
| a group of people standing in front of a building | 78.6% |
| a group of people standing next to a building | 77.9% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-13

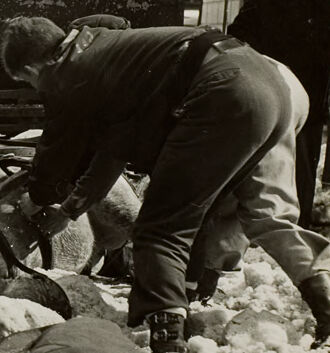

a man and his dog dig out a dumpster from a street.

Salesforce

Created by general-english-image-caption-blip on 2025-05-17

a photograph of a man is standing in the snow with a horse drawn carriage

OpenAI GPT

Created by gpt-4o-2024-08-06 on 2025-06-23

The response was filtered due to the prompt triggering Azure OpenAI's content management policy. The content filter results follow.

hate: safe

self-harm: safe

sexual: safe

violence: high

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-23

The image depicts a snowy urban street scene. Several people are gathered around a fallen horse, which appears to have collapsed in the snow. The people are dressed in winter clothing, suggesting this is a cold weather event. There are buildings and vehicles visible in the background, indicating this is taking place in a city setting. The overall scene conveys a sense of struggle and hardship, with the fallen horse and people working to assist it.

Created by us.anthropic.claude-3-opus-20240229-v1:0 on 2025-06-23

The black and white photograph depicts a snowy street scene in what appears to be an urban area, likely from the early-to-mid 20th century based on the clothing and vehicles. In the foreground, several men are working to attach chains or ropes to a horse that has fallen down in the slushy street in front of a truck or wagon. The men are wearing coats and hats typical of the era as they attempt to help the struggling horse back to its feet. Brick buildings line the street, which is covered in snow and slush from a recent snowfall.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-23

This is a black and white historical photograph showing a distressing scene where a horse has fallen on a snowy city street. Several people are gathered around the fallen horse, which appears to be a working horse attached to what looks like a delivery wagon or cart (visible with its hood up in the background). The setting appears to be in an urban area with brick buildings visible along the street. The image likely dates from the mid-20th century, given the style of clothing and vehicles visible in the background. It captures a moment that was probably common in cities when horses were still widely used for transportation and delivery services, especially during difficult winter conditions when streets became slippery with snow.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-04

The image depicts a scene of a horse lying on the ground, with people gathered around it. The horse is positioned in the center of the image, its body partially covered by a blanket or sheet. It appears to be injured or deceased, as it is not moving and has a noticeable wound on its side.

In the foreground, several individuals are standing or kneeling near the horse. One person is holding the horse's head, while another is attempting to lift its legs. A third person is standing behind the horse, looking down at it with concern. A fourth person is partially visible in the background, standing near a large truck or wagon.

The background of the image shows a city street with buildings and cars. The overall atmosphere of the image suggests that the horse has been involved in an accident or has fallen ill, and the people are trying to help it. The image may have been taken in the early 20th century, based on the clothing and vehicles depicted.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-04

The image depicts a black-and-white photograph of a horse lying on its side in the snow, with several people gathered around it. The horse is wearing a harness and appears to be injured or exhausted. A man is kneeling beside the horse, holding its head, while another man is standing nearby, possibly trying to assist. In the background, there are several buildings, including a storefront with a sign that reads "Woof's." The overall atmosphere of the image suggests that the horse has collapsed due to exhaustion or injury, and the people are trying to help it.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-03

A black-and-white photo shows a horse lying on the street covered in snow. It seems like the horse is dead. There are people around the horse. One man is holding the horse's head, while another man is holding the horse's body. A man is standing behind the horse, looking at it. There is a carriage behind the horse, and a building with many windows is on the side of the street.

Created by amazon.nova-pro-v1:0 on 2025-06-03

A black-and-white photo of a horse lying on the snow, with three men trying to help it stand up. The man on the right is holding the horse's harness, while the man on the left is holding the horse's head. The man in the middle is standing near the carriage. There are two people standing behind the carriage, one of whom is wearing a hat. Behind them are buildings and parked cars.

Google Gemini

Created by gemini-2.0-flash on 2025-05-17

The black and white photo shows what appears to be a horse-drawn carriage accident on a snowy city street. In the foreground, a horse lies collapsed on the ground, entangled in its harness. Several people are gathered around the animal, seemingly attempting to help or assess the situation. One person is kneeling beside the horse's head, while another is working near the carriage.

Behind the group, a dark, enclosed carriage is visible, suggesting it was involved in the incident. Further back, city buildings with multiple stories and fire escapes line the street, providing a backdrop of urban life. A vintage car is also visible parked on the side of the road. The overall tone of the image is somber and concerned, reflecting the distress of the animal and the urgency of the moment.

Created by gemini-2.0-flash-lite on 2025-05-17

Certainly! Here's a description of the image:

Overall Impression:

The image is a vintage black and white photograph, likely taken during the mid-20th century. It depicts a distressing scene of a horse collapsed in the snow-covered street of a city.

Key Elements and Details:

- The Horse: The central subject is a horse lying on its side in the snow. It appears to be struggling, possibly injured or exhausted. Its head is visible, and the harness is still attached.

- The People: Several individuals are gathered around the horse. They appear concerned and are likely trying to assist the animal. Two men are positioned near the horse. One is in the process of helping. Another is standing behind the carriage, looking over them. A woman is also standing nearby and watching the situation.

- The Carriage: A horse-drawn carriage is visible in the background. Its presence indicates the horse was likely pulling it when the incident occurred.

- The Setting: The scene is set on a city street. Buildings line the sides of the road. The presence of snow suggests a winter season.

- Overall Mood: The image conveys a sense of urgency, concern, and perhaps even sadness due to the condition of the horse. It captures a moment of human response to an animal in distress.

Let me know if you'd like any more details!

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-03

The image depicts a scene from a snowy urban street, likely from a bygone era given the style of clothing and the presence of a horse-drawn vehicle. Here's a detailed summary:

Setting: The scene is set in a city street covered in snow. The buildings on either side of the street are multi-story and appear to be commercial establishments. The architecture suggests an older urban area.

People: There are four individuals visible in the image:

- Two men are bent over, attending to a fallen horse. One man is near the horse's head, while the other is closer to its hindquarters.

- A third man is standing near the horse, possibly observing or directing the others.

- A fourth man is standing by the horse-drawn vehicle, seemingly overseeing the situation.

Horse: The horse is lying on its side in the snow, still harnessed to the vehicle. It appears to have collapsed or fallen.

Vehicle: The horse-drawn vehicle is a covered wagon or carriage, likely used for transporting goods or people. The vehicle is positioned on the street, partially blocking it.

Clothing: The men are dressed in heavy winter clothing, including coats, hats, and boots, indicating cold weather.

Activity: The men seem to be trying to assist the fallen horse, possibly attempting to lift it or check on its condition.

Background: The street is lined with buildings, and there are a few parked cars visible, suggesting a mix of old and more modern elements.

The image captures a moment of concern and effort, as the men work to address the situation with the fallen horse amidst a snowy urban environment.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-14

The image is a black-and-white photograph depicting a group of people working on a street in an urban setting. The scene appears to show a snowy or icy environment, with a horse lying on the ground, seemingly injured or distressed. Several individuals are attending to the horse, with one person appearing to be tending to its head while another person is bent over, possibly examining its condition or providing care. The horse is harnessed, suggesting it was previously used for work or transportation. In the background, there are tall buildings, a parked car, and a truck or cart. The overall atmosphere of the photograph suggests a concern for the wellbeing of the animal and the effort to address its situation amidst the urban environment.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-14

This black-and-white photograph captures a somber scene from what appears to be an urban setting during winter. In the foreground, a group of people is gathered around a dead horse lying on a snow-covered street. The horse is wearing a harness, suggesting it may have been used for pulling a carriage or cart.

Several individuals are attending to the horse, with two of them bending over, possibly to assist or examine the animal. Another person is standing nearby, gesturing towards an overturned carriage or cart that the horse might have been pulling. The snow on the ground is heavily trodden, indicating activity in the area despite the harsh weather conditions.

In the background, there are multi-story brick buildings with storefronts and signs, typical of a city street. A car is parked on the side of the road, and the overall atmosphere of the image suggests a historical setting, possibly from the early 20th century. The photograph conveys a sense of hardship and the challenges of urban life during that period.