Machine Generated Data

Tags

Amazon

created on 2022-01-22

| Text | 95.3 |
| Person | 90.8 |
| Human | 90.8 |
| Home Decor | 87.2 |
| Art | 77 |
| Linen | 74.4 |
| Finger | 70.2 |
| Portrait | 58.8 |
| Photography | 58.8 |
| Face | 58.8 |
| Photo | 58.8 |
| Handwriting | 57.5 |
| Painting | 57.2 |

Clarifai

created on 2023-10-27

Imagga

created on 2022-01-22

| portrait | 27.2 |
| sculpture | 25.1 |
| person | 24.1 |
| statue | 22.1 |
| people | 18.4 |
| adult | 18.2 |
| mother | 17 |
| ancient | 16.4 |
| art | 15.7 |
| face | 15.6 |
| bust | 15.3 |
| hair | 15.1 |
| man | 14.1 |
| model | 14 |
| fashion | 13.6 |
| dress | 13.6 |
| decoration | 13.4 |
| old | 13.2 |
| antique | 13.1 |
| male | 13 |
| culture | 12.8 |
| human | 12.8 |
| ruler | 12.5 |
| historic | 11.9 |
| history | 11.6 |
| vintage | 11.6 |
| parent | 11.5 |
| lady | 11.4 |
| world | 11.3 |
| sexy | 11.3 |
| one | 11.2 |
| pretty | 11.2 |
| attractive | 11.2 |
| religion | 10.8 |
| clothing | 10.7 |
| bride | 10.6 |
| black | 10.2 |
| makeup | 10.1 |
| groom | 9.5 |
| love | 9.5 |
| historical | 9.4 |
| smiling | 9.4 |
| happiness | 9.4 |
| religious | 9.4 |
| elegance | 9.2 |
| costume | 9.2 |
| currency | 9 |
| marble | 8.7 |
| cute | 8.6 |
| money | 8.5 |
| monument | 8.4 |
| head | 8.4 |
| traditional | 8.3 |
| design | 8.3 |
| wedding | 8.3 |
| cash | 8.2 |
| cheerful | 8.1 |
| posing | 8 |
| close | 8 |
| eyes | 7.8 |
| skin | 7.7 |
| plastic art | 7.6 |
| stone | 7.6 |
| tourism | 7.4 |
| closeup | 7.4 |
| grandma | 7.3 |
| business | 7.3 |
| sensuality | 7.3 |
| family | 7.1 |
| women | 7.1 |
| princess | 7 |

Google

created on 2022-01-22

| Face | 98.3 |
| Chin | 96.7 |
| Eyebrow | 93.7 |
| Jaw | 88.2 |
| Art | 79.8 |
| Vintage clothing | 76.3 |
| Beauty | 75 |
| Chest | 73.9 |
| Painting | 70.5 |
| Beard | 68.8 |
| Self-portrait | 66.2 |
| Visual arts | 65.6 |
| Room | 63.7 |
| Necklace | 62.7 |
| History | 62.6 |
| Sitting | 61.9 |
| Classic | 61.8 |
| Portrait | 59.5 |
| Retro style | 58.7 |
| Fur | 54.5 |

Face analysis

AWS Rekognition

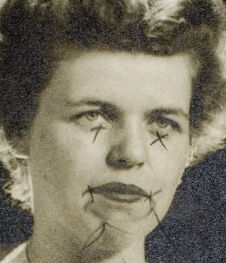

| Age | 30-40 |
| Gender | Female, 99.8% |
| Calm | 91.6% |
| Confused | 4.9% |
| Sad | 2% |
| Surprised | 0.4% |
| Happy | 0.4% |
| Disgusted | 0.3% |
| Angry | 0.2% |
| Fear | 0.2% |

Microsoft Cognitive Services

| Age | 27 |
| Gender | Female |

Google Vision

| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Amazon

Person

| Person | 90.8% |

Categories

Imagga

| paintings art | 83.2% |
| people portraits | 16.3% |

Captions

Microsoft

created on 2022-01-22

| an old photo of a woman | 90.3% |
| a woman posing for a photo | 89.7% |
| a person posing for the camera | 89.6% |

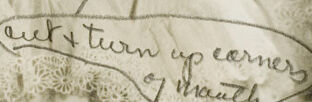

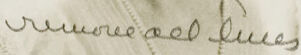

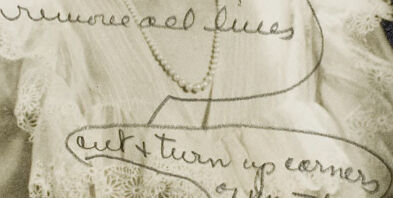

Text analysis

Amazon

turn

up

of

out

of mouth

out x turn up corners

mouth

corners

remove

x

remove ael lues

ael

lues

reoree@el lue

utitun

up earner

reoree@el

lue

utitun

up

earner