Machine Generated Data

Tags

Amazon

created on 2022-01-21

Clarifai

created on 2023-10-26

Imagga

created on 2022-01-21

| Tag | Confidence |
|---|---|
| film | 35.4 |
| blackboard | 33.4 |
| x-ray film | 30.2 |
| photographic paper | 22.9 |
| television | 18.8 |
| home | 18.3 |
| equipment | 16.1 |
| photographic equipment | 15.5 |
| newspaper | 15.1 |
| computer | 14.7 |
| man | 14.1 |
| monitor | 13.6 |
| case | 13.6 |
| business | 13.3 |
| technology | 13.3 |
| interior | 13.3 |
| people | 12.8 |
| laptop | 12.7 |
| house | 12.5 |
| telecommunication system | 12 |
| person | 11.9 |
| indoor | 11.9 |
| indoors | 11.4 |
| black | 11.4 |
| room | 11.3 |
| looking | 11.2 |
| adult | 11 |
| office | 10.4 |
| daily | 10.2 |
| product | 10.1 |
| negative | 9.9 |
| screen | 9.4 |
| building | 9.2 |
| working | 8.8 |
| table | 8.8 |
| happy | 8.8 |
| lifestyle | 8.7 |
| work | 8.6 |
| happiness | 8.6 |
| living | 8.5 |
| male | 8.5 |
| design | 8.4 |
| modern | 8.4 |
| old | 8.4 |
| fireplace | 8.3 |
| digital | 8.1 |
| hospital | 8 |
| decor | 7.9 |
| businessman | 7.9 |
| creation | 7.9 |
| portrait | 7.8 |
| class | 7.7 |
| attractive | 7.7 |
| casual | 7.6 |
| relaxation | 7.5 |
| keyboard | 7.5 |
| one | 7.5 |
| retro | 7.4 |
| object | 7.3 |
| open | 7.2 |
| glass | 7.2 |
| furniture | 7.1 |
| face | 7.1 |
| medicine | 7 |

Google

created on 2022-01-21

| Tag | Confidence |
|---|---|
| Picture frame | 83.6 |
| Black-and-white | 83 |
| Art | 81.1 |
| Font | 76.2 |
| Monochrome photography | 75.9 |
| Painting | 74.9 |
| Monochrome | 72.3 |
| Rectangle | 71.3 |
| Room | 69.1 |
| Visual arts | 68.3 |
| Event | 64 |
| Stock photography | 63.4 |
| Display device | 58.4 |
| Photographic paper | 58.1 |
| Collection | 54.2 |
| Still life photography | 54.1 |
| Illustration | 53.8 |
| History | 51.9 |
| Modern art | 51.1 |

Microsoft

created on 2022-01-21

| Tag | Confidence |
|---|---|
| text | 99.2 |
| clothing | 98.2 |
| person | 97.1 |
| man | 91.3 |
| television | 90 |
| indoor | 88.6 |
| screen | 73.8 |
| black and white | 67.1 |
| flat | 36.2 |
| picture frame | 7.7 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

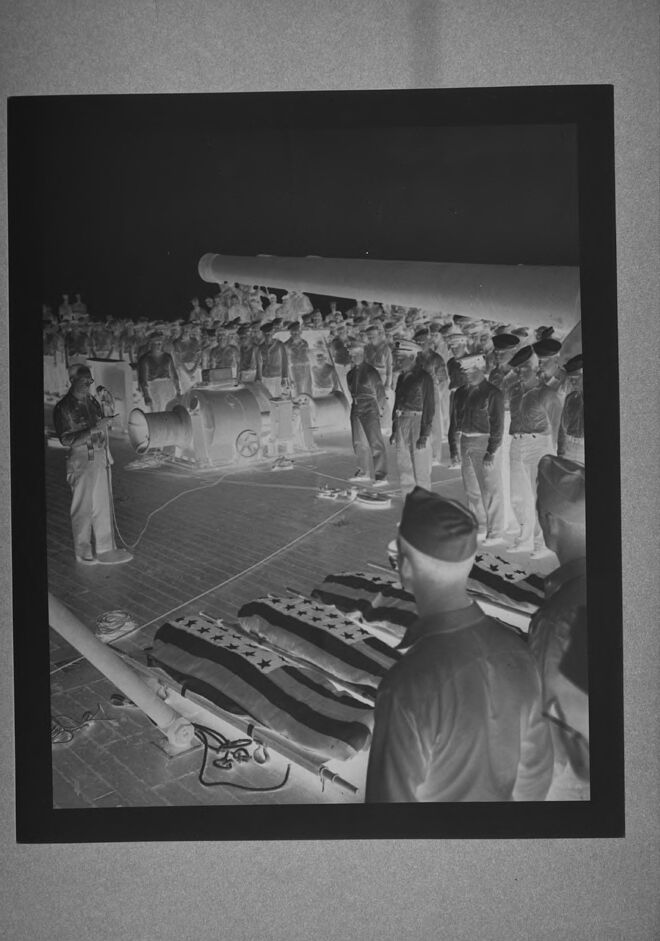

| Attribute | Value |
|---|---|
| Age | 23-31 |
| Gender | Female, 83.5% |
| Calm | 98% |
| Sad | 1.3% |
| Disgusted | 0.3% |
| Angry | 0.2% |
| Happy | 0.1% |
| Fear | 0% |
| Surprised | 0% |
| Confused | 0% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 25-35 |
| Gender | Male, 89.5% |
| Calm | 94.1% |
| Sad | 2.8% |
| Happy | 1.2% |
| Surprised | 1% |
| Angry | 0.3% |
| Confused | 0.2% |
| Disgusted | 0.2% |
| Fear | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 26-36 |
| Gender | Male, 98.8% |
| Calm | 60.6% |
| Sad | 14% |
| Happy | 10.4% |
| Angry | 7.8% |
| Confused | 2.8% |
| Disgusted | 2.2% |
| Surprised | 1.2% |
| Fear | 1% |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Likely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 72.9% |
| interior objects | 19.4% |
| streetview architecture | 6.9% |

Captions

Microsoft

created on 2022-01-21

| Caption | Confidence |
|---|---|
| a person standing in front of a flat screen television | 63.8% |
| a person standing in front of a flat screen tv | 63.7% |
| a person standing in front of a flat screen television on the wall | 59.8% |

Text analysis

Amazon

3

A-KODA

HAGON-YT3RA2-MAMTZA3
