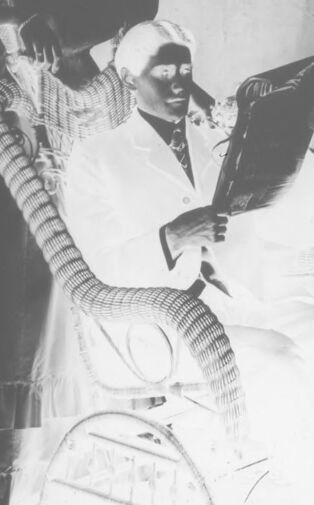

Machine Generated Data

Tags

Amazon

created on 2019-06-01

| Tag | Confidence (%) |
| --- | --- |
| Apparel | 98.5 |
| Clothing | 98.5 |
| Human | 96.7 |
| Person | 93.6 |
| Art | 83.2 |
| Face | 82.5 |
| Female | 81.6 |
| Drawing | 79.2 |
| Person | 77.5 |
| People | 76.5 |
| Dress | 75.1 |
| Woman | 67.5 |
| Photography | 66.8 |
| Photo | 66.8 |
| Text | 66.1 |
| Portrait | 65.7 |
| Performer | 63 |
| Girl | 62.4 |
| Painting | 62.3 |
| Fashion | 60.7 |
| Gown | 59.9 |
| Coat | 58.2 |
| Overcoat | 58.2 |
| Suit | 58.2 |
| Advertisement | 57 |
| Robe | 56.9 |
| Sketch | 56.6 |

Clarifai

created on 2019-06-01

Imagga

created on 2019-06-01

| Tag | Confidence (%) |
| --- | --- |
| negative | 56.5 |
| film | 46.8 |
| sketch | 36.3 |
| photographic paper | 33.2 |
| drawing | 30.8 |
| photographic equipment | 22.1 |
| representation | 18.9 |
| black | 18.7 |
| person | 15.9 |
| people | 15.6 |
| dress | 15.4 |
| art | 14.8 |
| old | 14.6 |
| grunge | 14.5 |
| man | 14.2 |
| attractive | 14 |
| vintage | 13.2 |
| adult | 13 |
| sexy | 12.8 |
| silhouette | 12.4 |
| portrait | 12.3 |
| retro | 12.3 |
| fashion | 12.1 |
| body | 12 |
| clothing | 11.6 |
| bride | 11.6 |
| posing | 11.5 |
| ancient | 11.2 |
| model | 10.9 |
| male | 10.6 |
| elegance | 10.1 |
| symbol | 9.4 |
| cute | 9.3 |
| face | 9.2 |
| sensuality | 9.1 |
| style | 8.9 |
| hair | 8.7 |
| book jacket | 8.7 |
| design | 8.4 |
| window | 8.4 |
| pretty | 8.4 |
| figure | 8.3 |
| sport | 8.2 |
| gorgeous | 8.2 |
| religion | 8.1 |
| love | 7.9 |
| couple | 7.8 |
| sculpture | 7.8 |
| antique | 7.8 |
| men | 7.7 |
| statue | 7.6 |
| power | 7.6 |
| decoration | 7.1 |
| architecture | 7 |
| modern | 7 |
| jacket | 7 |

Google

created on 2019-06-01

| Tag | Confidence (%) |
| --- | --- |
| Photograph | 97.2 |
| Snapshot | 84.5 |
| Stock photography | 76.4 |
| Victorian fashion | 69.3 |
| Photography | 62.4 |
| Black-and-white | 56.4 |
| Vintage clothing | 50.7 |

Microsoft

created on 2019-06-01

| Tag | Confidence (%) |
| --- | --- |
| text | 99.5 |
| book | 94.2 |
| sketch | 93.7 |
| drawing | 91.4 |
| black and white | 80.9 |
| cartoon | 78.6 |
| wedding dress | 52.1 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-43 |
| Gender | Female, 77.1% |
| Angry | 7% |
| Happy | 52.6% |
| Calm | 17.7% |
| Surprised | 11.1% |
| Sad | 6.9% |
| Confused | 2.7% |
| Disgusted | 2.1% |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 100% |

Captions

Microsoft

created on 2019-06-01

| Caption | Confidence |
| --- | --- |
| a close up of a book | 43.2% |
| close up of a book | 37.7% |
| a person with a book | 37.6% |