Machine Generated Data

Tags

Amazon

created on 2019-06-01

Clarifai

created on 2019-06-01

Imagga

created on 2019-06-01

| Tag | Confidence |
| --- | --- |
| blackboard | 38.3 |
| negative | 35.9 |
| film | 34.3 |
| grunge | 29.8 |
| old | 25.1 |
| vintage | 24.8 |
| fountain | 23.2 |
| art | 23 |
| texture | 22.2 |
| retro | 22.1 |
| photographic paper | 20.6 |
| tray | 20.4 |
| structure | 20.4 |
| frame | 19.2 |
| pattern | 19.1 |
| antique | 19 |
| paint | 18.1 |
| design | 18 |
| decoration | 16.8 |
| receptacle | 16.5 |
| grungy | 15.2 |
| container | 14.6 |
| aged | 14.5 |
| border | 14.5 |
| dirty | 14.5 |
| photographic equipment | 13.7 |
| material | 13.4 |
| weathered | 13.3 |
| graphic | 13.1 |
| rough | 12.8 |
| black | 12.6 |
| surface | 12.3 |
| textured | 12.3 |
| space | 11.6 |
| text | 11.3 |
| architecture | 10.9 |
| detailed | 10.6 |
| edge | 10.6 |
| color | 10.6 |
| wall | 10.4 |
| paper | 10.3 |
| collage | 9.6 |
| water | 9.3 |
| drawing | 9.3 |
| screen | 9.3 |
| decorative | 9.2 |
| sketch | 9.1 |
| digital | 8.9 |
| noisy | 8.9 |
| detail | 8.8 |
| computer | 8.8 |
| noise | 8.8 |
| stamp | 8.7 |
| messy | 8.7 |
| rust | 8.7 |
| dirt | 8.6 |
| splash | 8.6 |
| card | 8.5 |
| sculpture | 8.5 |
| letter | 8.2 |
| backgrounds | 8.1 |
| close | 8 |
| postmark | 7.9 |
| scratches | 7.9 |
| scratch | 7.8 |
| ancient | 7.8 |
| ornament | 7.8 |
| cold | 7.7 |
| flower | 7.7 |
| floral | 7.7 |
|  | 7.7 |
| damaged | 7.6 |
| glass | 7.4 |
| brown | 7.4 |
| cash | 7.3 |
| effect | 7.3 |
| global | 7.3 |
| currency | 7.2 |
| religion | 7.2 |
| cool | 7.1 |

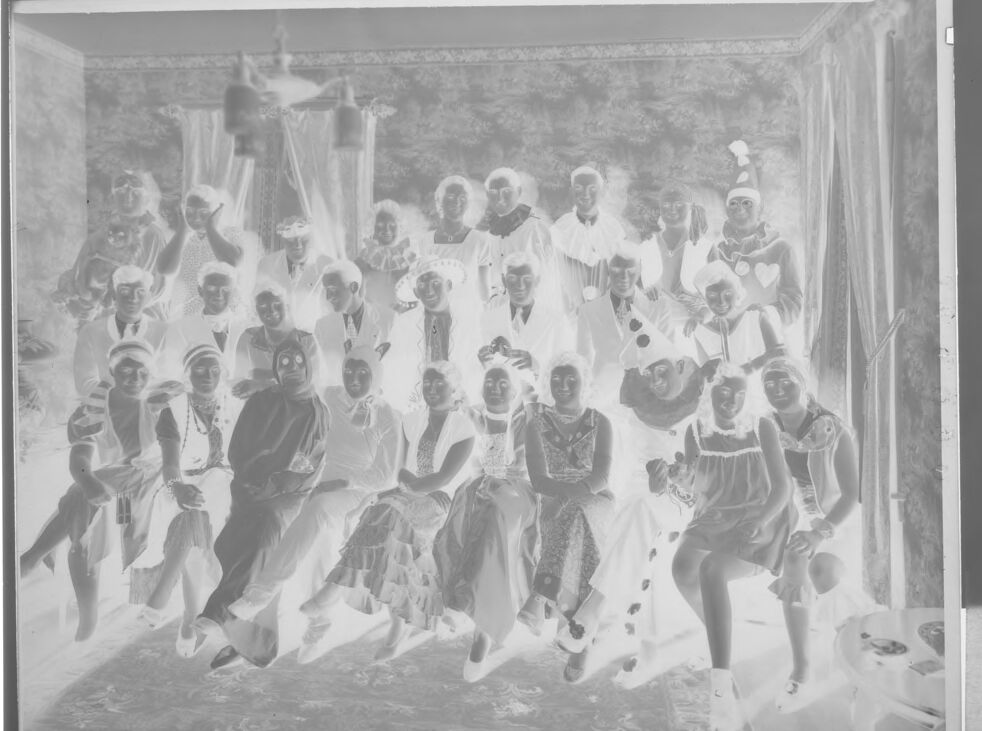

Google

created on 2019-06-01

| Tag | Confidence |
| --- | --- |
| Photograph | 96 |
| Snapshot | 82.5 |
| Picture frame | 73.8 |
| Stock photography | 66.6 |
| Art | 62.5 |
| Photography | 62.4 |
| Team | 59.4 |
| Painting | 58.3 |
| Black-and-white | 56.4 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 23-38 |
| Gender | Male, 52.8% |
| Sad | 46.5% |
| Angry | 45.5% |
| Surprised | 45.3% |
| Calm | 49.5% |
| Disgusted | 45.1% |
| Happy | 47.8% |
| Confused | 45.4% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 20-38 |
| Gender | Female, 53.7% |
| Happy | 45.4% |
| Calm | 46% |
| Angry | 46.4% |
| Confused | 45.9% |
| Surprised | 45.7% |
| Sad | 47.6% |
| Disgusted | 48.1% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-43 |
| Gender | Female, 50.1% |
| Angry | 45.2% |
| Disgusted | 45.1% |
| Happy | 46.5% |
| Sad | 52.3% |
| Surprised | 45.2% |
| Calm | 45.5% |
| Confused | 45.2% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 23-38 |
| Gender | Female, 51.3% |
| Disgusted | 45.2% |
| Confused | 45.6% |
| Sad | 45.9% |
| Happy | 47% |
| Angry | 45.7% |
| Surprised | 45.9% |
| Calm | 49.6% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-43 |
| Gender | Female, 52.5% |
| Angry | 45.5% |
| Sad | 46.4% |
| Disgusted | 45.5% |
| Happy | 47.7% |
| Calm | 48.5% |
| Surprised | 45.7% |
| Confused | 45.8% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 19-36 |
| Gender | Male, 51% |
| Happy | 45.7% |
| Sad | 48.2% |
| Confused | 45.6% |
| Angry | 45.4% |
| Disgusted | 45.6% |
| Surprised | 45.4% |
| Calm | 49.2% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-43 |
| Gender | Female, 54.9% |
| Disgusted | 45.5% |
| Sad | 46.7% |
| Confused | 45.7% |
| Happy | 46.6% |
| Angry | 45.4% |
| Surprised | 45.6% |
| Calm | 49.5% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 23-38 |
| Gender | Female, 53.9% |
| Happy | 47.3% |
| Sad | 47.3% |
| Surprised | 46% |
| Confused | 46.1% |
| Disgusted | 46.1% |
| Calm | 46.5% |
| Angry | 45.7% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 20-38 |
| Gender | Male, 52.8% |
| Happy | 47.7% |
| Surprised | 45.4% |
| Calm | 49.9% |
| Sad | 45.9% |
| Disgusted | 45.3% |
| Angry | 45.3% |
| Confused | 45.4% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-43 |
| Gender | Female, 50.5% |
| Surprised | 45.4% |
| Sad | 46.1% |
| Happy | 47.6% |
| Angry | 45.3% |
| Disgusted | 45.7% |
| Confused | 45.3% |
| Calm | 49.5% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-43 |
| Gender | Male, 51.2% |
| Sad | 46.2% |
| Calm | 50.5% |
| Surprised | 45.5% |
| Angry | 45.4% |
| Disgusted | 45.4% |
| Happy | 46.5% |
| Confused | 45.5% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-43 |
| Gender | Female, 55% |
| Angry | 45.2% |
| Disgusted | 45.3% |
| Happy | 47.5% |
| Sad | 49.2% |
| Surprised | 45.2% |
| Calm | 47% |
| Confused | 45.6% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 17-27 |
| Gender | Female, 50.6% |
| Angry | 45.8% |
| Surprised | 45.8% |
| Calm | 45.5% |
| Sad | 48.1% |
| Confused | 45.6% |
| Disgusted | 46.4% |
| Happy | 47.7% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 20-38 |
| Gender | Male, 50.7% |
| Confused | 45.5% |
| Angry | 45.6% |
| Sad | 48.6% |
| Calm | 48.2% |
| Disgusted | 45.4% |
| Happy | 46.1% |
| Surprised | 45.5% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 16-27 |
| Gender | Female, 50.2% |
| Disgusted | 45.4% |
| Sad | 49.5% |
| Calm | 47.8% |
| Confused | 45.3% |
| Surprised | 45.3% |
| Happy | 46.4% |
| Angry | 45.4% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 15-25 |
| Gender | Female, 54.6% |
| Disgusted | 46.7% |
| Confused | 45.6% |
| Sad | 47.9% |
| Happy | 47% |
| Angry | 45.9% |
| Surprised | 45.6% |
| Calm | 46.2% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 35-52 |
| Gender | Female, 51.2% |
| Sad | 50.4% |
| Angry | 46.7% |
| Surprised | 45.9% |
| Calm | 45.5% |
| Disgusted | 45.3% |
| Happy | 45.4% |
| Confused | 45.9% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-43 |
| Gender | Female, 51.8% |
| Disgusted | 45.3% |
| Sad | 49.5% |
| Confused | 45.2% |
| Happy | 45.3% |
| Angry | 45.3% |
| Surprised | 45.2% |
| Calm | 49.3% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-43 |
| Gender | Female, 50.5% |
| Happy | 50.2% |
| Calm | 46% |
| Angry | 46.2% |
| Surprised | 45.5% |
| Disgusted | 45.8% |
| Confused | 45.5% |
| Sad | 45.8% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-43 |
| Gender | Female, 51.9% |
| Sad | 50.2% |
| Angry | 45.4% |
| Disgusted | 45.5% |
| Surprised | 45.2% |
| Happy | 45.4% |
| Calm | 48.2% |
| Confused | 45.2% |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 98.5% |

Captions

Microsoft

created on 2019-06-01

| Caption | Confidence |
| --- | --- |
| a group of people posing for a photo | 90.8% |
| an old photo of a group of people posing for the camera | 86.7% |
| a group of people posing for a picture | 86.6% |