Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 23-31 |
| Gender | Male, 99.8% |
| Calm | 87.9% |
| Sad | 7.2% |
| Confused | 1.3% |
| Angry | 1% |
| Happy | 1% |
| Fear | 0.6% |
| Disgusted | 0.5% |
| Surprised | 0.4% |

Feature analysis

Amazon

AWS Rekognition

| Feature | Confidence |
| --- | --- |
| Person | 99.5% |

Categories

Imagga

created on 2022-01-23

| Category | Confidence |
| --- | --- |
| paintings art | 99.4% |

Captions

Microsoft

created by unknown on 2022-01-23

| Caption | Confidence |
| --- | --- |
| a group of people posing for a photo | 89.1% |
| a group of people posing for the camera | 89% |
| a group of people posing for a picture | 88.9% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-12

photograph of the band, by photographer.

Salesforce

Created by general-english-image-caption-blip on 2025-05-17

a photograph of a group of men in white uniforms standing in front of a fence

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-17

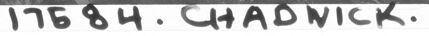

This is a black-and-white negative image showing five individuals standing in front of a large wooden boat. They appear to be dressed in work clothing, including overalls, indicating they might be laborers or fishermen. The ground beneath them is uneven, possibly dirt or rocks, suggesting an outdoor setting. At the bottom of the image, "17584. Chadwick" is handwritten, possibly identifying the scene or the photographer.

Created by gpt-4o-2024-08-06 on 2025-06-17

The image is a black-and-white negative of five individuals posing in front of a large wooden boat. They are dressed in work attire, including overalls, and stand closely together. The background features the curved side of the boat and some ropes or tarps. The ground appears textured, likely indicating sand or dirt. The handwritten note at the bottom reads "17584 CHADWICK."

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-17

The image shows a group of five individuals standing together in what appears to be a mountainous outdoor setting. They are all wearing white clothing, including hats or caps. The individuals are standing on a rocky surface with mountains visible in the background. The image has a black and white, historical appearance.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-17

This is a black and white historical photograph showing five workers standing in a row. They are all wearing light-colored work clothes, including overalls or coveralls, and caps. The workers appear to be industrial or maritime laborers, given their attire. The image has a number "17584" written at the top and "17584. CHADWICK." written at the bottom, suggesting this may be from some kind of archival collection or documentation. The background shows what appears to be a curved or stepped surface, possibly part of a ship or industrial structure. The photo has the grainy, high-contrast quality typical of early to mid-20th century photography.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-10

The image is a black-and-white photograph of five men standing in front of a boat, with the men wearing overalls and hats. The background is a large boat, and the overall atmosphere suggests a work-related setting.

- Five men are standing in front of a boat:
  - The men are standing in a line, with their arms around each other's shoulders.
  - They are all wearing overalls and hats, which suggests that they may be workers or laborers.
  - The men appear to be in their mid-to-late 20s or 30s, based on their facial features and body language.
- The men are wearing overalls and hats:
  - The overalls are light-colored and appear to be made of a durable material, such as denim or canvas.
  - The hats are also light-colored and appear to be made of a similar material to the overalls.
  - The men's clothing suggests that they may be working outdoors in a physical environment.
- The background is a large boat:
  - The boat is large and appears to be made of wood.
  - It has a flat bottom and a curved hull, which suggests that it may be a cargo ship or a fishing vessel.
  - The boat is positioned behind the men, creating a sense of depth and perspective in the image.

Overall, the image suggests that the men are workers or laborers who are involved in some kind of maritime industry. The fact that they are standing in front of a large boat and wearing overalls and hats suggests that they may be engaged in physical labor or maintenance work.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-10

This image is a black-and-white photograph of five men standing together, with a caption at the bottom that reads "17584. CHADWICK." The men are dressed in white overalls and caps, with one man wearing a short-sleeved shirt underneath his overalls. They appear to be standing on a rocky surface, possibly a beach or a construction site.

The background of the image is dark and indistinct, but it appears to be a body of water or a sky. The overall atmosphere of the image suggests that it was taken during the daytime, possibly in the early 20th century.

The image has a faded quality to it, with some areas appearing more washed out than others. This could be due to the age of the photograph or the way it was developed. Despite this, the image still conveys a sense of camaraderie and friendship among the five men, who are all smiling and looking directly at the camera.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-05-31

The black-and-white image shows five men standing close to each other, probably posing for a photo. They are wearing white overalls, and three of them are wearing hats. The man on the left is wearing a hat with a brim, while the man on the right is wearing a cap. They are standing on what seems to be a rocky surface. Behind them is a structure that looks like a boat.

Created by amazon.nova-lite-v1:0 on 2025-05-31

The image is a black-and-white photograph featuring five men standing together in a row. They are all dressed in white overalls, hats, and shoes, suggesting a uniform work attire. The men are positioned in front of a large structure, possibly a building or a ship, which has a flat, textured surface covered in snow or ice. The background is somewhat blurry, with some details of the structure visible, indicating that the focus of the photograph is on the men. The photograph has a watermark with the number "17584" in the top left corner and the name "Chadwick" in the bottom right corner.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-18

Here's a description of the image:

The image appears to be a negative print, so the colors are inverted. It features five men standing close together in what seems to be an outdoor setting. All the men are wearing overalls and caps. They are standing on what looks like a rough, textured surface, possibly a pile of sand or dirt. Behind them, there's a structure that looks like the side of a boat. The overall impression is of a group of workers or crew members. The numbers "17584" are written at the top, and at the bottom, along with the word "CHADWICK" which likely refers to the photographer or the subject matter.

Created by gemini-2.0-flash on 2025-06-16

Here is a description of the image:

This is a black and white photographic negative. It shows five men standing in a line, likely for a portrait. They are wearing overalls and caps and appear to be workers of some kind, possibly laborers or farmers. The background is a wooden structure, maybe a boat or a building under construction, and what seems to be a dirt or mud ground. Writing is visible at the top and bottom of the image; both show "17584", and the bottom also includes "CHADNICK".

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-31

The image is a black-and-white photograph featuring five men standing side by side. They appear to be dressed in work clothes, including overalls, hats, and some with suspenders. The men are posing in front of a large stack of what looks like ice blocks, suggesting they might be ice harvesters or workers in an ice storage facility. The ground in front of them is covered with what seems to be ice shavings or debris. The photograph has a caption at the top that reads "17584." and at the bottom, it reads "17584. CHADNICK." This could indicate a cataloging number and possibly a name related to the image. The overall setting and attire of the men suggest a historical context, likely from the early 20th century or late 19th century.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-05

The image is a black-and-white photograph showing five individuals standing together outdoors. They are all dressed in work attire, which includes overalls and caps. The background features what appears to be a structure with steps and some materials or debris, possibly indicating a construction or industrial setting. The individuals are standing close to each other, suggesting camaraderie or teamwork. The photograph has a label at the top and bottom, with the numbers "17584" prominently displayed. The bottom label also includes the name "CHADNICK." The overall appearance of the image suggests it might be from a historical document or archive.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-05

This is a black-and-white photograph showing five men posed together. They are dressed in white overalls with suspenders, and some of them are wearing hats. The men appear to be standing in an industrial setting, possibly a shipyard, as there is a large curved structure in the background that resembles a ship's hull. The ground appears to be covered in some kind of debris or sand. The image is labeled with the number "17584" and the name "Chadwick" at the bottom. The photograph has a vintage look, suggesting it was taken some time ago.