Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 36-44 |
| Gender | Female, 77.2% |
| Sad | 70.3% |
| Happy | 8.6% |
| Calm | 7.9% |
| Confused | 7.7% |
| Angry | 1.6% |
| Surprised | 1.3% |
| Fear | 1.3% |
| Disgusted | 1.2% |

Feature analysis

Amazon

AWS Rekognition

| Label | Confidence |
| --- | --- |
| Person | 92% |

Categories

Imagga

Created on 2022-01-23

| Category | Confidence |
| --- | --- |
| beaches seaside | 74.4% |
| interior objects | 13.8% |
| streetview architecture | 10.7% |

Captions

Microsoft

Created by unknown on 2022-01-23

| Caption | Confidence |
| --- | --- |
| a group of people in a cage | 70% |
| a close up of a cage | 69.9% |
| a group of people standing in a cage | 58.7% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-14

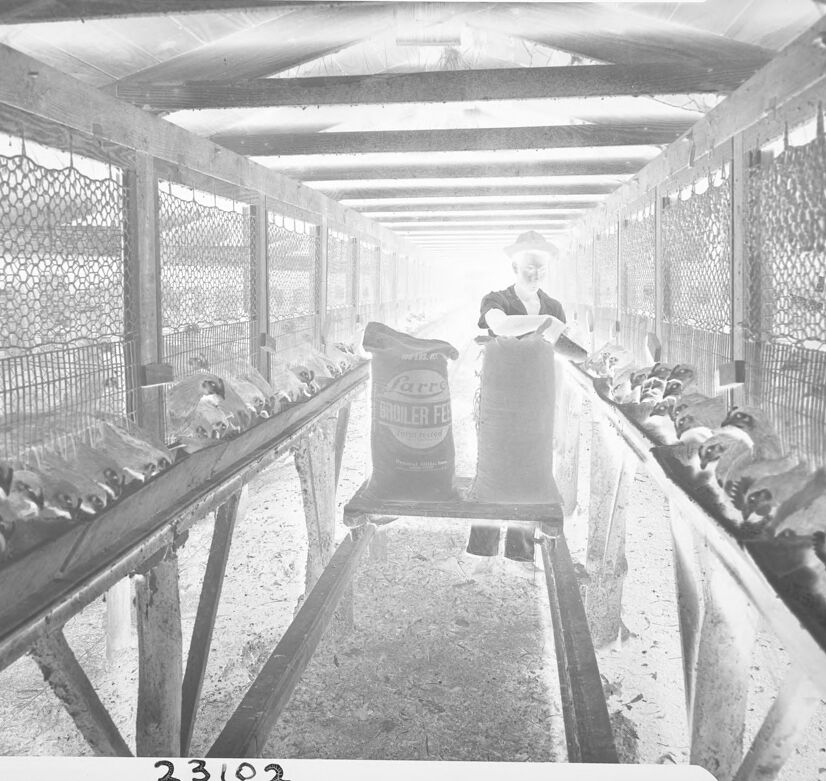

a photograph of a chicken coop.

Salesforce

Created by general-english-image-caption-blip on 2025-05-22

a photograph of a man standing in a caged in a cage

OpenAI GPT

Created by gpt-4o-2024-08-06 on 2025-06-23

The response was filtered due to the prompt triggering Azure OpenAI's content management policy. The content filter results follow.

hate: safe

self-harm: safe

sexual: safe

violence: medium

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-20

The image shows the interior of what appears to be an animal enclosure or pen. The space is long and narrow, with a wire mesh or fencing lining the walls on both sides. There are several cages or pens visible, each containing what look like small animals, possibly rabbits or other small mammals. In the center of the image, a person can be seen carrying or moving a large bag or container, likely containing food or supplies for the animals. The overall scene suggests this is some kind of animal housing or breeding facility, though the specific purpose is not entirely clear from the image alone.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-20

This appears to be a historical black and white photograph of a poultry or chicken coop interior. The image shows multiple tiered rows of cages or compartments along the walls, with what appears to be chickens housed inside them. The structure has a covered roof with support beams, and there appears to be some kind of mesh or wire fencing material visible on the walls. Someone is visible in the background, presumably tending to the birds. The setup looks to be an early example of a commercial or organized poultry farming operation. The image has numbers visible (23102) which suggests it may be from an agricultural documentation or archive.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-03

The image is a black-and-white photograph of a man in a barn, surrounded by rows of animal cages. The man is standing in the center of the image, wearing a dark shirt and hat, and appears to be holding a large bag of feed. The cages are made of metal and have wire mesh sides, with some of them containing small animals that appear to be rabbits or guinea pigs. The background of the image shows the interior of the barn, with a dirt floor and wooden beams supporting the roof. The overall atmosphere of the image suggests a rural or agricultural setting, possibly a farm or animal shelter.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-03

The image depicts a black-and-white photograph of a man standing in a long, narrow room with rows of cages on either side. The man is wearing a dark shirt and a hat, and he appears to be holding a large bag or sack. The cages are filled with what appear to be chickens or other small birds.

In the foreground, there is a large bag or sack with the words "TURKEY BROILER FEED" printed on it. The bag is sitting on a wooden platform or table, and it appears to be being used to feed the birds in the cages.

The background of the image shows the long, narrow room with rows of cages on either side. The walls of the room are made of wood or metal, and there are windows or vents along the top of the walls. The floor of the room is covered in a layer of dirt or straw, and there are several pieces of equipment or machinery visible in the background.

Overall, the image suggests that this is a poultry farm or breeding facility, where birds are being raised for food or other purposes. The presence of the large bag of feed and the cages filled with birds suggests that the facility is focused on raising turkeys or other types of poultry.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-06-02

The image is a black-and-white photograph of a person standing inside a poultry farm. The individual is wearing a hat and appears to be feeding the birds. The poultry farm consists of long, narrow enclosures with wire mesh walls and wooden frames. Inside these enclosures, there are rows of chickens. The person is standing in the middle of the enclosures, holding a bag of feed, and is likely distributing the feed to the birds. The photograph has a vintage look, suggesting it was taken several decades ago. There is a number "23102" printed at the bottom of the image, which could be a reference number or a code.

Created by amazon.nova-lite-v1:0 on 2025-06-02

The image appears to be a black-and-white photograph of a person standing inside a poultry farm or chicken coop. The person is wearing a hat and a shirt, and they are standing on a platform with two sacks of feed in front of them. The person is looking directly at the camera. On either side of the person, there are rows of chickens in cages, with some of them facing the person. The image has a watermark with the text "23102" in the bottom left corner.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-17

Here's a description of the image:

Overall Scene: The image is a black and white, slightly high-angle shot of a chicken coop interior. The perspective creates a long, tunnel-like effect, drawing the eye towards a bright source of light at the end.

Structure: The coop appears to be constructed with wooden framing. Two long rows of cages, covered with wire mesh, run along the sides. The ceiling is supported by beams.

Chickens: Numerous chickens line the troughs of the cages on both sides. They appear to be focused on eating.

Person: A person, presumably a farmer or worker, stands in the center of the coop near a large, full feed bag and a barrel. They seem to be working with the feed, potentially refilling the troughs for the chickens.

Other Details:

- The ground appears to be covered in wood shavings or a similar material.

- There is a sign on the feed bag that reads "Farro BROILER FEED".

Overall Impression: The image captures a scene of poultry farming. The lighting creates a stark contrast, emphasizing the geometric structure of the coop and the repetitive rows of cages. It seems like a typical daily operation in the raising of chickens.

Created by gemini-2.0-flash on 2025-05-17

The monochrome photograph depicts a person surrounded by chickens in a commercial setting. The photo has been edited to change the colors to a negative view, reversing the dark and light areas of the original photo.

The subject of the photo is a person standing between two long, narrow troughs or feeding stations filled with chickens. The individual is wearing a short-sleeved shirt and a hat, looking directly at the viewer, and leaning on what appears to be a large bag of feed with the label "Larro BROILER FEED." There is a second, similar bag beside the bag.

The background shows a long, narrow structure with rows of cages or pens along both sides. The cages are made of wire mesh, and each contains a row of chickens. Above, the structure appears to have a slatted or beamed ceiling, casting geometric shadows down the length of the hall.

The image has a frame or border that is white, with the number "23102" stamped upside down along the top border and right-side up along the bottom.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-05

The image depicts an indoor poultry farm setup, likely from an earlier era given the black-and-white nature of the photograph. The setting is a long, narrow structure with a wooden framework and wire mesh walls, allowing for ventilation and light.

In the foreground, a person is standing on a raised platform, holding a large sack labeled "Purina Broiler Feed," which suggests that the feed is intended for broiler chickens. The person appears to be in the process of distributing feed to the chickens.

On either side of the central walkway, there are rows of chickens confined in wire cages or pens. The chickens are densely packed, which is typical of intensive poultry farming practices aimed at maximizing space and efficiency.

The overall environment appears to be designed for commercial poultry production, focusing on raising broiler chickens for meat. The presence of the Purina feed bag indicates a reliance on commercial feed to support the rapid growth of the chickens. The setup reflects a systematic approach to poultry farming, emphasizing efficiency and productivity.

Qwen

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-03

This is a black-and-white historical photograph showing a man working in a poultry barn. The image depicts a long row of raised, slatted cages containing chickens, likely broilers, which are being raised for meat production. The man, dressed in a dark shirt and hat, is standing in the aisle between the rows of cages. He is holding a sack, possibly containing feed, and appears to be in the process of feeding the birds. The barn has a wooden structure with a roof and wire mesh on the sides, allowing for ventilation. The lighting suggests it is daytime, and the overall scene captures a moment of agricultural activity. The photograph is labeled with the number "23102" at the top and bottom.

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-03

The image is a black-and-white photograph depicting a man standing in a poultry farm or poultry house. The man is wearing a hat and is holding a feed sack labeled "Purina Broiler Feed." The setting appears to be a long barn or shed with wooden beams and a roof structure. On either side of the man, there are rows of wire cages or compartments housing chickens. The floor of the barn is covered with a mixture of dirt and possibly bedding material. The overall scene suggests a farming environment where the man is likely feeding the chickens. The photograph has a vintage look, with the numbers "23102" written in pencil in the upper and lower corners, indicating it might be a historical or archival photo.