Machine Generated Data

Tags

Amazon

created on 2021-12-15

| Tag | Confidence (%) |
| --- | --- |
| Chair | 99.9 |
| Furniture | 99.9 |
| Human | 99 |
| Person | 98.7 |
| Chair | 98.6 |
| Tai Chi | 93.4 |
| Sport | 93.4 |
| Martial Arts | 93.4 |
| Sports | 93.4 |
| Dining Table | 62.6 |
| Table | 62.6 |
| Portrait | 60.3 |
| Photography | 60.3 |
| Photo | 60.3 |
| Face | 60.3 |

Clarifai

created on 2023-10-15

Imagga

created on 2021-12-15

| Tag | Confidence (%) |
| --- | --- |
| sketch | 42.1 |
| drawing | 33.6 |
| person | 24.9 |
| representation | 24.4 |
| people | 20.1 |
| adult | 19 |
| dance | 16.9 |
| snow | 16.6 |
| portrait | 15.5 |
| fashion | 14.3 |
| posing | 14.2 |
| man | 14.2 |
| attractive | 14 |
| outdoor | 13.8 |
| pose | 13.6 |
| model | 13.2 |
| dancer | 13.1 |
| body | 12.8 |
| human | 12.7 |
| outdoors | 12.7 |
| happy | 12.5 |
| silhouette | 12.4 |
| black | 12 |
| fitness | 11.7 |
| active | 11.7 |
| summer | 11.6 |
| one | 11.2 |
| pretty | 11.2 |
| performer | 11.2 |
| hair | 11.1 |
| winter | 11.1 |
| elegance | 10.9 |
| dress | 10.8 |
| leisure | 10.8 |
| life | 10.3 |
| art | 10.2 |
| happiness | 10.2 |
| sensuality | 10 |
| smile | 10 |
| male | 9.9 |
| dancing | 9.6 |
| jump | 9.6 |
| clothing | 9.5 |
| women | 9.5 |
| wall | 9.4 |
| action | 9.3 |
| sport | 9.2 |
| tree | 9.2 |
| fun | 9 |
| lady | 8.9 |
| sax | 8.8 |
| jumping | 8.7 |
| lifestyle | 8.7 |
| cold | 8.6 |
| motion | 8.6 |
| creation | 8.5 |
| casual | 8.5 |
| freedom | 8.2 |
| teenager | 8.2 |
| exercise | 8.2 |
| style | 8.2 |
| sunset | 8.1 |
| success | 8 |
| sexy | 8 |
| forest | 7.8 |
| face | 7.8 |
| leg | 7.8 |
| men | 7.7 |
| frost | 7.7 |
| performance | 7.7 |
| weather | 7.6 |
| hand | 7.6 |
| blond | 7.5 |
| passion | 7.5 |
| joy | 7.5 |
| wind instrument | 7.4 |
| teen | 7.3 |
| alone | 7.3 |
| business | 7.3 |
| businessman | 7.1 |
| sky | 7 |
| modern | 7 |

Google

created on 2021-12-15

| Tag | Confidence (%) |
| --- | --- |
| Black-and-white | 85.8 |
| Style | 84 |
| Chair | 81.8 |
| Art | 80.3 |
| Waist | 77.8 |
| Font | 77.1 |
| Monochrome photography | 76.4 |
| Monochrome | 76.2 |
| Dance | 74.5 |
| Happy | 72.4 |
| Choreography | 71.1 |
| Performing arts | 70.6 |
| Event | 69.8 |
| Balance | 69.8 |
| Picture frame | 69 |
| Athletic dance move | 68.8 |
| Entertainment | 66.4 |
| Fashion design | 65.3 |
| Photo caption | 64.6 |
| Visual arts | 63.9 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

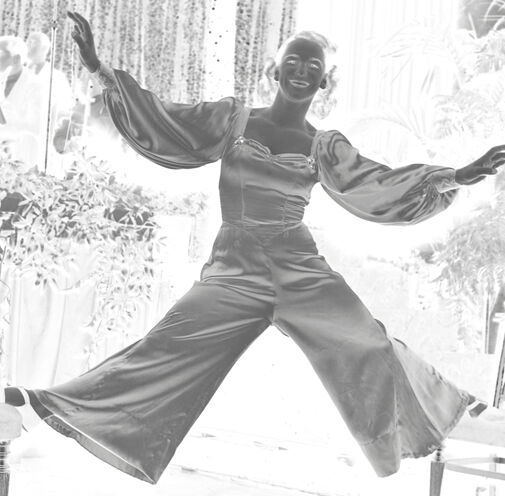

| Attribute | Estimate |
| --- | --- |
| Age | 25-39 |
| Gender | Female, 95.2% |
| Happy | 93.9% |
| Calm | 2.6% |
| Sad | 1.2% |
| Fear | 0.9% |
| Surprised | 0.8% |
| Angry | 0.4% |
| Disgusted | 0.1% |
| Confused | 0.1% |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 100% |

Captions

Microsoft

created on 2021-12-15

| Caption | Confidence |
| --- | --- |
| a person flying through the air while holding an umbrella | 27.7% |
| an old photo of a person | 27.6% |

Text analysis

Amazon

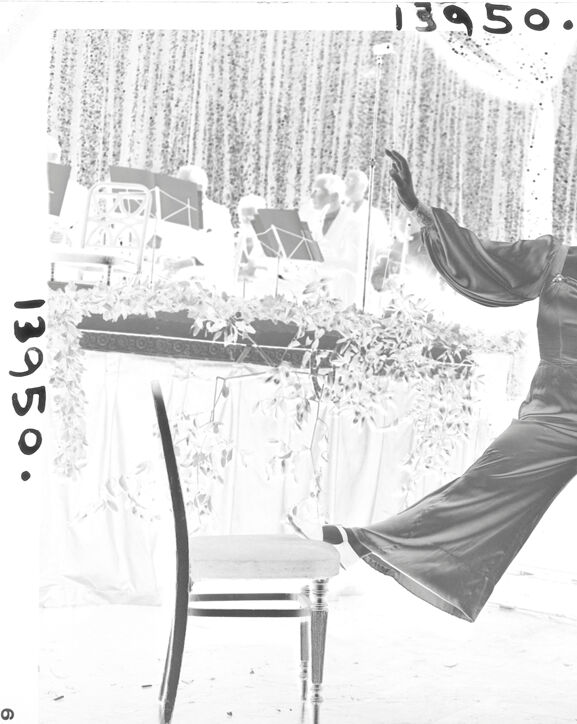

9

13950.

3950.

13950.

6

3950.

13950.

6