Machine Generated Data

Tags

Amazon

created on 2021-12-15

Clarifai

created on 2023-10-15

Imagga

created on 2021-12-15

| Tag | Confidence |
|---|---|
| swab | 35.1 |
| cleaning implement | 31.2 |
| sketch | 30.9 |
| drawing | 26.8 |
| statue | 22.6 |
| picket fence | 21 |
| old | 20.2 |
| representation | 18.5 |
| fence | 18.4 |
| sculpture | 18.3 |
| architecture | 18 |
| history | 17.9 |
| black | 14.4 |
| travel | 14.1 |
| barrier | 14 |
| snow | 13.6 |
| stone | 13.5 |
| monument | 13.1 |
| ancient | 13 |
| sky | 12.7 |
| building | 12.7 |
| art | 12.4 |
| man | 12.1 |
| fountain | 11.9 |
| winter | 11.9 |
| landmark | 11.7 |
| famous | 11.2 |
| historic | 11 |
| structure | 10.9 |
| city | 10.8 |
| column | 10.8 |
| tree | 10.8 |
| people | 10.6 |
| dress | 9.9 |
| tourism | 9.9 |
| marble | 9.8 |
| obstruction | 9.5 |
| vintage | 9.1 |
| person | 9.1 |
| religion | 9 |
| outdoors | 9 |
| male | 8.5 |
| dark | 8.3 |
| light | 8.1 |
| menorah | 8.1 |
| scene | 7.8 |
| cold | 7.7 |
| heritage | 7.7 |
| grunge | 7.7 |
| temple | 7.6 |
| park | 7.6 |
| weather | 7.4 |
| dirty | 7.2 |
| women | 7.1 |

Google

created on 2021-12-15

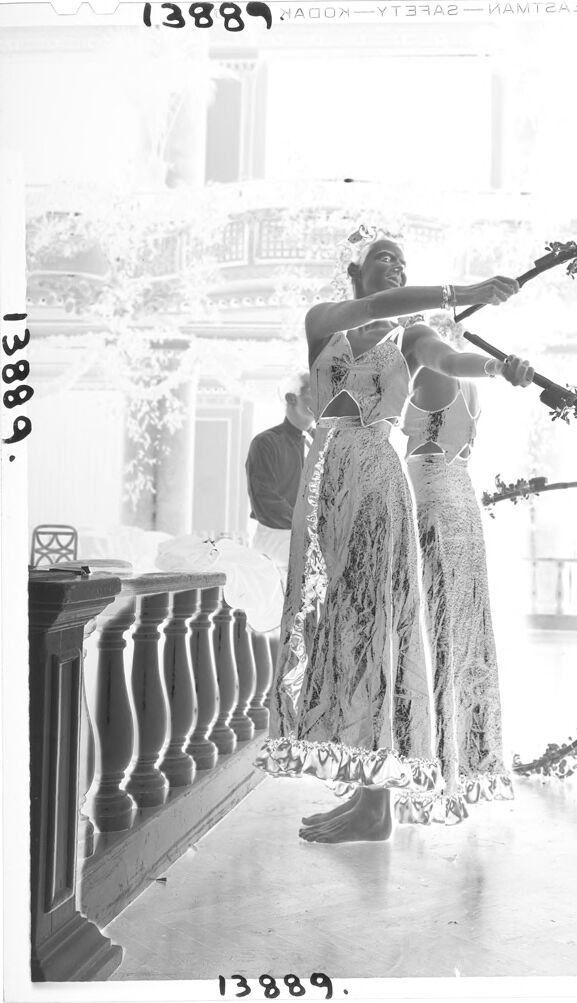

| Tag | Confidence |
|---|---|
| Style | 83.8 |
| Black-and-white | 81.9 |
| Art | 76.6 |
| Monochrome | 72.3 |
| Monochrome photography | 72.1 |
| Sculpture | 69.4 |
| Event | 68.9 |
| Fashion design | 66.2 |
| Stock photography | 64.9 |
| Statue | 64.6 |
| Visual arts | 63.5 |
| Font | 60.9 |
| Vintage clothing | 59.6 |
| History | 56.9 |
| Costume design | 56.1 |
| Illustration | 55.4 |

| ||

Microsoft

created on 2021-12-15

| Tag | Confidence |
|---|---|
| text | 98.1 |
| dress | 94.6 |
| sketch | 69.9 |
| black and white | 66.5 |
| clothing | 61.3 |
| woman | 54.3 |
| wedding dress | 50.4 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

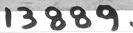

| Attribute | Value |
|---|---|
| Age | 22-34 |
| Gender | Female, 78.3% |
| Calm | 72.2% |
| Confused | 7.1% |
| Sad | 5.8% |
| Angry | 4.1% |
| Disgusted | 3% |
| Surprised | 3% |
| Fear | 2.6% |
| Happy | 2.3% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 2-8 |
| Gender | Female, 93.2% |
| Surprised | 59.6% |
| Calm | 25.2% |
| Fear | 5.1% |
| Confused | 4.1% |
| Sad | 2.9% |
| Happy | 1.2% |
| Disgusted | 1.2% |
| Angry | 0.7% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 23-37 |
| Gender | Female, 51% |
| Sad | 80.1% |
| Calm | 14.8% |
| Confused | 1.5% |
| Fear | 1.5% |
| Surprised | 1.1% |
| Happy | 0.6% |
| Angry | 0.3% |
| Disgusted | 0.2% |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 99.7% |

Captions

Microsoft

created on 2021-12-15

| Caption | Confidence |
|---|---|
| an old photo of a person | 60.5% |
| old photo of a person | 54.4% |

Text analysis

Amazon

13889

13889.

MAMTZA

(3889

NAGON- YT3RA2

13889.

•688E1

MAMTZA

(3889

NAGON-

YT3RA2

13889.

•688E1