Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 22-34 |
| Gender | Female, 96.2% |
| Calm | 91.2% |
| Surprised | 2.9% |
| Sad | 2.2% |
| Happy | 1.7% |
| Confused | 0.9% |
| Angry | 0.8% |
| Fear | 0.2% |
| Disgusted | 0.1% |

Feature analysis

Amazon

AWS Rekognition

| Object | Confidence |
|---|---|
| Chair | 99.7% |

Categories

Imagga

Created on 2021-12-15

| Category | Confidence |
|---|---|
| paintings art | 80.1% |
| interior objects | 18.9% |

Captions

Microsoft

Created by unknown on 2021-12-15

| Caption | Confidence |
|---|---|
| a person sitting on a chair | 75.1% |
| a person sitting in a chair | 75% |
| a man and a woman sitting on a chair | 40.9% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-11

black and white photo of a woman playing the piano.

Salesforce

Created by general-english-image-caption-blip on 2025-05-22

a photograph of a woman sitting at a table with a lamp on it

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-14

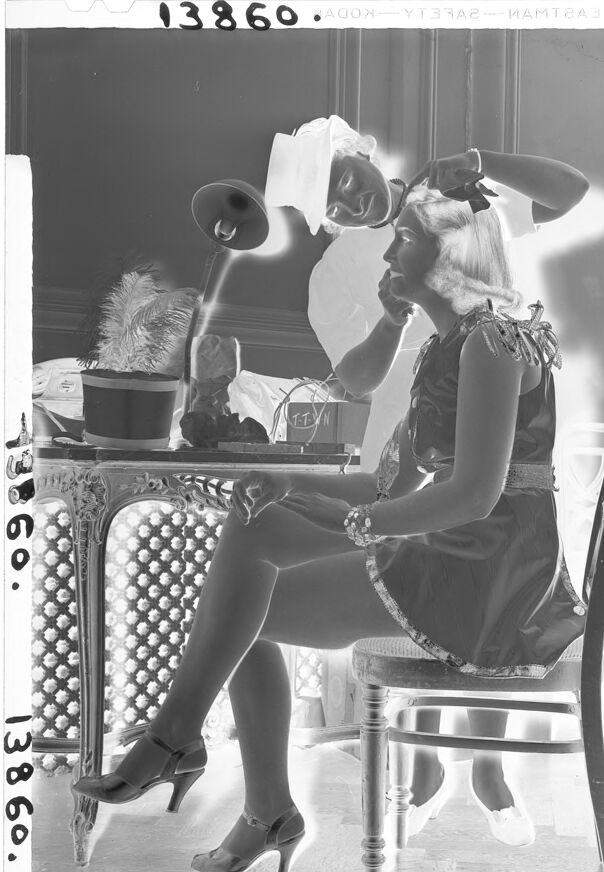

The image is a black-and-white, inverted photograph featuring a person seated on a chair wearing a dress with decorative fringe details and high-heeled shoes. The setting appears to be indoors, with ornate furniture such as a detailed table with intricate patterns. On the table, there are various items, including a feathered decoration and a lamp. The scene suggests a stylized or theatrical context, with attention to fashion and detail.

Created by gpt-4o-2024-08-06 on 2025-06-14

The image appears to be a photographic negative showing a scene indoors. The room features ornate furniture, including a decorative table and wooden chairs with patterned upholstery. On the table, there is a lamp with a curved neck and shade, a magazine with visible text, and a plant with feathery foliage. One seated individual is wearing a short dress with fringe details and high-heeled shoes, while another person is standing and reaching towards their hair, possibly styling it. The floor has a parquet pattern, and the background has wooden paneling and molding, suggesting a sophisticated environment.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-18

The image shows a woman sitting in an ornate chair, dressed in an elaborate costume with a large floral headpiece. She appears to be applying makeup or styling her hair, with various beauty and grooming tools visible on the table in front of her. The image has a vintage, black and white aesthetic, suggesting it may be from an earlier era. The woman's pose and attire suggest she is likely a performer or actress preparing for a performance or photoshoot.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-18

This appears to be a black and white photograph from what looks like the 1930s or 1940s. The image shows someone seated at an ornate table or vanity, wearing what appears to be a dress or skirt and heels. The furniture has decorative beaded or studded details typical of that era. There's some bright lighting coming in from what might be a window, creating dramatic contrast in the image. On the table surface, there appear to be some items that could be beauty or fashion accessories. The overall composition and styling suggests this might be from a fashion shoot or personal portrait session from that time period.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-09

The image depicts a woman sitting in front of a vanity, engaged in the act of removing her hat. She is dressed in a dark dress with a decorative trim around the hem and a matching belt, paired with high heels. Her hair is styled in an updo, and she wears a bracelet on her right wrist.

The woman sits on a chair in front of a vanity, which features a mirror and a lamp. The room's background is not clearly visible, but it appears to be a bedroom or dressing room. The overall atmosphere of the image suggests that the woman is preparing for a social event or has just finished getting ready for the day.

The image is a black-and-white photograph, likely taken in the early 20th century, given the style of the woman's clothing and the setting. The image has a vintage feel to it, with a sense of nostalgia and elegance.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-09

This image is a black-and-white photograph of a woman sitting in front of a vanity, applying makeup. She is wearing a short dress with a high neckline and a decorative belt around her waist. Her hair is styled in an updo, and she has on high heels. The woman is seated on a chair with a curved back and legs, facing the vanity. The vanity has a mirror attached to it, and there are various items on top, including a lamp, a plant, and a box.

The background of the image appears to be a room with a door and a window. The overall atmosphere of the image suggests that the woman is getting ready for a performance or event, possibly a theatrical production. The image has a vintage feel to it, with the black-and-white color scheme and the style of the woman's clothing and hairstyle.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-08

The image is a black-and-white photograph of a woman sitting on a chair in a room. She is dressed in a dress with a belt and high heels. She is looking at something in front of her, possibly a mirror. She is wearing a bracelet and a ring on her left hand. Behind her is a table with a potted plant, a lamp, and other objects. There is a person standing behind her, possibly fixing her hair.

Created by amazon.nova-pro-v1:0 on 2025-06-08

A black-and-white photo of a woman sitting on a chair with a person standing behind her. The woman is wearing a dress, bracelet, and high heels. She is holding her hair while the person behind her is probably fixing her hair. On the table in front of her is a potted plant, a box, and other objects. On the right side, there is another chair. Behind them is a glass window with a door.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-16

Here's a description of the image:

Overall Impression: The image is a negative photograph in black and white. It depicts a woman being styled, likely for a performance or event. The scene suggests a moment of preparation behind the scenes.

Key Elements:

- Subject: A woman is the central focus, seated on a chair. She's wearing a short, decorative dress with embellishments on the shoulders. She also has jewelry on her wrist and appears to be wearing heels.

- Hairstylist: A person, dressed in what seems to be a white work garment (possibly a smock or uniform) and wearing a head covering, is attending to the woman's hair. Their hands are visible, working on the woman's hairstyle.

- Setting: The scene seems to be indoors, likely a backstage or dressing room. A richly decorated table with ornate legs and a mesh-like detail is visible, adorned with a lamp, some sort of container holding a feather, and other items.

- Background: In the background, there's a partially visible figure dressed in a uniform or costume. A simple chair sits near this figure.

- Lighting: The lighting is uneven, creating areas of bright contrast and shadow. There's a significant burst of light on the right side of the image.

Inferences:

- The scene likely involves a performer or someone preparing for a performance.

- The presence of the hairstylist, the attire of the woman and the background figures suggests a professional setting.

- The image is a classic example of a candid shot, giving a peek into the preparation process.

Created by gemini-2.0-flash on 2025-05-16

The image shows a woman seated on a chair, presumably in a dressing room, while another person, possibly a hairdresser, works on her hair. The woman is dressed in a short, ornate dress with details like fringing and a decorative belt, and she is wearing heeled shoes and a bracelet. She appears to be in profile, looking to her right.

The person tending to her hair is wearing a white uniform, possibly a stylist or makeup artist, and has a white head covering. A lamp is positioned near them, providing light.

In the background, a small table is visible, holding various items like a hat with feathers, bottles, and wires. Another person wearing a white uniform and hat is partially visible behind a chair, possibly an assistant or another member of the crew.

The image is in black and white and seems to be a photograph from a vintage era, possibly related to theater or performance. The format appears to be a photographic negative, which means the tones are inverted.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-01

The image is a black-and-white photograph depicting a woman in a vintage setting, likely from the mid-20th century. She is seated on a chair in front of a dressing table with a large mirror. The woman is elegantly dressed in a sleeveless dress with a belt, and she is wearing high-heeled shoes. She appears to be trying on or adjusting a hat while looking at her reflection in the mirror.

The dressing table is adorned with various items, including a large feathered hat, a smaller hat, a box, and a handbag. The setting suggests a boudoir or dressing room, with ornate furniture and a sense of luxury. The photograph captures a moment of personal grooming and fashion, highlighting the style and elegance of the era. The lighting is soft, enhancing the overall aesthetic of the scene.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-09

The image is a black-and-white photograph with a vintage aesthetic. It features a person sitting on a chair in a room that appears to be a dressing room or a backstage area. The individual is wearing a sleeveless dress with a sequined or embellished design, and high-heeled shoes. They are holding a white paper or cloth up to their face, possibly adjusting their makeup or hair. The room includes a table with various objects such as a lamp, a hat, and what appears to be a feathered accessory. There is also a mirror in the background reflecting part of the room. The overall lighting is bright, with a strong light source coming from behind the person, creating a dramatic effect. The photograph has a Kodak safety film marking on the left side, and there are multiple watermarks of the number "13860" throughout the image.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-09

This black-and-white photograph shows a woman sitting on a chair in front of a dressing table. She appears to be applying makeup or adjusting her appearance. The woman is wearing a sleeveless dress with a patterned design and high-heeled shoes. Her hair is styled in a vintage manner. The dressing table has various items on it, including a feathered hat, a handbag, and other personal belongings. There is a lamp on the table, and a mirror reflects part of her image. The setting seems to be a dressing room or a private space with elegant furniture, including a decorative chair and a patterned table. The image has a vintage feel, likely from the mid-20th century.