Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 48-66 |
| Gender | Female, 53.5% |
| Angry | 33.9% |
| Calm | 17.2% |
| Sad | 12.7% |
| Confused | 12.4% |
| Disgusted | 8.3% |
| Happy | 6.3% |
| Surprised | 4.8% |
| Fear | 4.4% |

Feature analysis

Amazon

AWS Rekognition

| Feature | Confidence |
| --- | --- |
| Person | 99.5% |

Categories

Imagga

Created on 2021-12-15

| Category | Confidence |
| --- | --- |
| paintings art | 93.4% |
| interior objects | 6% |

Captions

Microsoft

Created by unknown on 2021-12-15

| Caption | Confidence |
| --- | --- |
| a group of people posing for a photo | 75.1% |
| a group of people posing for the camera | 75% |
| a group of people posing for a picture | 74.9% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-13

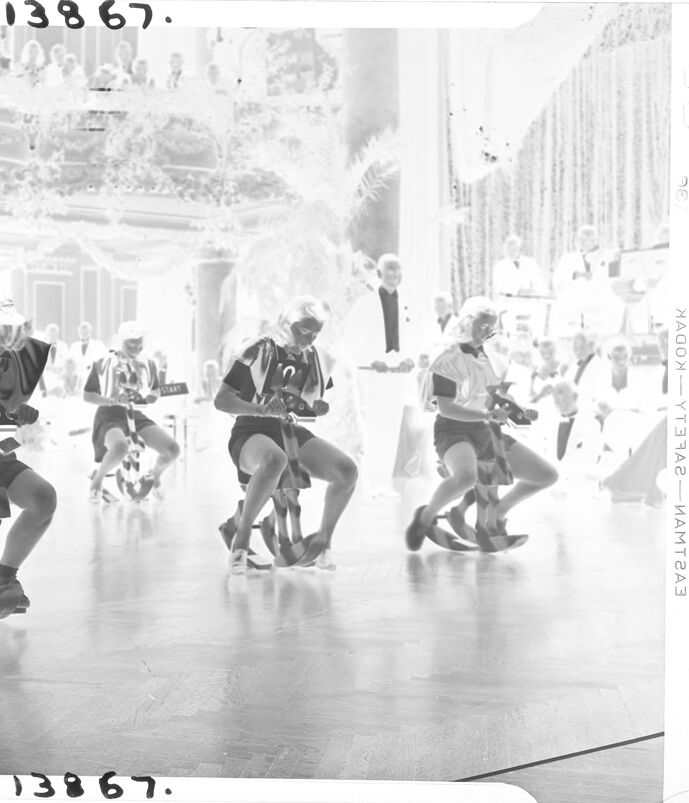

dancers perform in the 1950s.

Salesforce

Created by general-english-image-caption-blip on 2025-05-22

a photograph of a group of people are dancing in a dance floor

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-15

This image is a black-and-white photographic negative. It depicts a lively scene, likely an indoor performance or staged event. Several individuals are riding hobby horses while dressed in costumes resembling sports uniforms or matching outfits. The riders appear to be energetically engaged, possibly mimicking a race or playful activity. The environment features ornate architectural details and spectators watching in the background. Plants and decorative elements further enhance the grandeur of the setting.

Created by gpt-4o-2024-08-06 on 2025-06-15

The image is a photographic negative showing a performance or event where several individuals are participating in a playful activity. The group is in an indoor setting with a decorative background, featuring plants and what appears to be a large audience observing from a balcony or upper level.

In the foreground, there are several people wearing athletic or casual clothing, each holding a hobby horse, which is a toy made from a stick with a horse's head, used for a riding imitation. The participants appear to be engaged in some sort of staged or choreographed event, possibly for entertainment.

The photograph's negative qualities make the colors and lighting appear inverted—the figures, which would normally be in light clothing, appear darker, and the background details are highlighted unlike their usual depiction in a regular photograph. The image's top and bottom edges feature a handwritten or printed number, "13867," which suggests cataloging or indexing. The side of the image shows a partial film border indicating it is Kodak safety film.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-18

The image appears to show a performance or dance scene. It depicts several people on a stage or performance area, dressed in what looks like athletic or dance attire. They are in various poses and movements, suggesting a choreographed routine or dance performance. The image has a black and white aesthetic, giving it a vintage or historical feel.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-18

This appears to be a black and white photograph of what looks like a roller derby or roller skating event. Several people are shown skating on what appears to be a smooth indoor floor. The skaters are wearing similar uniforms with shorts and appear to be in motion, captured mid-stride or mid-movement. The image has a vintage quality to it, suggesting it may be from an earlier era. The lighting creates interesting contrasts and the motion of the skaters gives the photo a sense of dynamic energy and movement.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-30

This image is a black-and-white photograph of a group of people riding rocking horses in a large room. The people are dressed in uniforms, with some wearing shorts and others wearing skirts. They are all wearing helmets and appear to be in motion, as if they are participating in a competition or event.

The background of the image shows a large room with a high ceiling and ornate decorations. There are people standing along the walls, watching the event. The overall atmosphere suggests a fun and lively occasion, possibly a charity event or a community gathering.

The image has a vintage feel to it, with the black-and-white tone and the style of the uniforms suggesting that it may have been taken in the mid-20th century. Overall, the image captures a unique and entertaining moment in time, showcasing the creativity and enthusiasm of the participants.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-30

The image depicts a black-and-white photograph of a group of people participating in a race on unicycles, with the numbers "13867" handwritten at the top and bottom of the photo. The scene is set in a large room with a high ceiling, featuring a polished floor and a crowd of spectators in the background. The participants are dressed in athletic attire, including shorts, t-shirts, and sneakers, and are equipped with helmets and other safety gear.

The image appears to be a vintage photograph, likely taken in the mid-20th century, given the style of the clothing and the quality of the image. The presence of the handwritten numbers suggests that the photo may have been taken for documentation or archival purposes.

Overall, the image captures a unique and dynamic moment in time, showcasing the excitement and energy of the unicycle race. The photograph's historical significance and nostalgic value make it a fascinating piece of visual history.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-05-29

The image appears to be a black and white photograph of a group of people participating in a unique activity. They are riding on exercise bikes, and the bikes are positioned on the floor. The people are dressed in what seems to be uniforms, and they are all wearing helmets for safety. Some of them are wearing sneakers, while others are wearing leather shoes. The activity seems to be taking place in a room with a high ceiling and a chandelier, suggesting a formal or elegant setting.

The image also includes a watermark in the top left corner that reads "13867," which could be a reference to a specific event or location. The image has a vintage or historical feel, possibly from the early 20th century. Overall, the image depicts a group of people engaging in a unique and possibly challenging physical activity, while dressed in uniforms and wearing protective gear.

Created by amazon.nova-pro-v1:0 on 2025-05-29

A black-and-white photo shows a group of people performing a synchronized routine on unicycles. They are wearing matching outfits and helmets, and their bodies are bent forward, suggesting a coordinated movement. The photo is taken from a low angle, capturing the performers' legs and unicycles in motion. The background features a blurred audience seated in chairs, possibly in a theater or performance space.

Google Gemini

Created by gemini-2.0-flash on 2025-05-16

Certainly! Here's a description of the image:

General Description

The image appears to be a black and white photographic negative of an indoor event. It shows a group of people seemingly engaged in a hobby horse race. The event appears to be taking place in a large room, possibly a ballroom or auditorium, with a crowd watching from the background.

Foreground

Several figures are seated on hobby horses, appearing to be in motion. Each rider seems focused and is dressed in some kind of uniform, suggesting a competitive nature to the event.

Background

The background features decorations that seem to be indicative of a special occasion, such as floral arrangements and banners. A large crowd is observing the activity, suggesting this event may be a performance or competition.

Overall Impression

The image evokes a sense of novelty and amusement, as the hobby horse race appears to be a lighthearted and playful event. The setting and attire of the observers suggest that this might be a formal occasion with an element of fun.

Created by gemini-2.0-flash-lite on 2025-05-16

Here's a description of the image:

Overall Impression:

The image is a black and white negative depicting a unique athletic event or competition. It's likely an older photograph, given the film quality and the subjects' attire. The scene seems to be taking place indoors in a grand hall or auditorium, with an audience present.

Key Elements:

- The Participants: There are several individuals in the foreground, all seemingly involved in a race or demonstration. They are on what appear to be small wooden contraptions, each with two "feet" or platforms. Their attire includes shorts, short-sleeved shirts, and possibly helmets or caps.

- The Activity: The individuals are engaged in what looks like some sort of simulated "horseback riding" competition. They're straddling the wooden devices and using their legs and arms to propel themselves forward, or perhaps even to balance.

- The Setting: The scene is set in a large, well-lit room with spectators visible in the background, who appear to be observing. The architectural style suggests a historic setting.

- The "Start" and "Numbers": There is a "START" sign visible, indicating the beginning of the competition. Each of the participants also has a number marked on their shirt.

- The Audience: A large group of people are standing in the background, observing the competition.

Overall, the photograph captures a unique and possibly humorous athletic event from an earlier time.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-01

The image appears to be a black-and-white photograph capturing a group of performers in a synchronized dance or acrobatic routine. The performers are wearing costumes that include shorts, knee-high socks, and some form of headgear. They are in a dynamic pose, with one leg raised and bent at the knee, and the other leg extended behind them. They are holding small objects, possibly props, in their hands.

The setting looks like an indoor venue, possibly a theater or a large hall, with an audience visible in the background. The audience is seated and appears to be watching the performance. The performers are on a polished floor, and the background includes decorative elements such as curtains and possibly chandeliers, suggesting a formal or festive event.

The photograph has a vintage quality, indicated by the black-and-white color scheme and the style of the costumes and setting. The number "13867" is visible at the top and bottom of the image, which might be a reference or catalog number. The text on the right side of the image includes the words "EASTMAN," "SAFETY," and "KODAK," which are associated with photographic film, further suggesting that this is an archival or historical photograph.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-09

The image appears to be a black-and-white photograph of a race involving participants on stationary bicycles. The event seems to be taking place indoors, likely within a large hall or ballroom, as indicated by the ornate ceiling and chandeliers in the background. The audience is seated around the hall, watching the race, and there are a few individuals in the background who might be officials or staff members.

The participants are wearing cycling outfits, including short-sleeved tops and shorts, and they are focused on pedaling their stationary bikes. Some have helmets or headgear, suggesting a level of safety consideration for the event. The setting gives the impression of a formal or celebratory occasion, possibly a charity event or a special gathering where this activity is being incorporated as a form of entertainment or competition.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-09

This black-and-white photograph depicts a group of people riding mechanical scooters in a grand, ornate space. The riders, dressed in casual attire, appear to be participating in a recreational or performance activity. The background features a chandelier, decorative elements, and a large audience seated and standing, watching the event. The image has a vintage quality, suggesting it might be from a past era. The number "13867" is prominently displayed at the top and bottom of the image.