Machine Generated Data

Tags

Amazon

created on 2021-12-15

Clarifai

created on 2023-10-15

Imagga

created on 2021-12-15

| Tag | Confidence |
|---|---|
| brass | 57.3 |
| wind instrument | 52 |
| cornet | 47.1 |
| sax | 44.2 |
| musical instrument | 29.3 |
| negative | 24.1 |
| film | 22.6 |
| male | 19.8 |
| people | 19.5 |
| businessman | 18.5 |
| person | 17.7 |
| trombone | 16.8 |
| man | 16.8 |
| drawing | 16.6 |
| business | 15.8 |
| black | 15.6 |
| silhouette | 14.9 |
| work | 13.3 |
| photographic paper | 13 |
| manager | 12.1 |
| design | 11.8 |
| professional | 11.8 |
| adult | 11 |
| chart | 10.5 |
| art | 10.5 |
| symbol | 10.1 |
| old | 9.7 |
| portrait | 9.7 |
| group | 9.7 |
| technology | 9.6 |
| engineer | 9.6 |
| looking | 9.6 |
| serious | 9.5 |
| plan | 9.4 |
| men | 9.4 |
| grunge | 9.4 |
| human | 9 |
| sky | 8.9 |
| job | 8.8 |
| photographic equipment | 8.7 |
| pencil | 8.6 |
| hand | 8.3 |
| new | 8.1 |
| office | 8 |
| building | 7.9 |
| designing | 7.9 |
| pensive | 7.8 |
| space | 7.8 |
| construction | 7.7 |
| engineering | 7.6 |
| horn | 7.6 |
| elegance | 7.6 |
| style | 7.4 |
| device | 7.4 |
| event | 7.4 |
| graphic | 7.3 |
| team | 7.2 |
| women | 7.1 |
| day | 7.1 |
| architecture | 7 |

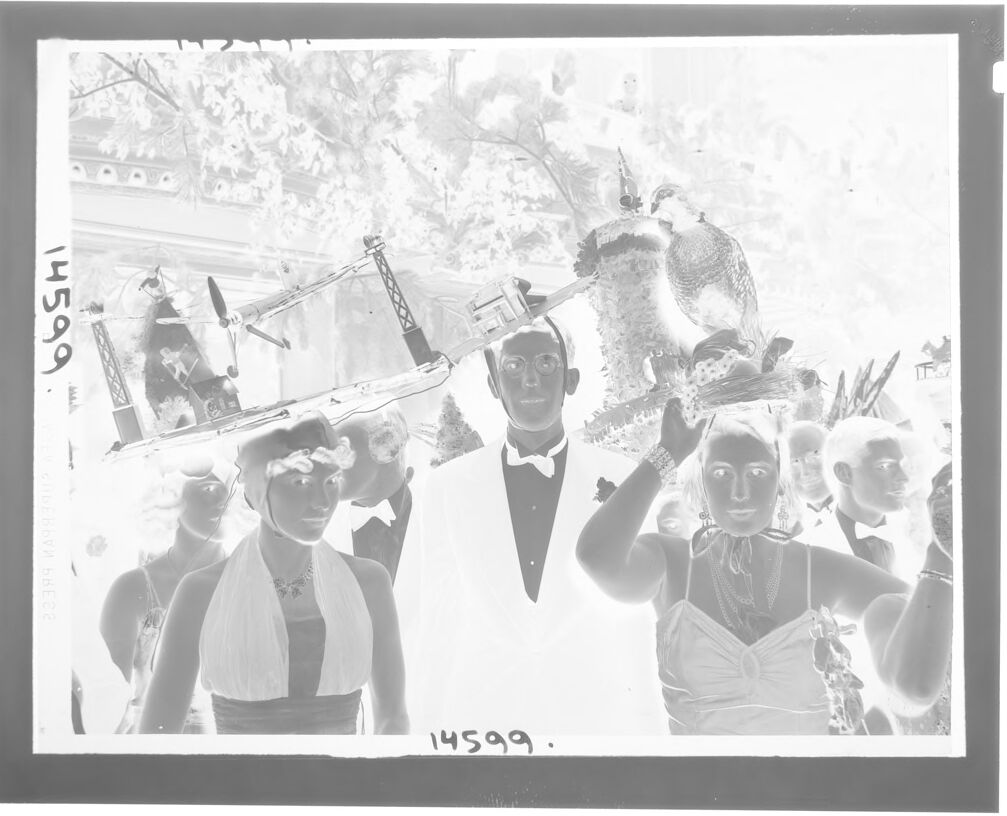

Google

created on 2021-12-15

| Tag | Confidence |
|---|---|
| Picture frame | 85.4 |
| Gesture | 85.3 |
| Art | 78.9 |
| Font | 78.8 |
| Hat | 78 |
| Suit | 74.7 |
| Vintage clothing | 70.6 |
| Vest | 70 |
| Event | 69.5 |
| Monochrome photography | 66.6 |
| Visual arts | 66.5 |
| Formal wear | 63.9 |
| Monochrome | 63.7 |
| Room | 62.9 |
| Stock photography | 61.7 |
| Illustration | 60 |
| History | 59.8 |
| Photographic paper | 57.9 |
| Photo caption | 57.6 |
| T-shirt | 56.2 |

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 28-44 |
| Gender | Male, 71.2% |
| Surprised | 54% |
| Calm | 11.8% |
| Angry | 11.1% |
| Sad | 9.8% |
| Disgusted | 6.6% |
| Happy | 2.5% |
| Confused | 2.3% |
| Fear | 1.8% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 19-31 |
| Gender | Female, 91.4% |
| Angry | 59% |
| Happy | 19.1% |
| Surprised | 14.5% |
| Fear | 3.4% |
| Calm | 1.7% |
| Confused | 1.7% |
| Sad | 0.3% |
| Disgusted | 0.3% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 22-34 |
| Gender | Female, 95% |
| Surprised | 47.6% |
| Calm | 24.7% |
| Confused | 9.1% |
| Angry | 8.5% |
| Happy | 7.4% |
| Sad | 1.5% |
| Disgusted | 0.8% |
| Fear | 0.4% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 18-30 |
| Gender | Female, 94.5% |
| Calm | 79.8% |
| Confused | 5.6% |
| Sad | 4% |
| Angry | 3.9% |
| Surprised | 3.9% |
| Happy | 1.5% |
| Fear | 0.8% |
| Disgusted | 0.5% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 26-40 |
| Gender | Female, 73% |
| Happy | 83.9% |
| Calm | 10.9% |
| Sad | 3.2% |
| Angry | 0.8% |
| Fear | 0.5% |
| Confused | 0.3% |
| Surprised | 0.2% |
| Disgusted | 0.2% |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 100% |

Captions

Microsoft

created on 2021-12-15

| Caption | Confidence |
|---|---|
| a vintage photo of a group of people posing for the camera | 88.7% |
| a vintage photo of a group of people posing for a picture | 88.6% |
| an old photo of a group of people posing for the camera | 88.5% |

Text analysis

Amazon

14599

14599.

ИАЧЯЗЯЦ

28399

28399 ИАЧЯЗЯЦ ALL

ALL

14599.

14599

14599.

14599