Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 34-50 |
| Gender | Male, 54.8% |
| Disgusted | 45% |
| Sad | 45% |
| Confused | 45% |
| Happy | 54.8% |
| Fear | 45% |
| Surprised | 45.1% |
| Calm | 45% |
| Angry | 45% |

Feature analysis

AWS Rekognition

| Feature | Confidence |
| --- | --- |
| Person | 99.3% |

Categories

Imagga

Created on 2019-11-16

| Category | Score |
| --- | --- |
| events parties | 49.2% |
| food drinks | 31.7% |
| interior objects | 10.9% |
| people portraits | 4% |
| cars vehicles | 2% |
| text visuals | 1.5% |

Captions

Microsoft

Created by unknown on 2019-11-16

| Caption | Confidence |
| --- | --- |
| a man standing in front of a store | 71.2% |
| a man standing in front of a refrigerator | 45.1% |
| a man that is standing in front of a store | 45% |

Clarifai

No captions written

Salesforce

Created by general-english-image-caption-blip on 2025-05-27

a photograph of a man standing at a counter with a counter top

Created by general-english-image-caption-blip-2 on 2025-07-10

a black and white photo of three men standing behind a counter

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-17

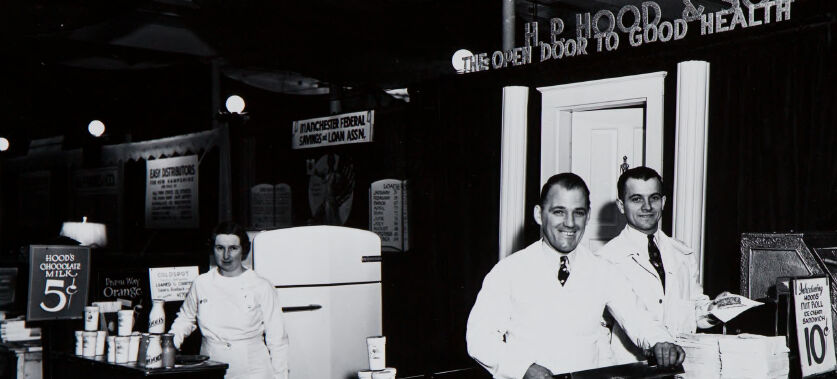

The image depicts a vintage trade show or exhibition booth organized by Hood & Sons, showcasing dairy products as part of their brand. The foreground includes a display counter with stacks of packaged goods, plates containing samples, and signage highlighting prices, such as Hood’s chocolate milk for 5 cents and other products. A refrigerator is visible in the background, along with promotional signage stating, "The Open Door to Good Health." The booth is designed to promote the company’s offerings in a professional and appealing manner. The overall setting is reminiscent of mid-20th-century marketing efforts.

Created by gpt-4o-2024-08-06 on 2025-06-17

The image depicts a vintage advertisement or promotional display for Hood's products at a trade show or similar event. The setup includes a booth with a counter that prominently features a display of products, possibly including sandwiches or dairy items, as suggested by the white lab coats and hats worn by the individuals behind the counter. The booth signage reads "H. P. Hood & Sons" and "The Open Door to Good Health." To the side, there is signage advertising "Hood's Chocolate Milk" for 5 cents and another sign indicating "Hoodsys" at 10 cents. The booth is equipped with what appears to be a refrigerator, and other products are displayed on the counters. The overall setting suggests a period atmosphere, likely from the mid-20th century.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-18

The image shows the interior of what appears to be a small retail establishment, possibly a pharmacy or health food store. There are two men standing behind a counter, wearing white coats or uniforms, suggesting they are employees or staff. The counter is filled with various products and displays, including a sign that reads "The open door to good health". The overall scene has a vintage or historical feel, likely from an earlier time period.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-18

This is a vintage black and white photograph of what appears to be a Hood's dairy or ice cream shop. The storefront has a sign that reads "THE OPEN DOOR TO GOOD HEALTH" and shows several workers dressed in crisp white uniforms behind a counter. The workers appear to be in good spirits, and the counter displays various products including what looks like ice cream or dairy items. There's a price sign visible showing "5¢" and some other promotional materials. The image has that classic mid-20th century American retail establishment feel to it, with its traditional counter service setup and uniformed staff. The lighting and composition create a nostalgic snapshot of what appears to be a thriving local business from that era.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-07

The image is a black-and-white photograph of a store counter with two men in white coats and a woman in a white dress standing behind it. The counter is cluttered with various items, including a refrigerator, a sign that reads "Hood's Chocolate Milk 5¢", and a sign that reads "10¢".

The man on the left is wearing a white coat and has short dark hair. He is leaning on the counter with his right arm and looking at the camera. The man on the right is also wearing a white coat and has short dark hair. He is standing behind the counter and looking at the camera. The woman is standing to the left of the man on the left and is wearing a long white dress. She has short dark hair and is looking at the camera.

In the background, there are several signs and posters on the wall, including one that reads "This Open Door to Good Health" and another that reads "Hood's". There is also a doorway in the background that leads to another room.

Overall, the image appears to be a vintage photograph of a store or market, possibly from the early 20th century. The men and woman in white coats suggest that they may be pharmacists or store owners, and the signs and posters in the background provide additional context about the store's products and services.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-07

The image is a black-and-white photograph of a store with three men and one woman standing behind the counter. The store appears to be a pharmacy or a health food store, as indicated by the sign above the door that reads "H.P. HOOD & SONS" and "THE OPEN DOOR TO GOOD HEALTH."

The man on the left is wearing a white lab coat and has his hands resting on the counter. The man in the middle is also wearing a white lab coat and is holding a piece of paper in his hand. The man on the right is wearing a white shirt and tie and is standing behind the cash register. The woman is standing behind the counter on the left side of the image, wearing a white dress and apron.

There are various items displayed on the counter, including bottles, jars, and boxes. A sign on the counter reads "HOOD'S CHOCOLATE MILK 5¢" and another sign next to the cash register reads "10¢." The background of the image shows a dark room with several signs and posters on the walls. Overall, the image suggests that the store is a place where customers can purchase healthy food and drinks, and the staff is knowledgeable and helpful.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-07

The image is a black-and-white photograph of a scene inside a store. It features three people standing behind a counter. The counter is well-stocked with various items, including cups, bottles, and other objects. The two men are dressed in white lab coats and ties, suggesting they might be pharmacists or chemists. The woman standing next to them is dressed in a white uniform, possibly a pharmacist's assistant or a store employee. The counter is labeled "HOOD'S," which might be the name of the store or the brand they are selling. Behind the counter, there is a refrigerator and a banner with the text "BLOOD CHOCOLATE MILK 5." There are also some other signs and advertisements on the wall.

Created by amazon.nova-pro-v1:0 on 2025-06-07

The black-and-white photo shows a group of people in front of a counter inside a store. There are two men standing behind the counter, and a woman is standing on the left side. They are all wearing white outfits, and one of the men is wearing a tie. The counter has many items on top, including a box with the number 10 on it. Behind them are a refrigerator and a sign.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-11

Here's a description of the image:

Overall Impression:

The image is a black and white photograph, likely taken in the mid-20th century. It depicts a commercial setup, possibly at a fair or exhibition, promoting "H.P. Hood & Sons" products.

Setting and Composition:

- The scene is indoors, potentially a food stall or booth.

- There are three main individuals visible.

- A counter or display area dominates the scene, showcasing food items and advertising materials.

- Lighting seems to be artificial, with some lights illuminating the counter and products.

People:

- Two men are behind the counter. They wear white coats, suggesting they are workers or employees of "Hood's". One is holding a knife and they seem to be preparing food.

- A woman is behind a smaller counter in the background. She also wears a white coat.

Products and Advertising:

- Signs and displays are prominently featured, promoting Hood's products like "Hood's Chocolate Milk", "Hood's Orange", and some kind of roll.

- Price signs of "5¢" and "10¢" are clearly visible.

- A sign overhead says "H.P. Hood & Sons" with the slogan "THE OPEN DOOR TO GOOD HEALTH".

- Advertisements for "Manchester Federal Savings & Loan Assn" are displayed.

- An old-fashioned cash register is present.

- A refrigerator is present.

Atmosphere:

The photograph evokes a sense of a bygone era. It is probably a scene from a promotional event for the Hood brand. The overall look suggests it was likely taken in the 1930s or 1940s.

Created by gemini-2.0-flash on 2025-05-11

Certainly, let's describe the image you've provided.

General Description:

The image is a black and white photograph depicting an interior scene, likely a trade show booth or commercial display. It features several people and elements suggesting the promotion of a food-related business.

Key Elements and Figures:

People:

- Two men stand behind a counter with the name "HOODS" prominently displayed. Both men are wearing what appear to be white jackets or coats, potentially uniforms. One is smiling broadly at the camera and appears to be in the middle of cutting something on the counter. The other is more reserved.

- A woman, also wearing a white outfit, stands to the left of the counter, near a display of drinks or dairy products.

Counter/Display:

- A large counter has "HOODS" embossed on the front.

- The counter is covered with items, possibly sandwiches or other food products. There is also a cash register visible.

Signage:

- Above the men, a sign reads "H.P. HOOD & SONS" with the slogan "THE OPEN DOOR TO GOOD HEALTH."

- To the left, a sign advertises "HOODS CHOCOLATE MILK" for 5 cents.

Other Details:

- A white refrigerator is in the background, suggesting dairy products or cold storage.

- There is another advertisement for “MANCHESTER FEDERAL SAVINGS LOAN ASSN”

Overall Impression:

The image evokes a sense of mid-20th century marketing and commercial presentation. The focus is clearly on promoting the "HOODS" brand, likely a food or dairy company. The clean white uniforms and bright lighting contribute to a sense of hygiene and trustworthiness that the company likely intended to convey to its potential customers.

Let me know if you would like me to focus on a specific aspect or area of the image!

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-08

The image depicts a vintage photograph of a dairy or food product store, specifically "H.P. Hood & Sons." The store has a sign above the entrance that reads "The Open Door to Good Health." The interior of the store features three employees dressed in white uniforms, which were typical for food service workers in the mid-20th century.

Setting and Environment:

- The store appears to be well-organized with a clean and professional appearance.

- There is a refrigerator or cooler visible in the background, likely used for storing dairy products.

- Various signs and advertisements are displayed, promoting products and prices. For example, there is a sign advertising "Hood's Milk" for 5 cents.

Employees:

- Three employees are visible in the image.

- Two employees are standing behind a counter, possibly preparing or packaging products. One of them is holding a container, and the other is working with some items on the counter.

- The third employee is standing near the refrigerator, possibly attending to the products inside.

Products and Displays:

- The counter is laden with various dairy products, including milk containers and possibly other items like butter or cheese.

- There are promotional materials and price tags visible, indicating the cost of different products.

- A cash register is present on the counter, suggesting that this is the point of sale area.

Signage and Branding:

- The store's name, "H.P. Hood & Sons," is prominently displayed, indicating the brand's identity.

- The slogan "The Open Door to Good Health" emphasizes the health benefits associated with the store's products.

Overall, the image captures a moment in the history of a dairy or food product store, showcasing the employees, products, and the store's branding from a bygone era.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-20

This black-and-white image depicts a vintage milk stand or promotional booth. The name "H.P. Hood & Sons" is prominently displayed at the top of the booth, accompanied by the slogan "The Open Door to Good Health." The booth appears to be set up in a public or semi-public space, possibly at an exhibition or event.

Inside the booth, two individuals are standing behind a counter. The person on the left is wearing a white uniform, which suggests they may be a salesperson or employee. The person on the right is also dressed in white but appears to be cutting a cake or similar food item, possibly for display or as part of a promotional offer.

On the counter, there are various items, including a sign that advertises "Hood's Chocolate Milk" and "Orange" for 5 cents. There are also some stacked items that resemble cardboard boxes or cartons, possibly containing dairy products. A cash register is visible on the right side of the counter.

The overall setting gives the impression of a mid-20th-century promotional event or trade show. The booth is well-organized, with signage and products neatly displayed to attract attention.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-20

This black-and-white photograph depicts a vintage scene, likely from the mid-20th century, featuring a dairy or food product display booth. The booth is branded with the name "H.P. Hood & Sons," and a slogan above the display reads, "The Open Door to Good Health."

In the foreground, two men in lab coats are standing behind a counter. The counter is filled with various dairy products, including butter and milk, with price tags indicating costs such as "5¢" and "10¢." One of the men is holding a knife and appears to be cutting a piece of butter. To the left of the counter, a woman in a lab coat is arranging products on a smaller display table. Behind the booth, there are posters and signs, including one for the "Manchester Federal Savings & Loan Association." The overall setting suggests a promotional event or exhibition, possibly at a fair or market.