Machine Generated Data

Tags

Amazon

created on 2019-11-16

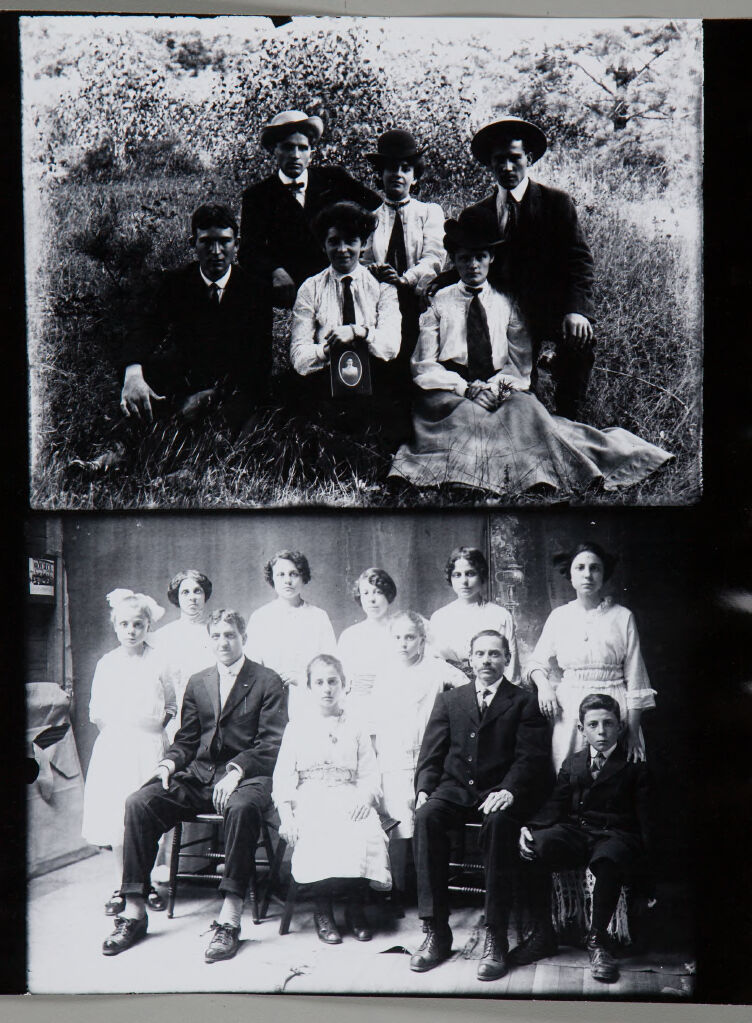

| Tag | Confidence (%) |
|---|---|
| Person | 99.3 |
| Human | 99.3 |
| Person | 99.1 |
| Person | 98.9 |
| Person | 98.8 |
| Person | 98.8 |
| Person | 98.6 |
| Person | 97.9 |
| Person | 97.8 |
| Person | 97.5 |
| Footwear | 97.5 |
| Apparel | 97.5 |
| Clothing | 97.5 |
| Shoe | 97.5 |
| Accessory | 97.3 |
| Accessories | 97.3 |
| Tie | 97.3 |
| Person | 97.1 |
| Person | 97.1 |
| Shoe | 93.9 |
| Person | 93.8 |
| Furniture | 91.2 |
| Advertisement | 91.2 |
| Poster | 91.2 |
| Collage | 91 |
| Person | 89 |
| Person | 84.8 |
| Person | 82.1 |
| Leisure Activities | 78.9 |
| Person | 77.8 |
| Chair | 68.7 |
| People | 63 |
| Suit | 62.1 |
| Overcoat | 62.1 |
| Coat | 62.1 |
| Dress | 62 |
| Portrait | 61.3 |
| Photo | 61.3 |
| Photography | 61.3 |
| Face | 61.3 |
| Musical Instrument | 60.1 |
| Musician | 60.1 |
| Performer | 60 |

Clarifai

created on 2019-11-16

Imagga

created on 2019-11-16

| Tag | Confidence (%) |
|---|---|
| kin | 55.8 |
| man | 23.5 |
| black | 22.9 |
| people | 19.5 |
| person | 18.8 |
| world | 18.5 |
| male | 16.4 |
| silhouette | 15.7 |
| bride | 15.4 |
| portrait | 14.2 |
| vintage | 13.2 |
| couple | 13.1 |
| grunge | 11.9 |
| adult | 11.8 |
| art | 11.3 |
| wedding | 11 |
| family | 10.7 |
| old | 10.4 |
| window | 10.2 |
| child | 10.1 |
| dress | 9.9 |
| love | 9.5 |
| culture | 9.4 |
| happy | 9.4 |
| group | 8.9 |
| office | 8.8 |
| boy | 8.8 |
| symbol | 8.7 |
| antique | 8.6 |
| happiness | 8.6 |
| sport | 8.4 |
| retro | 8.2 |
| team | 8.1 |
| businessman | 7.9 |
| business | 7.9 |
| face | 7.8 |
| bridal | 7.8 |
| marriage | 7.6 |
| wife | 7.6 |
| poster | 7.5 |
| bouquet | 7.5 |
| planner | 7.5 |
| dark | 7.5 |
| one | 7.5 |
| light | 7.3 |
| groom | 7.3 |
| smile | 7.1 |

Google

created on 2019-11-16

| Tag | Confidence (%) |
|---|---|
| Photograph | 96.9 |
| People | 93.1 |
| Snapshot | 85.1 |
| Photography | 72.5 |
| Picture frame | 70.2 |
| Art | 69.5 |
| Photographic paper | 66.3 |
| Room | 65.7 |
| Stock photography | 65.4 |
| Family | 58.3 |
| Black-and-white | 56.4 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 22-34 |
| Gender | Male, 54.1% |
| Fear | 45% |
| Angry | 45% |
| Calm | 54.6% |
| Surprised | 45% |
| Happy | 45% |
| Confused | 45% |
| Sad | 45.2% |
| Disgusted | 45% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 23-35 |
| Gender | Male, 54.5% |
| Fear | 45% |
| Angry | 45.1% |
| Calm | 54.5% |
| Surprised | 45.1% |
| Happy | 45% |
| Confused | 45.1% |
| Sad | 45.1% |
| Disgusted | 45% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 30-46 |
| Gender | Male, 54.9% |
| Calm | 54.9% |
| Angry | 45% |
| Surprised | 45% |
| Disgusted | 45% |
| Happy | 45% |
| Sad | 45% |
| Fear | 45% |
| Confused | 45% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 13-25 |
| Gender | Male, 54.7% |
| Happy | 45% |
| Angry | 45.2% |
| Fear | 45.2% |
| Surprised | 45.1% |
| Calm | 52.5% |
| Sad | 46.5% |
| Disgusted | 45.1% |
| Confused | 45.3% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 32-48 |
| Gender | Male, 54.2% |
| Sad | 45% |
| Fear | 45% |
| Angry | 45% |
| Surprised | 45% |
| Calm | 45.1% |
| Disgusted | 45% |
| Confused | 45% |
| Happy | 54.9% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 24-38 |
| Gender | Male, 51.2% |
| Angry | 46% |
| Surprised | 45.2% |
| Confused | 46.8% |
| Disgusted | 49% |
| Sad | 45.5% |
| Happy | 45.3% |
| Calm | 46.9% |
| Fear | 45.2% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 23-37 |
| Gender | Male, 52.5% |
| Calm | 54.9% |
| Surprised | 45% |
| Happy | 45% |
| Sad | 45% |
| Confused | 45% |
| Angry | 45% |
| Fear | 45% |
| Disgusted | 45% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 30-46 |
| Gender | Female, 53.9% |
| Fear | 45% |
| Sad | 45.3% |
| Happy | 45.8% |
| Disgusted | 45% |
| Calm | 53.7% |
| Confused | 45% |
| Angry | 45.1% |
| Surprised | 45% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 20-32 |
| Gender | Male, 50.1% |
| Confused | 45.1% |
| Angry | 45.4% |
| Surprised | 45% |
| Calm | 54.3% |
| Disgusted | 45% |
| Fear | 45% |
| Sad | 45.1% |
| Happy | 45% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 25-39 |
| Gender | Male, 54.6% |
| Angry | 45.1% |
| Surprised | 45% |
| Sad | 45.1% |
| Happy | 45% |
| Calm | 53.1% |
| Fear | 45% |
| Confused | 46.6% |
| Disgusted | 45% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 21-33 |
| Gender | Female, 52.4% |
| Calm | 53.8% |
| Fear | 45.1% |
| Confused | 45.1% |
| Happy | 45.1% |
| Angry | 45.5% |
| Disgusted | 45.1% |
| Sad | 45.2% |
| Surprised | 45.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 13-25 |
| Gender | Female, 54.7% |
| Fear | 45% |
| Happy | 45% |
| Confused | 45.2% |
| Calm | 54.4% |
| Disgusted | 45% |
| Sad | 45.2% |
| Angry | 45.1% |
| Surprised | 45% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 21-33 |
| Gender | Female, 54.3% |
| Angry | 45.1% |
| Surprised | 45% |
| Sad | 45.2% |
| Happy | 45% |
| Disgusted | 45% |
| Confused | 45% |
| Calm | 54.7% |
| Fear | 45% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 18-30 |
| Gender | Male, 53.9% |
| Angry | 45.2% |
| Sad | 45.2% |
| Confused | 46.6% |
| Disgusted | 45.1% |
| Surprised | 46.9% |
| Happy | 45% |
| Fear | 45.1% |
| Calm | 50.8% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 21-33 |
| Gender | Female, 52.3% |
| Surprised | 45% |
| Fear | 45% |
| Disgusted | 45.1% |
| Calm | 53.1% |
| Angry | 45.3% |
| Confused | 45.3% |
| Happy | 45% |
| Sad | 46.2% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 23-35 |
| Gender | Male, 52.7% |
| Angry | 45.2% |
| Sad | 45% |
| Happy | 45% |
| Disgusted | 45% |
| Calm | 54.7% |
| Confused | 45.1% |
| Surprised | 45.1% |
| Fear | 45% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 5-15 |
| Gender | Female, 54.3% |
| Fear | 45% |
| Happy | 45% |
| Calm | 45.8% |
| Surprised | 45% |
| Disgusted | 45% |
| Angry | 51.8% |
| Sad | 45.6% |
| Confused | 46.7% |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 54.1% |
| people portraits | 45.5% |

Captions

Microsoft

created on 2019-11-16

| Caption | Confidence |
|---|---|
| a vintage photo of a group of people posing for the camera | 98.1% |
| a vintage photo of a group of people posing for a picture | 98% |
| a group of people posing for a photo | 97.9% |