Machine-Generated Data

Face analysis

AWS Rekognition

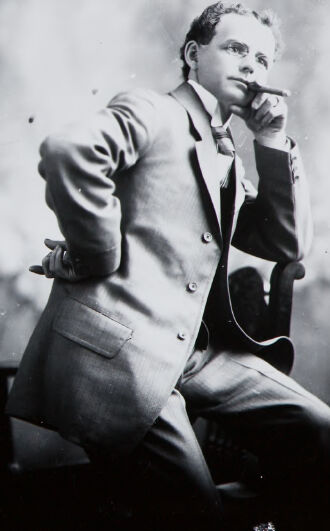

| Attribute | Estimate |
|---|---|
| Age | 17-29 |
| Gender | Male, 99.2% |
| Disgusted | 0.1% |
| Surprised | 0.1% |
| Happy | 0.1% |
| Sad | 1.1% |
| Angry | 1.9% |
| Fear | 0% |
| Calm | 95.9% |
| Confused | 0.7% |

Feature analysis

AWS Rekognition

| Feature | Confidence |
|---|---|
| Person | 99.1% |

Categories

Imagga

Created on 2019-11-16

| Category | Confidence |
|---|---|
| paintings art | 81.2% |
| interior objects | 9% |
| food drinks | 8.4% |

Captions

Microsoft

Created by unknown on 2019-11-16

| Caption | Confidence |
|---|---|
| a man and a woman standing in front of a window | 70.8% |
| a person standing in front of a window | 70.7% |
| a person standing in front of a window | 70.6% |

Clarifai

No captions written

Salesforce

Created by general-english-image-caption-blip on 2025-05-17

a photograph of a man in a suit and tie is posing for a photo

Created by general-english-image-caption-blip-2 on 2025-07-10

two black and white photographs of a man and woman

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-17

The image appears to be a vintage photograph divided into two sections. On the left side, a person is standing next to a chair, holding a tennis racket, dressed in a sweater, skirt, and boots, suggesting an older style of athletic attire. On the right side, a person is seated on a chair, dressed in a formal suit, posing with one arm resting on the back of the chair and the other hand touching their face. The overall aesthetic of the photograph suggests a historical or early 20th-century setting.

Created by gpt-4o-2024-08-06 on 2025-06-17

The image comprises two black and white portraits captured side by side. On the left side, there is an individual wearing a long white skirt and a sweater, holding a tennis racket with one hand while standing next to a chair. The background is a simple dark wall, and the lighting is soft, casting gentle shadows. On the right side of the image, another individual is seated on a chair, dressed in a formal suit with a vest and tie, and is resting an elbow on the arm of the chair, with the hand placed on the face. The background in this section is lighter, with a textured or cloud-like pattern. Both portraits are of a vintage style, likely from the early 20th century.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-18

The image appears to be a black and white photograph containing two separate portraits. On the left side, there is a portrait of a woman standing and holding what appears to be a tennis racket. On the right side, there is a portrait of a man sitting and looking thoughtfully to the side. Both portraits have a vintage, historical feel to them.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-18

This is a vintage black and white photograph that appears to be from the early 20th century. It shows two people in a formal studio setting. One person is seated while the other stands. They are both wearing what appears to be business attire typical of that era: suits or formal wear. The photograph has the characteristic style and composition of early portrait photography, with a plain backdrop and formal poses. The image quality and styling suggest it may have been taken sometime in the 1910s or 1920s. There's an interesting contrast between light and shadow in the composition, which was common in studio photography of that period.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-07

The image presents a striking contrast between two black-and-white photographs, each featuring a distinct individual.

Left Photograph:

- A woman stands confidently, dressed in a long-sleeved dress with a high neckline and a skirt that falls just below her knees.

- Her attire is complemented by a pair of high-heeled boots, adding a touch of elegance to her overall appearance.

- She holds a tennis racket in her right hand, while her left hand rests on the back of a chair, conveying a sense of poise and sophistication.

- The background of the photograph is shrouded in darkness, with a subtle hint of light emanating from the left side, casting a warm glow on the subject.

Right Photograph:

- A man sits in a chair, exuding an air of nonchalance as he leans back, resting his right elbow on the armrest.

- His attire consists of a suit and tie, complete with a pocket square, which adds a touch of refinement to his overall appearance.

- He holds a cigar in his right hand, taking a puff, while his left hand rests on his lap, creating a relaxed and casual atmosphere.

- The background of the photograph is a soft, blurred light, which serves to accentuate the subject's features and create a sense of depth.

Overall Impression:

- The two photographs are presented side by side, creating a sense of contrast and juxtaposition between the two individuals.

- The woman's formal attire and poised demeanor are juxtaposed with the man's more relaxed and casual appearance, highlighting the different personalities and styles of the two subjects.

- The use of black and white photography adds a timeless quality to the images, evoking a sense of nostalgia and classic elegance.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-07

The image is a black-and-white photograph of two separate portraits, one of a woman and the other of a man. The woman is positioned on the left side of the image, while the man is on the right.

Woman's Portrait:

- The woman stands in front of a dark background, with her body facing slightly to the right.

- She wears a long coat over a dress, paired with boots.

- Her short hair is styled neatly.

- In her right hand, she holds a tennis racket.

- A chair is visible behind her.

Man's Portrait:

- The man sits on a chair, facing the camera with his body turned slightly to the left.

- He is dressed in a suit, complete with a tie and a pocket watch chain hanging from his waistcoat pocket.

- His right elbow rests on the back of the chair, while his left hand supports his chin.

- The background of his portrait features a light-colored wall or backdrop.

Both portraits appear to be from the early 20th century, likely taken in a studio setting.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-07

The image shows two black-and-white photos of a man and a woman, respectively. The woman is standing and holding a tennis racket in her right hand. She is wearing a coat, a skirt, and boots. The man is sitting on a chair, wearing a suit and tie, and smoking a cigar. He is holding a cigarette in his right hand and a cigar in his left hand. Both photos have a blurry effect, and the background is dark.

Created by amazon.nova-pro-v1:0 on 2025-06-07

The image is a composite of two black-and-white photographs. On the left, a woman stands in a dark room with a light source behind her. She is dressed in a long skirt and a jacket, holding a tennis racket in her right hand. Her left hand rests on her hip, and she appears to be looking off to the side. On the right, a man is seated on a chair, dressed in a suit and tie. He holds a cigarette in his right hand, resting his elbow on his knee, and appears to be looking directly at the camera.

Google Gemini

Created by gemini-2.0-flash on 2025-05-11

Here's a description of the image:

The image shows two separate black and white portraits on a single frame, likely from a photographic plate.

On the left: A woman stands next to a chair, holding a tennis racket. She wears a light-colored jacket with fur trim, a white knee-length skirt, and boots. Her hair is in a short style. The background is dark, with what appears to be studio lighting above her.

On the right: A man sits on a chair, leaning forward with one arm resting on his knee and the other hand at his chin, holding a cigar. He wears a suit and tie. The background is a lighter, neutral tone.

Created by gemini-2.0-flash-lite on 2025-05-11

Here's a description of the image:

This is a black and white photo depicting two portraits. The left side shows a woman in what appears to be a casual dress, holding a tennis racket in her right hand. She is wearing a knitted coat with a decorative collar, a skirt, and tall boots. She is standing next to a small chair, likely used for resting. The background is dark, possibly a studio setting.

The right side features a man sitting in a chair, holding a cigar. He is wearing a suit and tie, with a thoughtful expression on his face. The background appears to be blurred, possibly a backdrop or an out-of-focus scene.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-08

The image is a vintage black-and-white photograph featuring two separate portraits side by side.

Left Portrait:

- The subject is a woman standing in a studio setting.

- She is dressed in a long, light-colored dress with a high neckline and long sleeves, typical of early 20th-century fashion.

- She is holding a tennis racket in her right hand and is positioned next to a stool.

- The background is plain and dark, with a bright light illuminating her from the front, creating a stark contrast.

- Her hair is styled in a short bob, and she is wearing earrings.

Right Portrait:

- The subject is a man seated on a chair, posing in a relaxed manner.

- He is wearing a light-colored suit with a white shirt and a dark tie.

- He has one arm resting on the back of the chair and the other arm resting on his knee.

- He is holding a cigarette in his right hand and appears to be looking off to the side.

- The background is a painted backdrop, giving the impression of an outdoor scene with clouds and foliage.

- His hair is neatly combed, and he has a clean-shaven face.

The overall style and clothing suggest that the photograph was taken in the early to mid-20th century. The poses and attire reflect the formal portrait photography of that era.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-20

The image is a black-and-white photograph divided into two sections, likely taken in a studio setting. On the left side, there is a woman standing in profile, holding a tennis racket. She is dressed in a long-sleeved dress with a fur collar, and her posture suggests she is posing for the photograph. On the right side, there is a man sitting in a relaxed pose, smoking a pipe. He is wearing a suit and has a confident, somewhat playful expression. The background in both images is plain and dark, which helps to focus attention on the subjects. The style of clothing and the photographic technique suggest that the image is from the early to mid-20th century.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-20

This black-and-white image appears to be a vintage photograph, likely from the early 20th century. It is divided into two sections:

Left Side: A woman is standing next to a chair, holding an old-fashioned tennis racket. She is dressed in a long-sleeved dress with a fur collar and cuffs, and she is wearing boots. Her hairstyle is short, typical of the era. The setting appears to be indoors, possibly in a studio, with a plain backdrop.

Right Side: A man is seated in a chair, dressed in a formal suit with a tie. He has his left hand on his chin and is looking directly at the camera. His right hand is resting on the arm of the chair. The background is a soft, blurred gradient, giving the image a classic studio portrait feel.

The overall composition and attire suggest that this photograph might have been taken for a formal portrait or a social occasion. The juxtaposition of the woman with a tennis racket and the man in a formal pose creates an interesting contrast.