Machine Generated Data

Tags

Color Analysis

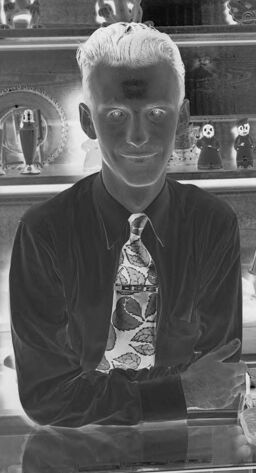

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 39-47 |
| Gender | Male, 100% |
| Sad | 39.7% |
| Calm | 20.2% |
| Surprised | 11% |
| Confused | 8.5% |
| Angry | 7.2% |
| Happy | 6.4% |
| Disgusted | 5.1% |
| Fear | 1.9% |

Feature analysis

Amazon

AWS Rekognition

| Feature | Confidence |
| --- | --- |
| Person | 99.6% |

Categories

Imagga

Created on 2022-01-22

| Category | Confidence |
| --- | --- |
| text visuals | 44% |
| paintings art | 40.8% |
| streetview architecture | 11.3% |
| beaches seaside | 3.2% |

Captions

Microsoft

Created by unknown on 2022-01-22

| Caption | Confidence |
| --- | --- |
| a group of people standing in front of a store | 78.5% |
| a group of people sitting in front of a store | 67.7% |
| a group of men standing in front of a store | 67.6% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-13

person, a chemist, stands in front of a display of crystals.

Salesforce

Created by general-english-image-caption-blip on 2025-05-18

a photograph of a man and woman standing in front of a counter

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-17

The image shows two individuals standing behind a glass display case in what appears to be a shop. The display case contains various small items, possibly jewelry or trinkets. Behind them, wooden shelves are stocked with decorative and collectible items, including figurines, mugs, and other crafts. There is a lamp positioned on the counter between the individuals, suggesting the display is well-lit. The setting seems to reflect a vintage or artisanal atmosphere.

Created by gpt-4o-2024-08-06 on 2025-06-17

The image appears to be a black and white negative of a photo depicting two people standing behind a glass counter or showcase. The showcase seems to contain various items that look like jewelry or small personalized objects. Behind them, there are shelves lined with decorative items including figurines, pottery, and possibly other curios. The man is dressed in a dark shirt with a patterned tie, and the woman is wearing a light-colored blouse. There is an overhead light fixture between them and various items can be seen both in front of and behind the glass counter.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-21

The image shows the interior of a jewelry store. Two people, a man and a woman, are standing behind the counter, surrounded by various jewelry and decorative items displayed on the shelves. The man is wearing a suit and tie, while the woman is wearing a white blouse. The store appears to be well-stocked with a variety of items, including figurines, mugs, and other decorative objects. The overall atmosphere of the image suggests a traditional jewelry store setting.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-21

This appears to be a vintage black and white photograph taken in what looks like a jewelry store or gift shop. The image shows two people standing behind a glass display counter. The photograph appears to be a negative or inverted image, giving it an unusual appearance where dark areas appear light and vice versa. Behind them on shelves are various decorative items including what appear to be figurines and cups. The person on the left is wearing a dark suit with a patterned tie, while the person on the right is wearing what appears to be a light-colored uniform or jacket. The display case in front of them appears to contain jewelry or other small items arranged in rows. The overall setting suggests this is a retail environment from perhaps the 1940s or 1950s.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-10

The image is a black-and-white photograph of a man and woman standing behind a counter in a jewelry store. The man, with short, light-colored hair, is wearing a dark shirt and a tie with a pattern. The woman, with curly, light-colored hair, is wearing a light-colored blouse. They are both looking at the camera and smiling.

Behind them is a large display case filled with various items, including jewelry, figurines, and other trinkets. The case has several shelves and drawers, and there are also some decorative items on the walls above the case.

The overall atmosphere of the image suggests that it was taken in the mid-20th century, possibly in the 1940s or 1950s. The style of the clothing and the decor of the store are consistent with this time period.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-10

This image is a black-and-white photograph of a man and woman standing behind a glass counter in a jewelry store. The man, positioned on the left, has short white hair and wears a dark shirt with a tie featuring a floral pattern. He is leaning on the counter with his arms crossed. The woman, on the right, has long white hair and is dressed in a white blouse. She also leans on the counter with her arms crossed.

The counter is filled with various jewelry pieces, including rings, earrings, and other accessories. Behind the counter, there are shelves displaying an assortment of items, such as figurines, vases, and other decorative objects. The overall atmosphere of the image suggests a professional setting, likely taken for promotional or advertising purposes.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-08

The image shows a black-and-white photograph of two people standing in front of a glass display case. The man is wearing a tie and has his arms crossed, while the woman is wearing a white blouse and has her hands on the glass. The display case contains various items, including jewelry, figurines, and other decorative objects. The image has a blurry effect, giving it a vintage or nostalgic feel.

Created by amazon.nova-pro-v1:0 on 2025-06-08

The monochromatic image shows two people standing in front of a glass display case with various items inside. The man is wearing a long-sleeve shirt with a tie, while the woman is wearing a blouse. Both of them are smiling and posing for the camera. Behind them are shelves with various items, including cups, plates, and other objects. The image has a vintage filter, giving it an old-fashioned look.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-16

Here's a description of the image:

Overall Impression:

The image is a black and white negative of a photograph depicting two people behind a jewelry counter. The scene appears to be from a vintage shop, likely the mid-20th century based on the style.

People and Position:

- Man: A man is standing on the left side of the counter. He is wearing a dark shirt and tie with a leaf pattern, and his hair is slicked back. He is smiling at the camera.

- Woman: A woman is standing on the right side of the counter. She is wearing a button-up blouse and her hair is styled in waves. She is also looking at the camera.

- Both are posed behind the jewelry counter.

Setting:

- Jewelry Counter: The counter has glass display cases filled with jewelry. The cases are likely made of glass or a similar transparent material.

- Shelves: Behind the counter, there are shelves with various items on display.

- Items on Shelves: The shelves hold various decorative pieces, including ceramic figurines (rabbits, people figures), teacups, plates, and ornaments.

- Lighting: There is a large, vintage-style lamp between the two people.

Overall Mood:

The photograph has a vintage and slightly formal feel. The people are posed and looking at the camera, which hints at a planned or posed shot rather than a candid one.

Created by gemini-2.0-flash on 2025-05-16

The black and white negative shows a man and woman standing in a store with display cases of jewelry and a shelf behind them filled with various objects. The man is on the left, wearing a dark collared shirt and a tie with a light-colored pattern. He has short hair and is smiling slightly with his arms crossed. To his right, a large lamp sits on the counter. The woman is to the right of the lamp, wearing a light-colored button-down shirt and has wavy hair. Her hands are resting on the counter.

The display cases in front of them are filled with jewelry, including necklaces, rings, and brooches, arranged in velvet-lined boxes. Behind them, the shelf contains a variety of items such as figurines, vases, and cups. A sign that reads "JEWELRY" is mounted above the shelf. The overall impression is of a family-owned jewelry store, likely from the mid-20th century.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-03

The image is a black-and-white photograph that appears to be taken inside a store. The store has a display case in the foreground, which is filled with various items, possibly small toys or trinkets.

Behind the display case, there are two individuals. The person on the left is a man wearing a dark suit and tie. He has his arms crossed and is standing in front of a shelf that contains an assortment of figurines and decorative items. The items on the shelf include animals, clocks, and other small ornaments.

The person on the right is a woman wearing a light-colored dress or uniform. She is standing behind the display case and appears to be handling or arranging some items on the counter. The background features shelves filled with more decorative items and figurines, suggesting that the store specializes in such goods.

The overall atmosphere of the image suggests a vintage or retro setting, possibly from the mid-20th century, given the style of clothing and the types of items on display.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-14

This is a black-and-white photograph featuring two individuals standing behind a counter, likely in a jewelry store. The setting is a display case with shelves filled with various jewelry items, including necklaces, bracelets, and watches. The top shelf holds decorative pieces, possibly figurines or ornaments. The counter has a lamp and some small items, possibly sales materials or additional jewelry pieces. The background shows more shelves with more jewelry and what appear to be gift boxes. The overall atmosphere suggests a vintage or retro aesthetic, possibly mid-20th century. The lighting is bright, highlighting the individuals and the display case.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-14

This black-and-white image appears to show two people standing behind a jewelry store counter. The man on the left is wearing a dark shirt with a patterned tie, while the woman on the right is dressed in a light-colored blouse. Behind them, there are shelves displaying various items, including jewelry, small figurines, and decorative objects. The counter in front of them has more jewelry items on display. The reflection of a lamp is visible in the glass of the counter, and there is a sign above that reads "JEWELRY." The image has a vintage feel, suggesting it might be from an earlier time period.