Machine Generated Data

Tags

Amazon

created on 2022-01-23

| Tag | Confidence |
| --- | --- |
| Person | 99.5 |
| Human | 99.5 |
| Clothing | 99.4 |
| Apparel | 99.4 |
| Person | 98.9 |
| Person | 98.8 |
| Person | 97.7 |
| Person | 97.5 |
| Face | 91.5 |
| Suit | 89.2 |
| Overcoat | 89.2 |
| Coat | 89.2 |
| People | 87.2 |
| Room | 76.3 |
| Indoors | 76.3 |
| Chair | 76.2 |
| Furniture | 76.2 |
| Portrait | 69.6 |
| Photography | 69.6 |
| Photo | 69.6 |
| Female | 62.5 |
| Shirt | 59 |
| Text | 58.6 |
| Tuxedo | 57.9 |
| Man | 57.2 |
| Senior Citizen | 57 |
| Steamer | 55.4 |

Clarifai

created on 2023-10-26

Imagga

created on 2022-01-23

Google

created on 2022-01-23

| Tag | Confidence |
| --- | --- |
| Photograph | 94.2 |
| Black | 89.8 |
| Style | 84 |
| Black-and-white | 82.9 |
| Monochrome photography | 77.2 |
| Suit | 76.5 |
| Monochrome | 75.9 |
| Snapshot | 74.3 |
| Vintage clothing | 73 |
| Pattern | 72.3 |
| Event | 70.8 |
| Art | 69.5 |
| Room | 67.6 |
| Stock photography | 66.3 |
| Classic | 63 |
| Visual arts | 62.2 |
| History | 56.6 |
| Photo caption | 56.3 |
| Rectangle | 55.5 |
| Fashion design | 52.7 |

Microsoft

created on 2022-01-23

| Tag | Confidence |
| --- | --- |
| person | 99.6 |
| clothing | 94.8 |
| man | 92.5 |
| woman | 88.6 |
| text | 86.9 |
| wedding dress | 79.3 |
| human face | 73.9 |
| bride | 62.6 |
| old | 48.5 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 48-54 |
| Gender | Male, 97.8% |
| Calm | 65.2% |
| Sad | 12.6% |
| Happy | 5.8% |
| Confused | 5.7% |
| Disgusted | 3.2% |
| Surprised | 3.1% |
| Fear | 2.7% |
| Angry | 1.6% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 54-62 |
| Gender | Male, 99% |
| Calm | 93.2% |
| Sad | 3.4% |
| Happy | 1.1% |
| Confused | 0.9% |
| Surprised | 0.8% |
| Disgusted | 0.2% |
| Angry | 0.2% |
| Fear | 0.1% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 51-59 |
| Gender | Male, 99.2% |
| Calm | 64.8% |
| Sad | 21% |
| Surprised | 11.4% |
| Confused | 1.2% |
| Happy | 0.6% |
| Angry | 0.6% |
| Fear | 0.2% |
| Disgusted | 0.2% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 41-49 |
| Gender | Male, 92% |
| Calm | 98.5% |
| Sad | 1.3% |
| Surprised | 0.1% |
| Angry | 0% |
| Confused | 0% |
| Happy | 0% |
| Disgusted | 0% |
| Fear | 0% |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Unlikely |
| Headwear | Possible |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| people portraits | 67.1% |
| paintings art | 28.7% |
| interior objects | 1.3% |

Captions

Microsoft

created by unknown on 2022-01-23

| Caption | Confidence |
| --- | --- |
| a group of people standing in front of a mirror posing for the camera | 85.4% |
| a group of people standing in front of a mirror | 85.3% |
| a group of people in front of a mirror posing for the camera | 84% |

Clarifai

created by general-english-image-caption-blip on 2025-05-18

| Caption | Confidence |
| --- | --- |
| a photograph of a group of people standing around a table | -100% |

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-18

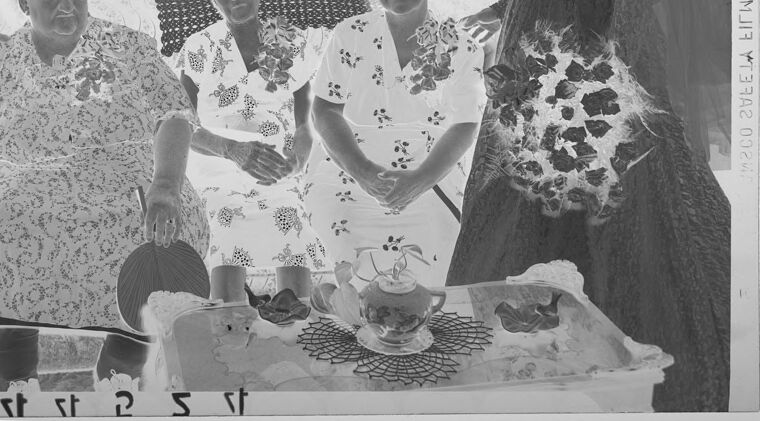

Here's a description of the image:

Overall Impression:

The image is a black and white, negative-style photograph, likely a historical shot. It depicts a formal gathering, possibly a wedding or some other celebratory event. The composition is staged, with figures arranged in a domestic setting, suggesting an indoor location.

Key Elements:

- Wedding Couple: The bride stands prominently on the right side. She's in a detailed gown with a veil and holding a large bouquet. The groom, dressed in a dark suit, looks toward the seated individuals.

- Seated Group: The figures on the left and center are likely close family, dressed in floral patterned dresses, seated on a couch. One of them holds a fan.

- Table Setting: In the foreground, there is a table with a tea set, indicating a celebratory meal or reception aspect.

- Setting: The scene is set in a well-lit room with lace curtains. A decorative object hangs on the wall.

Style & Tone:

The negative style gives a unique look to the image. The photograph evokes a sense of nostalgia and formality, typical of older portraiture. The details in the dresses and the overall arrangement suggest it was a carefully considered photograph.

Created by gemini-2.0-flash on 2025-05-18

This black-and-white photographic negative shows a wedding scene, with a groom in a suit standing to the left, looking down at three women seated behind a table with tea service. To the right, a bride in a gown and veil holds a bouquet.

The groom is dressed in a dark suit, with a light-colored shirt and tie. His hair is light and neatly styled. He has a profile view.

The three women sitting behind the table appear to be older relatives or guests. They are seated on a couch with a textured pattern. Each is wearing a dress with floral designs. The woman on the left holds a fan.

The table in front of the women is set with a tea service, including a teapot and cups. There is a doily beneath the tea service.

The bride, standing to the right, is wearing a dark gown with floral accents and a veil that extends behind her. She carries a bouquet, partially obscuring her dress.

Behind the group, draped curtains frame a window or doorway. A decorative wall hanging is visible above the couch.

Text analysis

Amazon