Machine Generated Data

Tags

Amazon

created on 2019-11-16

Clarifai

created on 2019-11-16

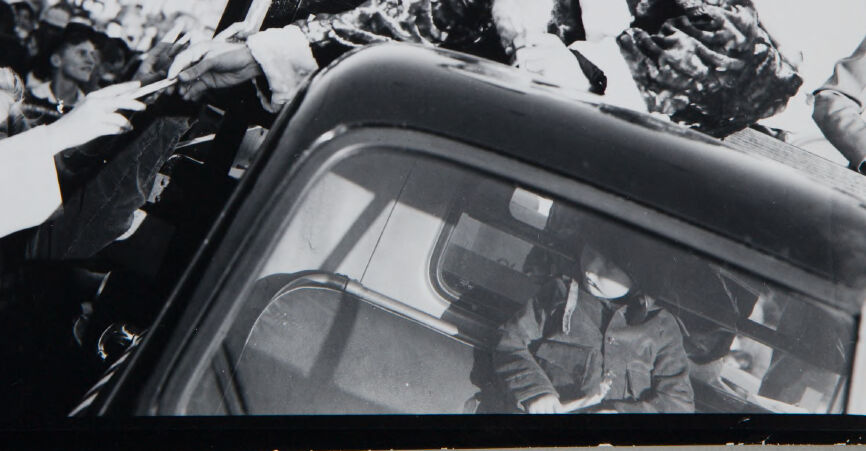

| Tag | Confidence |
| --- | --- |
| car | 99.9 |
| vehicle | 99.9 |
| transportation system | 99.3 |
| people | 98.4 |
| windshield | 97.1 |
| street | 96.5 |
| traffic | 94.6 |
| drive | 94.6 |
| accident | 93.7 |
| travel | 93.1 |
| driver | 93 |
| automotive | 92.7 |
| police | 92.1 |
| man | 91.3 |
| monochrome | 90.9 |
| road | 90.8 |
| one | 90.3 |
| vehicle window | 90 |
| convertible | 87.7 |
| fast | 86.4 |

Imagga

created on 2019-11-16

| Tag | Confidence |
| --- | --- |
| car | 63.3 |
| transportation | 44.8 |
| vehicle | 41.9 |
| automobile | 36.4 |
| drive | 34.3 |
| auto | 32.5 |
| transport | 30.1 |
| cockpit | 29.1 |
| speed | 26.6 |
| road | 25.3 |
| driving | 25.1 |
| travel | 23.9 |
| driver | 22.3 |
| traffic | 19.9 |
| wheel | 18.9 |
| mirror | 17.8 |
| windshield | 17.4 |
| billboard | 15.8 |
| fast | 15 |
| business | 14.6 |
| new | 14.6 |
| engine | 14.4 |
| device | 14 |
| highway | 13.5 |
| sitting | 12.9 |
| motion | 12.8 |
| signboard | 12.8 |
| person | 12.7 |
| side | 12.2 |
| inside | 12 |
| people | 11.7 |
| screen | 11.7 |
| reflection | 11.6 |
| hand | 11.4 |
| headlight | 11.3 |
| electronic equipment | 11.2 |
| happy | 10.7 |
| monitor | 10.5 |
| urban | 10.5 |
| window | 10.4 |
| portrait | 10.4 |
| adult | 10.3 |
| seat | 10.3 |
| safety | 10.1 |
| equipment | 10 |
| technology | 9.6 |
| structure | 9.4 |
| movement | 9.4 |
| man | 9.4 |
| power | 9.2 |
| close | 9.1 |
| modern | 9.1 |
| racing | 8.8 |
| motor | 8.7 |
| light | 8.7 |
| journey | 8.5 |
| communication | 8.4 |
| car mirror | 8.3 |
| street | 8.3 |
| detail | 8 |
| smile | 7.8 |
| happiness | 7.8 |
| cars | 7.8 |
| protective covering | 7.7 |
| outdoors | 7.5 |
| smiling | 7.2 |
| black | 7.2 |
| sky | 7 |

Google

created on 2019-11-16

| Tag | Confidence |
| --- | --- |
| Motor vehicle | 97.4 |
| Photograph | 95.1 |
| Snapshot | 82 |
| Vehicle | 81.6 |
| Car | 69.9 |
| Black-and-white | 68.3 |
| Stock photography | 65.4 |
| Photography | 62.4 |

Microsoft

created on 2019-11-16

| Tag | Confidence |
| --- | --- |
| vehicle | 95.2 |
| car | 94.5 |
| land vehicle | 94.4 |
| text | 92.7 |
| black and white | 83.7 |
| street | 83.5 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-42 |
| Gender | Male, 83.5% |
| Surprised | 1% |
| Confused | 2.3% |
| Sad | 50.9% |
| Disgusted | 0.9% |
| Angry | 37.3% |
| Calm | 2.2% |
| Fear | 0.6% |
| Happy | 4.9% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 13-25 |
| Gender | Male, 54.1% |
| Happy | 45.2% |
| Disgusted | 45.1% |
| Confused | 45.2% |
| Calm | 49.3% |
| Fear | 45.5% |
| Sad | 47.8% |
| Angry | 46.7% |
| Surprised | 45.3% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 31-47 |
| Gender | Female, 50.3% |
| Confused | 49.5% |
| Happy | 49.8% |
| Disgusted | 49.5% |
| Calm | 49.6% |
| Angry | 49.5% |
| Sad | 49.7% |
| Surprised | 49.7% |
| Fear | 49.6% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 20-32 |
| Gender | Male, 94.9% |
| Angry | 2.1% |
| Sad | 11.2% |
| Calm | 83.2% |
| Disgusted | 0.1% |
| Confused | 2.8% |
| Surprised | 0.4% |
| Fear | 0.1% |
| Happy | 0.1% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 22-34 |
| Gender | Female, 51% |
| Happy | 45% |
| Angry | 45% |
| Disgusted | 45% |
| Sad | 50.1% |
| Surprised | 45% |
| Calm | 45.2% |
| Fear | 49.5% |
| Confused | 45% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 18-30 |
| Gender | Female, 53.9% |
| Calm | 45% |
| Fear | 45.2% |
| Sad | 45% |
| Happy | 45.4% |
| Disgusted | 53.3% |
| Surprised | 45.1% |
| Confused | 45.1% |
| Angry | 45.8% |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 88% |
| cars vehicles | 6.5% |
| food drinks | 4.7% |

Captions

Microsoft

created on 2019-11-16

| Caption | Confidence |
| --- | --- |
| a group of people in a car | 75.1% |
| a group of people sitting around a car | 64.2% |
| a group of people sitting in a car | 62.4% |

Text analysis

Amazon

1D