Machine Generated Data

Tags

Amazon

created on 2022-01-22

Clarifai

created on 2023-10-26

Imagga

created on 2022-01-22

Google

created on 2022-01-22

| Table | 95.9 |

| Furniture | 93.9 |

| Black | 89.6 |

| Chair | 84.3 |

| Style | 83.9 |

| Sharing | 81.5 |

| Desk | 79.8 |

| Adaptation | 79.2 |

| Curtain | 75 |

| Snapshot | 74.3 |

| Room | 74.2 |

| Vintage clothing | 73.7 |

| Art | 71.5 |

| Event | 71.2 |

| Writing desk | 68.6 |

| Font | 68.3 |

| Monochrome | 67.4 |

| Stock photography | 65.3 |

| Rectangle | 63.3 |

| Illustration | 62.9 |

Color Analysis

Face analysis

Amazon

Microsoft

AWS Rekognition

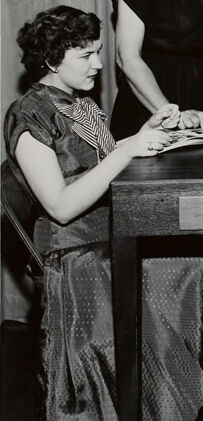

| Age | 21-29 |

| Gender | Female, 98.7% |

| Confused | 30.6% |

| Angry | 18.8% |

| Surprised | 18.6% |

| Disgusted | 12.1% |

| Sad | 9.4% |

| Calm | 6.8% |

| Fear | 2.7% |

| Happy | 1% |

AWS Rekognition

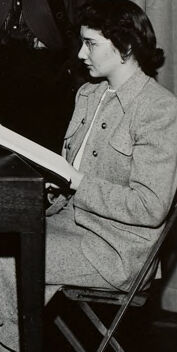

| Age | 22-30 |

| Gender | Female, 99.8% |

| Calm | 90.9% |

| Sad | 7.8% |

| Angry | 0.3% |

| Disgusted | 0.3% |

| Confused | 0.2% |

| Fear | 0.2% |

| Surprised | 0.1% |

| Happy | 0.1% |

AWS Rekognition

| Age | 22-30 |

| Gender | Male, 99.1% |

| Happy | 89.8% |

| Confused | 3.9% |

| Surprised | 1.6% |

| Fear | 1.3% |

| Sad | 1.3% |

| Disgusted | 0.8% |

| Angry | 0.8% |

| Calm | 0.5% |

AWS Rekognition

| Age | 24-34 |

| Gender | Female, 99.5% |

| Sad | 39.2% |

| Confused | 35.1% |

| Angry | 8.3% |

| Surprised | 4.9% |

| Happy | 4.8% |

| Disgusted | 3.2% |

| Calm | 3% |

| Fear | 1.3% |

AWS Rekognition

| Age | 24-34 |

| Gender | Female, 100% |

| Happy | 98.7% |

| Surprised | 0.5% |

| Fear | 0.2% |

| Confused | 0.1% |

| Angry | 0.1% |

| Sad | 0.1% |

| Disgusted | 0.1% |

| Calm | 0.1% |

AWS Rekognition

| Age | 23-31 |

| Gender | Female, 99.9% |

| Calm | 99.8% |

| Sad | 0.1% |

| Happy | 0% |

| Fear | 0% |

| Angry | 0% |

| Surprised | 0% |

| Disgusted | 0% |

| Confused | 0% |

AWS Rekognition

| Age | 24-34 |

| Gender | Female, 99.6% |

| Happy | 70.5% |

| Calm | 21.1% |

| Surprised | 2.2% |

| Sad | 2.1% |

| Confused | 1.9% |

| Angry | 0.9% |

| Disgusted | 0.6% |

| Fear | 0.5% |

AWS Rekognition

| Age | 20-28 |

| Gender | Female, 99.8% |

| Happy | 69.9% |

| Calm | 9% |

| Surprised | 7.7% |

| Confused | 3.6% |

| Fear | 3.6% |

| Angry | 2.9% |

| Sad | 2.1% |

| Disgusted | 1.2% |

AWS Rekognition

| Age | 56-64 |

| Gender | Female, 99.9% |

| Calm | 42.8% |

| Happy | 19.3% |

| Confused | 9.1% |

| Sad | 8.9% |

| Disgusted | 6.3% |

| Angry | 5.9% |

| Surprised | 5% |

| Fear | 2.7% |

Microsoft Cognitive Services

| Age | 50 |

| Gender | Female |

Microsoft Cognitive Services

| Age | 39 |

| Gender | Female |

Microsoft Cognitive Services

| Age | 38 |

| Gender | Female |

Microsoft Cognitive Services

| Age | 27 |

| Gender | Female |

Microsoft Cognitive Services

| Age | 26 |

| Gender | Female |

Microsoft Cognitive Services

| Age | 37 |

| Gender | Female |

Microsoft Cognitive Services

| Age | 27 |

| Gender | Female |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very likely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very likely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Unlikely |

| Headwear | Unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Unlikely |

| Blurred | Very unlikely |

Feature analysis

Amazon

Clarifai

AWS Rekognition

| Person | 99.7% |

| Person | 99.3% |

| Person | 99.2% |

| Person | 98.9% |

| Person | 98.6% |

| Person | 98.1% |

| Person | 98% |

| Person | 90.8% |

| Person | 69.1% |

| Chair | 97.1% |

Clarifai

| Table | 91.7% |

| Table | 36.9% |

| Clothing | 83.3% |

| Clothing | 81% |

| Clothing | 78% |

| Clothing | 66.1% |

| Clothing | 61% |

| Clothing | 43.9% |

| Clothing | 43.1% |

| Clothing | 42.7% |

| Woman | 82.7% |

| Woman | 75.3% |

| Woman | 72.7% |

| Woman | 71.5% |

| Woman | 70.9% |

| Woman | 69.2% |

| Woman | 63.9% |

| Woman | 55.9% |

| Woman | 49.1% |

| Woman | 44.1% |

| Woman | 41.5% |

| Woman | 37.6% |

| Human face | 79.4% |

| Human face | 77.6% |

| Human face | 73.1% |

| Human face | 72.9% |

| Human face | 71.8% |

| Human face | 68.4% |

| Human face | 65.8% |

| Human face | 64.9% |

| Human face | 62.7% |

| Footwear | 77.3% |

| Footwear | 77.1% |

| Footwear | 73.4% |

| Footwear | 67.5% |

| Footwear | 63.8% |

| Footwear | 56% |

| Footwear | 53.2% |

| Footwear | 49.2% |

| Footwear | 40.4% |

| Footwear | 36.3% |

| Human arm | 41% |

| Human arm | 39.2% |

| Human arm | 34.2% |

| Human arm | 33.2% |

| Human hair | 39.7% |

| Human hair | 35.7% |

| Human hair | 35.1% |

| Human hair | 33.2% |

| Human leg | 35.7% |

| Human leg | 34.2% |

Categories

Imagga

created on 2022-01-22

| people portraits | 96.6% |

| events parties | 1.4% |

Captions

Microsoft

created by unknown on 2022-01-22

| a group of people sitting at a table | 98.3% |

| a group of people sitting at a table posing for a photo | 98.2% |

| a group of people sitting at a table posing for the camera | 98.1% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-14

a group of women in a living room.

Salesforce

Created by general-english-image-caption-blip on 2025-05-23

a photograph of a group of women sitting around a table

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-17

This is a black-and-white photograph depicting a group of women gathered around a wooden table. Some are seated while others are standing. On the table, there are open books and a typewriter, suggesting a setting involving reading, writing, or a collaborative task. The women are wearing formal or semi-formal clothing appropriate for the mid-20th century and are engaged in their activity. A curtain serves as the backdrop.

Created by gpt-4o-2024-08-06 on 2025-06-17

The image features a group of nine women gathered around a wooden table, with some seated and others standing. There are various documents or papers spread across the table that the women are interacting with. A typewriter is also prominently placed on the table's surface. The women are dressed in mid-20th-century attire, including dresses and skirts, and the photograph appears to be in black and white. The setting suggests a formal or professional gathering or meeting environment.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-16

The image shows a group of women gathered around a table, likely in an office or workplace setting. They appear to be working together, with some of them writing or reading from books or documents on the table. The women are dressed in formal attire typical of the time period, with some wearing glasses and others having their hair styled in curls or waves. The image has a vintage, black-and-white aesthetic, suggesting it was taken in the past.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-16

This is a black and white photograph that appears to be from the 1950s, showing a group of women in a professional or academic setting. They are gathered around a large desk or table, with some seated and others standing behind them. The women are all wearing typical 1950s attire - dresses, skirts and blouses with conservative styling. Several are wearing glasses, and they appear to be looking at documents or materials spread out on the table. There's what looks like a typewriter or office machine visible on the desk. The fashion and styling, including the women's short, curled hairstyles, strongly suggests this is from the mid-20th century. The setting appears institutional, with a plain curtain or backdrop behind them.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-31

The image is a black-and-white photograph of a group of women gathered around a table, with one woman standing behind them. The women are dressed in formal attire, with some wearing dresses and others wearing skirts and blouses. They appear to be engaged in a discussion or meeting, with some holding papers or books.

In the center of the table, there is a small box or machine that appears to be the focal point of their attention. The background of the image is a plain wall or curtain, which suggests that the photo was taken in a studio or office setting.

Overall, the image conveys a sense of professionalism and seriousness, as the women are all dressed formally and appear to be focused on their discussion. The presence of the box or machine on the table adds an element of mystery, as it is unclear what its purpose is or what the women are discussing.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-31

The image depicts a group of nine women gathered around a large wooden desk, with some seated and others standing. The women are dressed in various attire, including dresses, skirts, and blouses, with some wearing heels or flats. The desk features two drawers and is accompanied by a typewriter and a stack of papers. The background consists of a light-colored curtain, suggesting a professional or educational setting. The overall atmosphere appears to be one of collaboration and productivity, with the women engaged in a shared activity or discussion.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-05-30

A black-and-white photo of eight women sitting on chairs around a table, probably inside a room. They are all wearing dresses, and some of them are wearing eyeglasses. There are books on the table, and one of them is holding a book. There is a box on the table. Behind them is a white curtain. The photo has a white border.

Created by amazon.nova-lite-v1:0 on 2025-05-30

The image is a black-and-white photograph of a group of women, possibly students, sitting around a wooden desk. They are dressed in vintage clothing, with some wearing glasses, suggesting an academic or professional setting. The women are engaged in reading various books and papers, indicating a study session or a meeting. The desk is equipped with several books and a typewriter, hinting at a research or writing-related activity. Behind the group, there is a curtain, possibly indicating that the photo was taken in a classroom or a similar environment.

Google Gemini

Created by gemini-2.0-flash on 2025-05-17

Here is a description of the image:

This is a black and white photograph of a group of eight women gathered around a large table. The women appear to be of various ages, dressed in modest, mid-century attire, suggesting the photo was taken sometime in the 1940s or 1950s.

Four of the women are seated, with open books and what look like course materials in front of them on the table. One of the seated women has a typewriter in front of her, possibly taking notes or transcribing something. The others seem to be engaged in reading or discussing the books.

Behind the seated women, four other women stand, watching or participating in the activity. They are all wearing dresses or blouses and skirts, and some have glasses. Their expressions are neutral to slightly interested.

The table is a dark color and has two visible drawers with simple handles. The background is a plain, light-colored curtain. The overall lighting is soft, creating a sense of a studious or academic setting.

Created by gemini-2.0-flash-lite on 2025-05-17

Here is a description of the image:

The photograph is a vintage black and white shot featuring a group of nine women. They are arranged behind a large, dark wooden table, likely in a classroom or office setting.

The women are all dressed in styles characteristic of the mid-20th century, with modest dresses, blouses, and skirts. Most wear glasses and have their hair styled in classic waves and curls. They appear to be engaged in a meeting or study session, as they are looking at books, papers, and one woman is operating a typewriter. The background is a simple, plain curtain. The photograph has a formal and posed feel, characteristic of the era it was taken in.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-03

The image depicts a group of eight women gathered around a large table. The setting appears to be from the mid-20th century, likely the 1940s or 1950s, based on the style of clothing and hairstyles.

Here are some detailed observations:

Clothing and Appearance:

- The women are dressed in a variety of styles typical of the era, including dresses, blouses, and skirts.

- Hairstyles are also characteristic of the time, with several women wearing short, curled hairstyles.

- Some women are wearing glasses.

Activity:

- The women are engaged in different tasks around the table.

- Three women are seated at the table, appearing to be writing or reading from books.

- One woman is seated at a typewriter, which is placed on the table.

- The remaining four women are standing behind the seated women, observing or possibly supervising.

Setting:

- The background is a plain curtain, suggesting the photo might have been taken in a studio or a staged environment.

- The table is large and wooden, with drawers on either side.

- The atmosphere appears to be formal or professional, possibly indicating a work or educational setting.

Equipment:

- The presence of the typewriter and books suggests that the women might be involved in clerical, administrative, or educational work.

Overall, the image captures a moment of collaboration or instruction among a group of women, reflecting the professional roles and attire of the mid-20th century.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-14

This is a black-and-white photograph of a group of eight women. They appear to be engaged in an educational or professional setting, possibly a secretarial or clerical training class. The women are seated around a wooden table, which has a drawer unit underneath. On the table, there are several open books, a typewriter, and a small box, suggesting that they might be learning about secretarial skills or office work.

The women are dressed in formal or business attire typical of mid-20th-century fashion, including blouses, skirts, and jackets. The woman at the center of the group is operating the typewriter, while the others seem to be observing or waiting for their turn. The background features a plain curtain, which keeps the focus on the group at the table. The overall atmosphere of the image suggests a formal and structured environment, likely from the 1940s or 1950s.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-14

The image is a black-and-white photograph of a group of women gathered around a rectangular table. There are eight women in total, with four seated at the table and four standing behind them. The women are dressed in what appears to be mid-20th century fashion, wearing dresses and jackets. They are engaged in some sort of activity, possibly a study group or a discussion, as several of them are holding open books or papers. The table has a few items on it, including a box and some papers. The setting appears to be indoors, with a plain curtain in the background. The overall atmosphere suggests a formal or semi-formal gathering, possibly for educational or professional purposes.