Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 24-34 |
| Gender | Male, 99.9% |
| Calm | 97.8% |
| Sad | 1.3% |
| Surprised | 0.4% |
| Happy | 0.2% |
| Confused | 0.2% |
| Disgusted | 0.1% |
| Angry | 0% |
| Fear | 0% |

Feature analysis

Amazon

AWS Rekognition

| Person | 99.8% |

Categories

Imagga

Created on 2022-01-15

| Category | Confidence |
| --- | --- |
| people portraits | 72.9% |
| interior objects | 10% |
| text visuals | 5.4% |
| streetview architecture | 3.6% |
| beaches seaside | 2.5% |
| events parties | 2.3% |
| paintings art | 1.9% |

Captions

Microsoft

Created by unknown on 2022-01-15

| Caption | Confidence |
| --- | --- |
| a group of people standing in front of a window | 67.3% |
| a group of people standing in front of a store | 63% |
| a group of people standing in front of a truck | 59.2% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-11

the team in the operating room.

Salesforce

Created by general-english-image-caption-blip on 2025-05-23

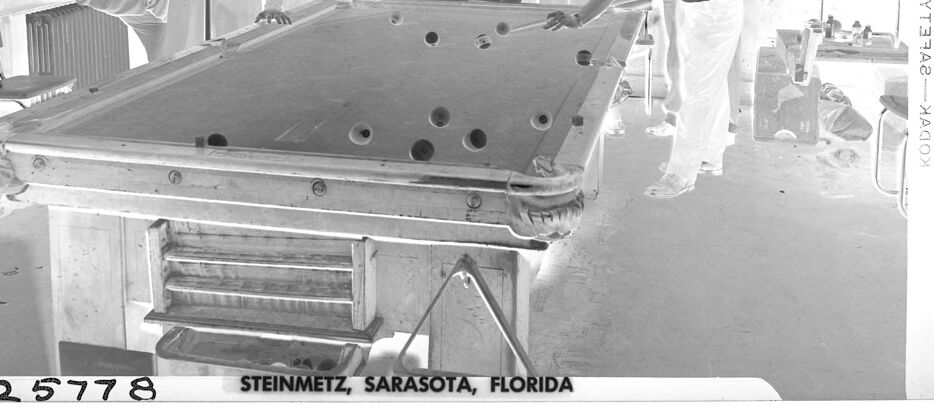

a photograph of a group of people playing pool games in a room

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-11

The image depicts a group of individuals gathered around a billiards table in a room with large windows and chairs along the walls. The setting appears to be casual, with some individuals standing and others seated. The billiards table features several holes on its surface, possibly indicating a specialized or modified game. Hanging above the table is a light fixture, suggesting that the area is purposefully lit for the game. The label "Steinmetz, Sarasota, Florida" is visible at the bottom, indicating the location or photographer.

Created by gpt-4o-2024-08-06 on 2025-06-11

The image depicts a negative photograph of a group of men gathered around a tabletop game or billiard table. The table has pockets similar to a pool table, and one man appears to be playing while the others, dressed casually in shirts and pants, observe him. The room has multiple chairs placed around the perimeter and features large windows or skylights on the ceiling. There is a light fixture hanging above the table. The image has visible markings that indicate "Steinmetz, Sarasota, Florida" at the bottom along with the number "25778".

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-17

The image shows a group of people gathered around a pool table in what appears to be a workshop or studio setting. The room has large windows, a hanging lamp, and some chairs and other equipment visible. The people seem to be examining or working on the pool table, which has several holes or pockets in it. The image is in black and white, giving it a vintage or historical feel.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-17

This is a black and white historical photograph taken in Sarasota, Florida, as indicated by the caption "STEINMETZ, SARASOTA, FLORIDA." The image shows a group of people gathered around what appears to be a pool or billiards table. The players are wearing a mix of dark and light-colored clothing, with some in white and others in darker shirts. The scene appears to be indoors, with some lighting fixtures visible hanging from above. The photo has a vintage quality to it, likely from the mid-20th century, and captures what seems to be a social gathering or recreational moment at a pool hall or social club.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-02

The image depicts a black-and-white photograph of a group of men gathered around a pool table, with one man in the foreground preparing to shoot a cue ball.

Foreground:

- The man in the foreground is positioned on the right side of the image, leaning over the pool table with his cue stick poised to strike the cue ball.

- He wears a dark-colored shirt and white pants, and has short hair.

Background:

- Behind the man in the foreground, a group of approximately ten men are standing or sitting around the pool table, observing the scene.

- They are dressed in casual attire, including t-shirts, shorts, and pants.

- Some of the men are holding pool cues, while others appear to be watching the game.

Pool Table:

- The pool table is positioned centrally in the image, with the man in the foreground preparing to shoot the cue ball.

- The table has several holes and pockets, indicating that it is a standard-sized pool table.

Lighting:

- The room is well-lit, with several light sources visible in the background.

- The lighting is soft and even, casting a warm glow over the scene.

Overall:

- The image captures a moment of camaraderie and friendly competition among the group of men gathered around the pool table.

- The black-and-white photograph adds a sense of nostalgia and timelessness to the scene, evoking memories of simpler times and social gatherings.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-02

The image is a black-and-white photograph of a group of men gathered around a pool table. The scene appears to be set in a room with a high ceiling, featuring windows and a light fixture hanging above the table. The men are dressed in casual attire, with some wearing dark shirts and others in lighter colors. One man is leaning on the table, while another is poised to strike the cue ball.

In the foreground, the pool table takes center stage, with its surface reflecting the light from the overhead fixture. The table's legs are visible, and a few chairs are scattered around the room, suggesting that the space may be used for social gatherings or recreational activities.

At the bottom of the image, a caption reads "STEINMETZ, SARASOTA, FLORIDA," indicating the location where the photograph was taken. The overall atmosphere of the image suggests a relaxed and informal setting, with the men enjoying each other's company as they engage in a game of pool.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-01

This black-and-white photograph captures a group of men engaged in a game of pool in a room with a ceiling lamp. The players are dressed in casual attire, with one man wearing a watch. The pool table is positioned centrally in the room, surrounded by chairs and a radiator. The image includes a watermark in the bottom left corner, indicating the location as Steinmetz, Sarasota, Florida, along with a numerical code.

Created by amazon.nova-pro-v1:0 on 2025-06-01

A black-and-white photo of a group of men playing pool in a room with a billiard table, a lamp, a chair, and a radiator. The man in the front is bending down and holding a cue stick, and he is about to hit the ball. The other men are standing behind him, and some are holding a cue stick. The room has a ceiling with a hanging lamp and a window with a curtain.

Google Gemini

Created by gemini-2.0-flash on 2025-05-18

Here is a description of the image:

This is a black and white photograph of a group of men playing or watching a game of billiards in a room, probably in Sarasota, Florida. The photo appears to be an inverted print of a negative, which makes the lights dark and vice versa.

In the center of the room is a billiard table, complete with billiard balls scattered across its surface. One man is leaning over the table, aiming with a cue stick as if about to take a shot. Other men surround the table, some standing, some sitting, and all are looking at the action on the table. Their poses suggest that they are intently watching the game.

Above the table, a large lamp fixture hangs, illuminating the playing surface. Behind the players are windows, letting in natural light. The room seems casual and relaxed, indicative of a pastime or social gathering.

The photo is labeled "STEINMETZ, SARASOTA, FLORIDA" at the bottom, suggesting the location of the scene, and includes a number "25778," possibly a reference or catalog number. The image has a vintage quality.

Created by gemini-2.0-flash-lite on 2025-05-18

Here's a description of the image:

Overall Impression: This appears to be a vintage photograph, possibly a negative. It shows a group of men in a billiards room. The style of the image suggests it was taken in the mid-20th century.

Setting and Composition:

- The scene is indoors, likely in a billiards or pool room.

- A large pool table dominates the foreground.

- There are several men in the room, some standing around the table and others observing.

- The lighting is relatively diffused, with a prominent overhead light fixture.

- The walls appear to be dark, possibly painted. There is a window or opening in the background.

People:

- One man is in the process of taking a shot, leaning over the pool table with a cue stick.

- Other men are watching, some with their hands on the table or standing with arms crossed.

- The men are dressed casually, in shirts and pants.

Details:

- The pool table has pockets, and a few billiard balls are scattered on its surface.

- There are other items visible in the background, such as chairs, and what looks like a radiator against a wall.

- The image has a vintage feel, with a clear focus on the people and the activity.

Inscriptions:

- The image appears to contain text along the bottom, including the numbers "25778" and the location "STEINMETZ, SARASOTA, FLORIDA."

- There is also text on the right side of the image indicating "KODAK SAFETY" which may indicate the type of film.

This image offers a glimpse into a scene of leisure and camaraderie from the past, capturing a moment in a billiards game.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-02

The image depicts a group of men gathered in what appears to be a recreational or social setting, possibly a club or a billiards room. The scene is set in Sarasota, Florida, as indicated by the text at the bottom of the image.

In the foreground, a man is leaning over a billiards table, preparing to take a shot. The table has multiple balls arranged on it, suggesting a game in progress. The man is dressed in light-colored pants and a dark shirt.

Surrounding the billiards table, several other men are standing or sitting, watching the game. They are dressed in casual attire, with some wearing short-sleeved shirts and others in light-colored pants. The expressions on their faces suggest they are engaged in the game and possibly conversing with one another.

The room itself has a somewhat utilitarian appearance, with a large overhead light fixture providing illumination. There are chairs placed around the room, and some equipment or machinery is visible in the background, indicating that this might be a multi-purpose space.

Overall, the image captures a moment of leisure and camaraderie among the group of men, centered around a game of billiards.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-24

This is a black-and-white photograph showing a group of people gathered around a pool table, seemingly in a recreational setting. The scene appears to be indoors, as indicated by the ceiling lights and the visible skylight in the background. The individuals are casually dressed, with some wearing polo shirts and others in lighter attire. Most of the people are standing, and one person is in the process of taking a shot with a pool cue, while others are watching and engaged in conversation. The room has a relaxed atmosphere, and there are a few chairs visible, one of which appears to be in the process of being moved. The image has a vintage quality, and there is a text overlay at the bottom that reads "2 5778 STEINMETZ, SARASOTA, FLORIDA," suggesting the location and a catalog number.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-24

This black-and-white photograph captures a group of men gathered around a pool table in a casual, social setting. The men are dressed in a mix of uniforms and casual attire, suggesting they might be in a recreational area, possibly a military or naval facility. The room has a high ceiling with skylights and a hanging light fixture. There are chairs and a radiator in the background, and the floor appears to be concrete. The image has a vintage feel, likely from the mid-20th century. The text at the bottom of the image reads "STEINMETZ, SARASOTA, FLORIDA," indicating the location or the photographer.