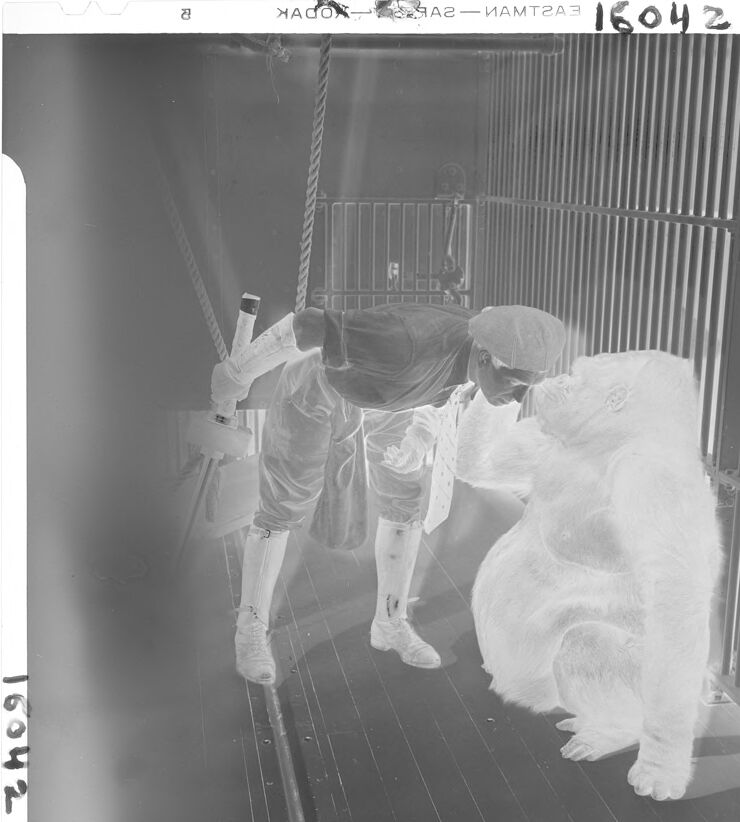

Machine Generated Data

Tags

Amazon

created on 2021-12-15

Clarifai

created on 2023-10-15

Imagga

created on 2021-12-15

| Tag | Confidence |
| --- | --- |
| groom | 27.1 |
| bride | 22.4 |
| wedding | 22.1 |
| negative | 21.4 |
| people | 20.6 |
| love | 18.9 |
| film | 18.8 |
| couple | 18.3 |
| dress | 18.1 |
| cradle | 15.3 |
| portrait | 14.9 |
| married | 14.4 |
| person | 13.5 |
| happiness | 13.3 |
| marriage | 13.3 |
| happy | 13.2 |
| photographic paper | 13 |
| celebration | 12.8 |
| baby bed | 12 |
| women | 11.9 |
| adult | 11.6 |
| man | 11.4 |
| bouquet | 11.3 |
| elegance | 10.9 |
| veil | 10.8 |
| smile | 10.7 |
| hand | 10.6 |
| furniture | 10.6 |
| human | 10.5 |
| men | 10.3 |
| two | 10.2 |
| wed | 9.8 |
| romantic | 9.8 |
| family | 9.8 |
| smiling | 9.4 |
| face | 9.2 |
| child | 9.1 |
| cheerful | 8.9 |
| ceremony | 8.7 |
| light | 8.7 |
| photographic equipment | 8.7 |
| life | 8.7 |
| wife | 8.5 |
| attractive | 8.4 |
| fashion | 8.3 |
| toilet tissue | 8.1 |
| male | 7.8 |
| pretty | 7.7 |
| youth | 7.7 |
| traditional | 7.5 |
| city | 7.5 |
| tradition | 7.4 |
| sexy | 7.2 |
| black | 7.2 |
| suit | 7.2 |
| looking | 7.2 |
| romance | 7.1 |
| day | 7.1 |
| furnishing | 7 |

Google

created on 2021-12-15

| Tag | Confidence |
| --- | --- |
| Monochrome photography | 72 |
| Rectangle | 71.6 |
| Art | 69.3 |
| Pattern | 67.6 |
| Font | 67.3 |
| Monochrome | 65.6 |
| Baby | 65.2 |
| Stock photography | 64.4 |
| Room | 63.8 |
| Visual arts | 59.1 |
| Photographic paper | 57.6 |
| Paper product | 53.3 |
| Paper | 52.9 |
| Vintage clothing | 52.8 |

Microsoft

created on 2021-12-15

| Tag | Confidence |
| --- | --- |
| text | 99.8 |
| black and white | 86.3 |
| clothing | 65.3 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 34-50 |
| Gender | Male, 71.3% |
| Calm | 86% |
| Sad | 10.8% |
| Happy | 1% |
| Confused | 0.9% |
| Surprised | 0.7% |
| Angry | 0.4% |
| Disgusted | 0.2% |
| Fear | 0.1% |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 99.8% |

Captions

Text analysis

Amazon

16042

a

zhogi

74091

НАСОЛ

-ИАМТГАЗ

9AS -ИАМТГАЗ

9AS

16042

HAGO

A2-MAMTZA3

16042

16042

HAGO

A2-MAMTZA3