Machine Generated Data

Tags

Amazon

created on 2022-01-08

Clarifai

created on 2023-10-25

Imagga

created on 2022-01-08

| shop | 43.1 |
| mercantile establishment | 32.7 |
| architecture | 30.5 |
| building | 30.3 |
| city | 28.3 |
| stall | 27.8 |
| place of business | 21.5 |
| bakery | 19.7 |
| structure | 18.2 |
| travel | 17.6 |
| old | 16.7 |
| house | 16.7 |
| urban | 15.7 |
| sky | 15.3 |
| machinist | 14.3 |
| street | 13.8 |
| construction | 13.7 |
| sign | 13.5 |
| town | 13 |
| billboard | 12 |
| vehicle | 11.7 |
| transportation | 11.7 |
| toyshop | 11.4 |
| light | 11.4 |
| tourism | 10.7 |
| car | 10.4 |
| establishment | 10.4 |
| exterior | 10.1 |
| road | 9.9 |
| history | 9.8 |
| daily | 9.6 |
| signboard | 9.6 |
| brick | 9.4 |
| stone | 9.3 |
| tree | 9.2 |
| business | 9.1 |
| night | 8.9 |
| sculpture | 8.7 |
| downtown | 8.6 |
| office | 8.6 |
| wall | 8.5 |
| culture | 8.5 |
| monument | 8.4 |
| famous | 8.4 |
| historic | 8.2 |
| transport | 8.2 |
| landmark | 8.1 |
| shoe shop | 8 |
| art | 7.9 |
| ancient | 7.8 |
| roof | 7.6 |
| clouds | 7.6 |
| vintage | 7.4 |
| danger | 7.3 |
| tower | 7.2 |
| modern | 7 |

Google

created on 2022-01-08

| Photograph | 94.2 |
| Black | 89.8 |
| Black-and-white | 86.9 |
| Motor vehicle | 85.7 |
| Style | 84 |
| Vehicle | 83.4 |
| Car | 80.6 |
| Monochrome | 78.1 |
| Tints and shades | 76.9 |
| Monochrome photography | 76.7 |
| Vehicle door | 75.2 |
| Umbrella | 75 |
| Snapshot | 74.3 |
| Road | 70.4 |
| Art | 70.2 |
| Font | 69.9 |
| Automotive tire | 69 |
| Automotive exterior | 67.8 |
| Automotive lighting | 66.2 |
| Tire | 65.8 |

Microsoft

created on 2022-01-08

| text | 99.9 |
| black and white | 91.8 |
| street | 77 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

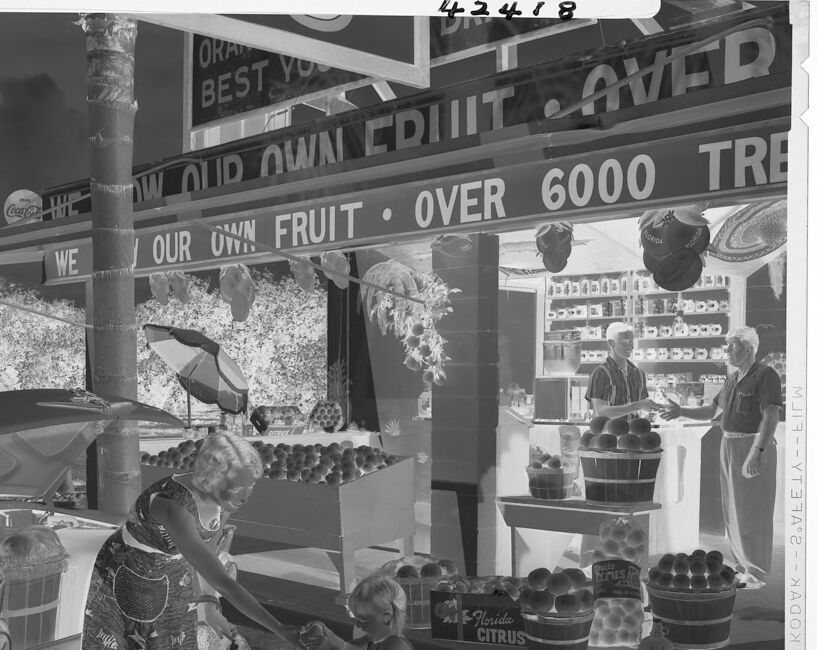

| Age | 26-36 |
| Gender | Female, 94.5% |
| Calm | 46.6% |
| Sad | 38.9% |
| Happy | 8.1% |
| Angry | 4.1% |
| Disgusted | 0.7% |
| Surprised | 0.7% |
| Confused | 0.5% |
| Fear | 0.3% |

AWS Rekognition

| Age | 49-57 |
| Gender | Female, 88.5% |
| Calm | 95.6% |
| Sad | 4% |
| Confused | 0.1% |
| Happy | 0.1% |
| Surprised | 0% |
| Disgusted | 0% |
| Angry | 0% |
| Fear | 0% |

Google Vision

| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Unlikely |
| Blurred | Possible |

Google Vision

| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| streetview architecture | 97.9% |
| paintings art | 1.4% |

Captions

Microsoft

created on 2022-01-08

| a person standing in front of a store | 67% |
| a person standing in front of a store | 66.9% |
| a group of people standing in front of a store | 53.9% |

Text analysis

Amazon

6000

OUR

OVER

TRE

WE

BEST

FRUIT

CITRUS

W

FLORIDA

OWN

Coca-Cola

OUR OWN FRUIT ® OVER 6000 TRE

ORA

OUD

W OUD OWN CRUIT AVER

43418

CRUIT

BEST YO

Horida

AVER

YO

hrus

Spuida

the

®

az

42418

OR

BEST YO

OUD OWN EDUIT AVER

OUR OWN FRUIT 0VER 6000 TRE

Coca-Col

WE

EFlorida

CITRUS

--YT3A -

42418

OR

BEST

YO

OUD

OWN

EDUIT

AVER

OUR

FRUIT

0VER

6000

TRE

Coca-Col

WE

EFlorida

CITRUS

--YT3A

-