Machine Generated Data

Tags

Amazon

created on 2022-01-08

| Tag | Confidence (%) |
|---|---|
| Person | 99 |
| Human | 99 |
| Person | 98.4 |
| Clothing | 88.8 |
| Apparel | 88.8 |
| Person | 87.9 |
| Person | 84.4 |
| Face | 83.3 |
| Furniture | 74.5 |
| Chair | 73.8 |
| Tank | 71.6 |
| Military | 71.6 |
| Transportation | 71.6 |
| Military Uniform | 71.6 |
| Armored | 71.6 |
| Vehicle | 71.6 |
| Army | 71.6 |
| Indoors | 65.9 |
| Meal | 65.6 |
| Food | 65.6 |
| People | 63.9 |
| Room | 63.2 |
| Screen | 60.8 |
| Electronics | 60.8 |
| Flooring | 60.2 |
| Finger | 59.7 |
| Table | 59.6 |
| Monitor | 59.3 |
| Display | 59.3 |
| Photography | 57.4 |
| Photo | 57.4 |
| Man | 57.2 |
| Female | 55.6 |
| Person | 49.9 |

Clarifai

created on 2023-10-25

Imagga

created on 2022-01-08

Google

created on 2022-01-08

| Tag | Confidence (%) |
|---|---|
| Black | 89.7 |
| Black-and-white | 84.7 |
| Style | 84 |
| Monochrome photography | 77.2 |
| Monochrome | 74.8 |
| Snapshot | 74.3 |
| Cooking | 69.7 |
| Art | 69.1 |
| Room | 67.4 |
| Office equipment | 66.6 |
| Font | 66.3 |
| Event | 65.3 |
| Stock photography | 63.9 |
| Sitting | 62.4 |
| Rectangle | 61.6 |
| Machine | 59.6 |
| Service | 57.5 |
| Photographic paper | 57.2 |
| Vintage clothing | 57 |
| Display device | 50 |

Microsoft

created on 2022-01-08

| Tag | Confidence (%) |
|---|---|
| person | 98.4 |
| text | 96.6 |
| black and white | 90.4 |
| concert | 90.1 |
| piano | 83.5 |
| musical instrument | 81.2 |
| clothing | 68 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

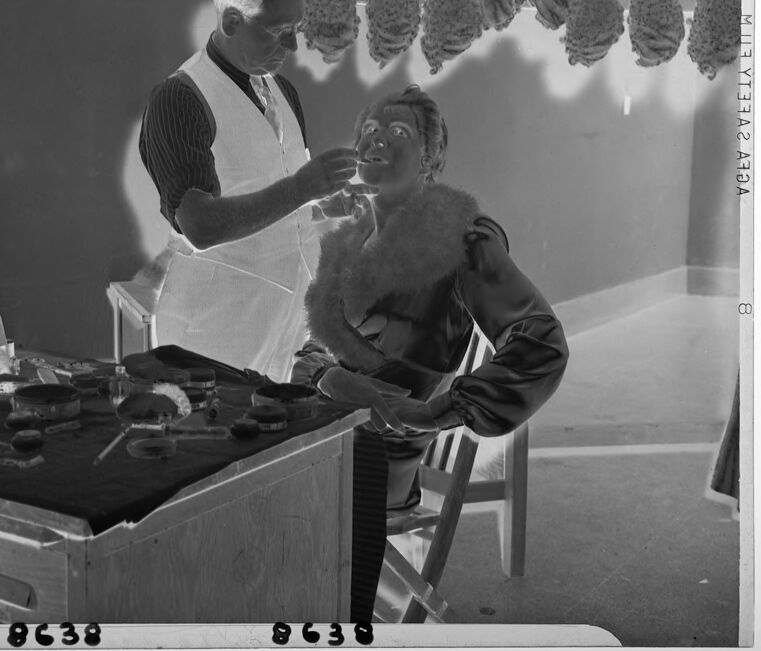

| Attribute | Estimate |
|---|---|
| Age | 25-35 |
| Gender | Female, 67% |
| Surprised | 90.1% |
| Happy | 5.4% |
| Fear | 2.2% |
| Calm | 0.9% |
| Sad | 0.4% |
| Disgusted | 0.4% |
| Angry | 0.3% |
| Confused | 0.2% |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| interior objects | 52.7% |
| paintings art | 19.6% |
| people portraits | 7.5% |
| events parties | 6.6% |
| food drinks | 4.3% |
| pets animals | 3.9% |
| text visuals | 2.2% |
| streetview architecture | 1.8% |

Captions

Microsoft

created on 2022-01-08

| Caption | Confidence |
|---|---|
| a man and a woman standing in front of a window | 46.6% |
| a man and a woman standing in front of a box | 46.5% |
| a man and a woman sitting in a box | 39.9% |

Text analysis

Amazon

8638

8

MU7YT37A2

MU7YT37A2 A70A

8633

A70A

8638

MIR YT33A 2 A73A

8638

MIR

YT33A

2

A73A