Machine Generated Data

Face analysis

Amazon

AWS Rekognition

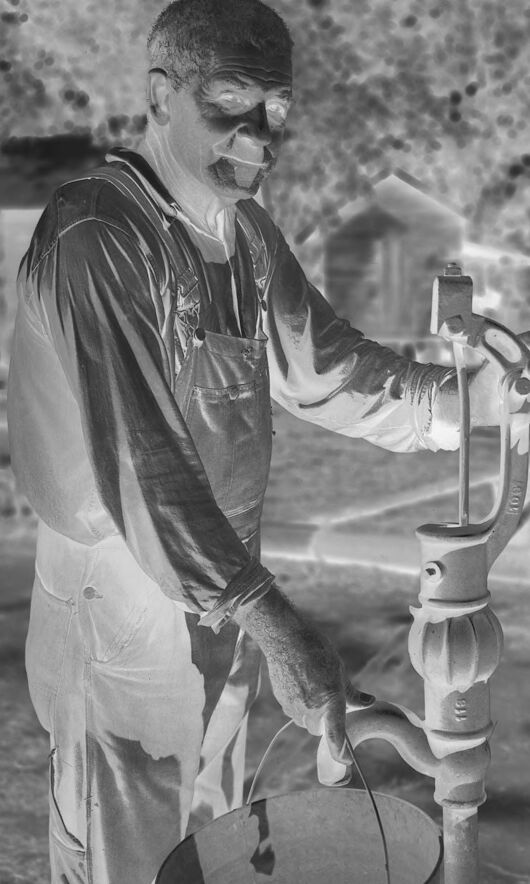

| Attribute | Estimate |
|---|---|
| Age | 48-56 |
| Gender | Female, 65.8% |
| Happy | 41.8% |
| Calm | 27.4% |
| Surprised | 20.8% |
| Disgusted | 4.2% |
| Fear | 1.9% |
| Sad | 1.4% |
| Angry | 1.3% |
| Confused | 1.2% |

Feature analysis

Amazon

AWS Rekognition

| Feature | Confidence |
|---|---|
| Person | 99.1% |

Categories

Imagga

Created on 2022-01-09

| Category | Confidence |
|---|---|
| paintings art | 65.3% |
| interior objects | 25.7% |
| streetview architecture | 8.2% |

Captions

Microsoft

Created by unknown on 2022-01-09

| Caption | Confidence |
|---|---|
| a man standing in front of a building | 81.9% |
| an old photo of a man | 81.8% |
| old photo of a man | 81.7% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-14

a man pumps water into a well.

Salesforce

Created by general-english-image-caption-blip on 2025-05-20

a photograph of a man in a leather jacket is using a hose to fix a pipe

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-09

The image shows a man wearing overalls and a long-sleeved shirt operating a hand pump to draw water. He is holding a bucket under the pump spout to collect the water. The background features a rural setting with trees and structures such as buildings or houses, giving it a rustic atmosphere. The image appears to be in negative format, which reverses light and dark areas.

Created by gpt-4o-2024-08-06 on 2025-06-09

The image is a negative photo showing a person standing outdoors, operating a hand water pump. The individual is wearing overalls and a long-sleeved shirt. They have one hand on the pump handle, while the other hand is holding a metal bucket by its handle. The background appears to be a rural setting, with trees and a couple of wooden structures visible. The words "KODAK SAFETY" are printed along the top edge of the image.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-14

The image shows a man wearing a white protective suit and mask, working on some kind of industrial equipment or machinery. He appears to be in a workshop or industrial setting, with a blurred background suggesting a cluttered, industrial environment. The man has a serious, focused expression as he operates the machinery in front of him. The image has a black and white, documentary-style aesthetic, capturing the man at work in what seems to be a hazardous or specialized industrial setting.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-14

This is a black and white photograph showing a person operating what appears to be an old-fashioned water pump. The individual is wearing overalls and a long-sleeved shirt, and is positioned next to a manual hand pump, which was a common way to draw water from wells in earlier times. The pump appears to be made of cast iron with a curved handle and decorative details typical of vintage water pumps. The image has a historical quality to it, likely taken sometime in the early-to-mid 20th century. The background appears somewhat blurred but shows what might be stone or brick structures.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-28

The image is a black-and-white photograph of a man operating a water pump. The man, dressed in overalls and a long-sleeved shirt, is shown from the waist up, facing to the right. He has short hair and appears to be in his 50s or 60s. His left hand is on the handle of the pump, while his right hand is on the spout, which is bent downward. The pump is made of metal and has a wooden handle.

In the background, there are trees and buildings visible, although they are not clearly defined due to the low resolution of the image. The overall atmosphere of the image suggests that it was taken in a rural or agricultural setting, possibly during the mid-20th century.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-28

This image is a black and white photograph of a man operating a hand pump. The man is wearing overalls and has short hair. He is standing in front of a hand pump, which he is operating with both hands. The background of the image is blurry, but it appears to be a rural or outdoor setting. There are trees and buildings visible in the distance.

The image has a vintage feel to it, and the quality of the photograph suggests that it may have been taken many years ago. The overall atmosphere of the image is one of simplicity and hard work. The man's expression is serious, and his posture suggests that he is focused on the task at hand. The image conveys a sense of nostalgia and a connection to the past.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-04

The image is a black-and-white photograph of a man standing in front of a pump. He is dressed in a long-sleeve shirt and overalls, which suggest that he is engaged in some form of manual labor. The man is holding the handle of the pump with his right hand, and his left hand is resting on the pump's spout. The pump is positioned on a concrete base, and there is a bucket placed below it. Behind the man, there is a blurry background that includes a house and some trees.

Created by amazon.nova-pro-v1:0 on 2025-06-04

The black-and-white photo shows a man standing and holding a pump with both hands. He is wearing a long-sleeve shirt and an apron. He is holding a bucket in his right hand. Behind him, there is a blurry background of a house and a tree. The image has a watermark on the top right corner with the text "Y3343-X400".

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-01

The image shows a man pumping water from an old-fashioned hand pump. He is wearing light-colored overalls and a long-sleeved shirt. His sleeves are rolled up a bit and he has a serious look on his face. He is holding a metal bucket with his left hand. The pump itself is made of metal and is somewhat worn. Behind him is what appears to be a blurred background of trees and buildings. The image has a vintage feel, with a negative effect.

Created by gemini-2.0-flash on 2025-05-01

The black and white image is a negative of a man at an outdoor water pump. The man, middle-aged with short hair, is wearing overalls over a long-sleeved shirt. He stands slightly angled to the right, operating a tall, cast-iron water pump. His right hand is on the pump handle, while his left hand holds a bucket underneath the pump spout.

Behind the man, there are indistinct structures that appear to be buildings, and foliage in the background suggests a rural or outdoor setting. The image is from a Kodak safety negative.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-02

The image is a black-and-white photograph featuring a man standing outdoors. He is operating a manual water pump, which is a common sight in rural areas. The man is wearing a long-sleeved shirt, overalls, and light-colored pants. He is holding a cup or container under the spout of the pump, presumably to collect water. The background shows a building and some trees, indicating a rural or semi-rural setting. The photograph has a vintage feel, possibly due to the style of clothing and the equipment being used. The image also has some visible damage or wear, such as spots and scratches, which adds to its aged appearance. The brand "KODAK SAFETY" is visible at the top, suggesting it was taken with a Kodak film.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-10

This is a black and white photograph depicting a man engaged in a task that appears to involve working with a piece of machinery. The man is wearing a long-sleeved shirt, overalls, and a protective mask that covers most of his face, indicating the activity might be hazardous or require respiratory protection. He is operating a piece of equipment that looks like an industrial machine, possibly for mixing or manipulating materials. The setting seems to be an industrial or construction site, given the heavy-duty nature of the equipment and the man's attire. The photograph has a vintage quality, suggesting it could be from an earlier era, and the image is marked with "KODAK SAFETY" in the upper right corner, indicating that it was processed using Kodak safety film.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-10

This is a black-and-white photograph featuring a man engaged in painting or coating an ornamental metal object, likely a decorative pole or stand. The man is wearing overalls and appears to be holding a paint or spray gun, with paint visibly dripping from the object he is working on. The background suggests an outdoor setting, possibly a workshop or a construction area, with blurred structures and pipes in the distance. The image has a vintage quality, indicated by the "Kodak Safety" text at the top and the visible film perforations on the sides.