Machine Generated Data

Tags

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Estimate |
| --- | --- |
| Age | 37-45 |
| Gender | Female, 96.7% |
| Happy | 65.2% |
| Calm | 24.4% |
| Surprised | 3.6% |
| Fear | 3% |
| Sad | 1.1% |
| Confused | 1% |
| Disgusted | 1% |
| Angry | 0.8% |

Feature analysis

Amazon

Clarifai

AWS Rekognition

| Feature | Confidence |
| --- | --- |
| Person | 97.6% |

Categories

Imagga

created on 2022-01-09

| Category | Confidence |
| --- | --- |
| streetview architecture | 76.9% |
| paintings art | 21.7% |

Captions

Microsoft

created by unknown on 2022-01-09

| Caption | Confidence |
| --- | --- |
| a group of people posing for a photo | 77.6% |
| a group of people posing for the camera | 77.5% |
| a group of people posing for a picture | 77.4% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-11

photograph of a young boy and girl riding a Ferris wheel.

Salesforce

Created by general-english-image-caption-blip on 2025-05-22

a photograph of a woman in a costume is standing in front of a plane

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-14

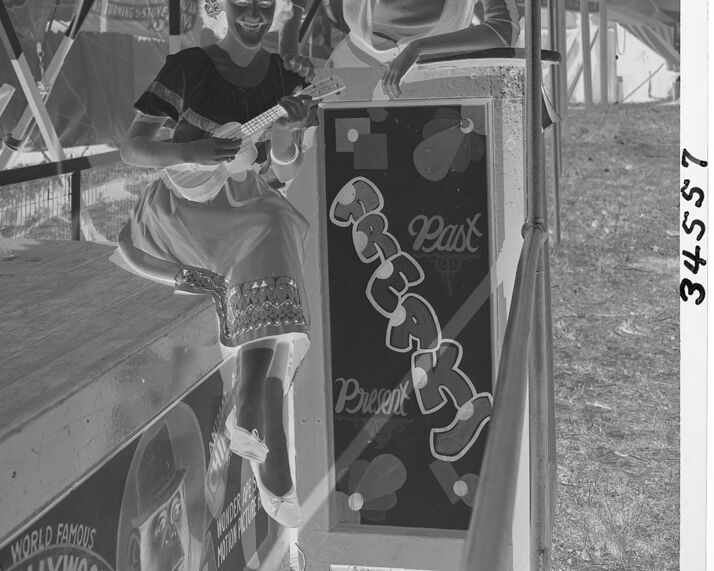

The image appears to be set at a carnival or sideshow environment. It features two figures positioned near a large sign or poster that reads "FREAK PAST PRESENT." The poster is colorful and uses bold fonts to draw attention. Behind them, there are tents and banners with text and graphics, suggesting a lively atmosphere. The foreground includes a table or platform, and one of the figures is sitting on it, holding a small musical instrument resembling a ukulele. To the side, a small loudspeaker is visible, possibly used for announcements.

Created by gpt-4o-2024-08-06 on 2025-06-14

The image depicts a negative photograph of two people oriented towards the camera, situated in what appears to be a carnival or amusement setting. One individual is seated on an elevated platform, possibly a stage or an attraction booth, holding a small stringed instrument, possibly a ukulele. The attire includes a dress with a detailed pattern on the hem, suggesting a festive or performance theme. Visible in the background are large banners and striped tent structures indicative of a fair atmosphere. Prominent in the foreground is a sign that contains stylized text; part of the sign reads “Past Present,” possibly advertising an attraction or show at the fair. The image has a black and white aesthetic typical of film negatives from earlier decades.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-19

The image shows two women in a carnival or circus-like setting. One woman is sitting on a platform playing a guitar, while the other woman is standing next to her, smiling. They appear to be part of some kind of entertainment or performance, as evidenced by the colorful banners and decorations around them. The overall scene has a vintage or historical feel to it, with the black and white photography style.

Created by us.anthropic.claude-3-opus-20240229-v1:0 on 2025-06-19

The black and white image shows two young women happily smiling and playing ukuleles while standing on a small stage or platform. They are wearing matching dresses or outfits with ruffled sleeves. The stage has a banner that says "Pax" and some other text that is partially cut off in the photo. The women seem to be performing or entertaining at some kind of outdoor fair or carnival, based on the tents and structures visible in the background.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-19

This appears to be a black and white photograph taken at a carnival or fair, likely from several decades ago. The image shows two people at what appears to be a carnival booth or attraction. One person is seated and holding what looks like a ukulele or small stringed instrument, while another person is positioned higher up. They're both smiling and appear to be carnival workers or performers. The setting includes carnival tents or awnings in the background, and there's a decorative sign panel visible with some text and designs on it. The photo has that classic vintage carnival atmosphere, capturing a moment of entertainment and amusement park culture from what seems to be the mid-20th century.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-09

The image is a black-and-white photograph of two women in a carnival setting. The woman on the left is sitting on a wooden platform, playing a ukulele and wearing a dark dress with a white apron. The woman on the right is standing behind the platform, wearing a light-colored dress and a hat.

In the background, there are several tents and banners, including one that reads "World Famous Hollywood" and another that says "Past Present". The overall atmosphere suggests a lively and festive event, possibly a carnival or circus.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-09

This image is a black-and-white photograph of two women, one playing a ukulele and the other holding a megaphone. The woman on the left has short, curly hair and is wearing a dark top with a light skirt featuring an embroidered hem. She is sitting on a platform, playing a ukulele. The woman on the right has short, curly hair and is wearing a light top with a light vest over it. She is standing behind the platform, holding a megaphone.

In the background, there are several banners and signs, including one that reads "World Famous Hollywood" and another that appears to be an advertisement for a circus or carnival. The overall atmosphere of the image suggests that the women are performing at an outdoor event, possibly a circus or carnival.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-08

The image is a black-and-white photograph depicting two women in a circus setting. The woman on the left is seated on a wooden platform, holding a ukulele, and appears to be playing it. She is dressed in a skirt and a white blouse, and her hair is styled in a way that is reminiscent of the 1940s or 1950s. The woman on the right is standing behind a box or podium, and she is smiling and looking at the woman on the left. She is wearing a white apron and a white blouse, and her hair is styled in a bun. Behind them, there is a tent or canopy, and there are banners and other circus-related decorations. The image has a nostalgic and old-fashioned feel, and it captures a moment of lightheartedness and joy in the midst of a circus performance.

Created by amazon.nova-pro-v1:0 on 2025-06-08

The black-and-white image shows two women, perhaps performers, smiling and posing for a photo. The woman on the left is holding a guitar, and the woman on the right is leaning on a stand with a sign on it. Behind them is a tent with a banner hanging on the side. There is also a stage with a banner on the side. There is a watermark in the bottom right corner.

Google Gemini

Created by gemini-2.0-flash on 2025-05-17

Here is a description of the image:

This black and white image depicts two women in front of what appears to be a show or entertainment venue, possibly a carnival or circus. The image seems to be a photographic negative, as dark areas in the scene appear light, and vice versa.

On the left, a younger woman is seated on an elevated platform, holding and playing a ukulele. She has a floral ornament in her hair and wears a dark, long-sleeved top and a knee-length skirt. The platform she sits on has the words "WORLD FAMOUS HOLLYWOOD MOTION PICTURES" printed on its side.

To the right of the young woman is another woman, possibly older, standing near what appears to be a painted sign that reads "Past," with the word "Present" written beneath a whimsical design. The sign seems to be part of the structure of a booth or show entrance, which also has a pair of megaphones attached to it.

The background is filled with tents and poles of a circus or carnival setting. There are banners or posters visible behind the women advertising other acts. The image has a vintage aesthetic, likely from the mid-20th century or earlier. There is a number "34557" written along the right side.

Created by gemini-2.0-flash-lite on 2025-05-17

Certainly! Here's a description of the image:

Overall Impression:

This is a black and white image, likely a negative of a photograph, featuring two women at what appears to be a fair or carnival setting.

Key Elements and Details:

- The Women: Two women are the primary subjects. One is seated and playing a ukulele. The other stands alongside her, resting her arm on a structure. Both are dressed in the fashion of the era, likely the 1930s or 1940s. They are both smiling and seem to be in a jovial mood.

- Setting: The background suggests a fair or carnival. Tents or banners with text and images can be seen, and there are posts and other structural elements typical of such events.

- Signage: A sign is visible, with the word "Crazy" written vertically and the words "Past" and "Present" beside it. It is likely part of an attraction within the fair.

- Details: The image is full of context clues about the time. The style of clothing, the type of ukulele, and the background elements all contribute to a historical feel.

Composition and Style:

The photograph is well-composed, capturing a sense of activity and the atmosphere of the event. It is a snapshot of a specific moment in time, likely intended to document an event.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-02

The black-and-white photograph depicts two women who appear to be performers at a fair or carnival. They are standing on a platform, likely part of a sideshow or entertainment attraction.

The woman on the left is seated on a raised platform, holding a guitar. She is wearing a dress with a belt and has a joyful expression on her face. The woman on the right is standing next to a decorated box that has "Past" and "Present" written on it, along with some whimsical designs. She is also dressed in a costume that matches the carnival theme.

In the background, there are tents and various fairground structures, including a banner that partially reads "World Famous Hollywood." The overall scene suggests a lively and festive atmosphere, typical of a traveling carnival or fair from a bygone era. The photograph has a vintage feel, indicated by the clothing styles and the quality of the image.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-24

This black-and-white photograph captures a lively scene at what appears to be a fair or carnival. Two women are featured prominently in the image. The woman on the left is seated on the edge of a stage, holding a small guitar and smiling, suggesting she might be performing or entertaining. She has a stylish, vintage hairstyle and is dressed in a short, patterned dress paired with ankle boots. The woman on the right is standing and smiling as well, possibly interacting with the woman on the left. She wears a more formal long-sleeved top and a necklace, and her posture suggests she is engaged in the activity or entertainment.

The background includes banners and signage, which indicate the setting is likely a fairground or a similar event. One sign reads "Hollywood," hinting at a possible theme or setting inspired by Hollywood or a similar famous location. The lighting in the photograph is dramatic, with shadows and highlights creating a classic, vintage feel. The photograph has a border with a number on the right side, possibly a catalog or identification number for the image.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-24

This black-and-white photograph, taken in a vintage style, captures a lively scene at a carnival or fair. In the foreground, a woman with short, light-colored hair is seated on a platform, playing a small guitar or ukulele. She is dressed in a dark dress with a light skirt and a patterned hem. Behind her, another woman with short, light-colored hair stands, smiling and looking towards the camera. She is wearing a light-colored top and a dark dress. A banner with the words "Past," "Present," and "Future" is visible in the background, suggesting a sideshow or attraction related to fortune-telling or predictions. The setting appears to be outdoors, with a tent and some equipment visible in the background. The image has a nostalgic and playful atmosphere.