Machine Generated Data

Tags

Color Analysis

Face analysis

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Amazon

Clarifai

AWS Rekognition

| Label | Confidence |
| --- | --- |
| Person | 97.3% |

Categories

Imagga

Created on 2022-01-08

| Category | Confidence |
| --- | --- |
| paintings art | 90.2% |
| streetview architecture | 9.5% |

Captions

Microsoft

Created by unknown on 2022-01-08

| Caption | Confidence |
| --- | --- |
| a vintage photo of a group of people posing for the camera | 92.8% |
| a vintage photo of a group of people posing for a picture | 92.7% |
| a vintage photo of some people posing for the camera | 91.6% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-13

photograph of a woman sitting on a bench with her dog.

Salesforce

Created by general-english-image-caption-blip on 2025-05-15

a photograph of a woman is sitting in a chair while another woman is standing next to her

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-14

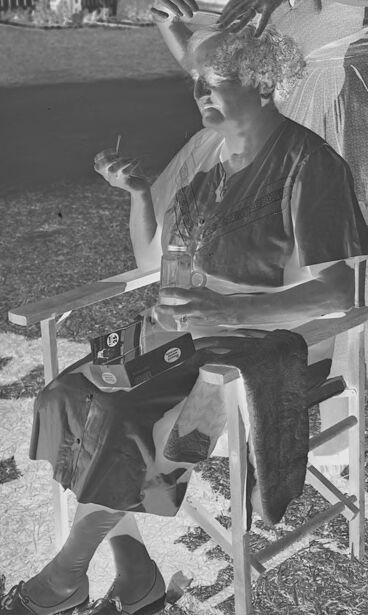

This is a black-and-white negative photograph depicting two women outdoors in a camping or trailer park setting. One woman is seated in a wooden folding chair, while the other stands behind her, styling her hair. The seated woman has curly hair and appears relaxed, holding a small object in one hand and a box in her lap. She is dressed in a dark dress and loafers. The standing woman, wearing sunglasses and a halter-style dress, is actively combing or manipulating the seated woman’s hair.

In the background are several recreational trailers and campers surrounded by trees and grass. Outdoor furniture, including a table and chairs, and other camping items are visible, giving the impression of a serene and casual atmosphere.

Created by gpt-4o-2024-08-06 on 2025-06-14

The image is a black and white negative, showing two women outdoors in what appears to be a trailer park setting. One woman is sitting on a wooden chair, wearing a long dress and holding a cigarette. She has a package of hair dye or styling product on her lap. The other woman is standing behind her, working on the seated woman's hair. Both are dressed in 1950s-style clothing. In the background, there are several trailers with awnings, some chairs, and trees, indicating a casual, relaxed setting. The image has a vintage feel, suggested by the style of clothing and the overall scene.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-19

The image shows an outdoor camping or recreational scene. In the foreground, there is a person sitting in a chair, seemingly working on something. Behind them, there are several trailers or campers parked, suggesting this is a camping or recreational vehicle area. The overall atmosphere appears to be relaxed and informal, with the natural surroundings providing a scenic backdrop to the scene.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-19

This black and white photograph appears to be taken at a trailer park or camping ground, with travel trailers visible in the background. In the foreground, someone is seated in what looks like a chair or stool while another person appears to be styling or working on their hair. The setting seems casual and outdoors, with grass visible on the ground. The image has a vintage quality to it, suggesting it may have been taken some time ago. In the background, you can see some lawn chairs and camping equipment scattered around the trailers.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-09

The image is a black-and-white photograph of a woman cutting another woman's hair in a grassy area. The woman sitting in the chair has short, curly hair and is wearing a dark dress with a light-colored apron over it. She is holding a cigarette in her right hand and has a camera on her lap. The woman standing behind her is wearing a sleeveless dress and has short, curly hair. She is holding scissors in her right hand and is cutting the seated woman's hair.

In the background, there are several trailers and trees. The overall atmosphere suggests a casual, outdoor setting, possibly at a campsite or a park. The woman cutting the hair appears to be doing so in a relaxed manner, and the woman sitting in the chair seems to be enjoying the experience. The presence of the camera on her lap suggests that she may be taking photos of the event.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-09

The image depicts a black-and-white photograph of two women in an outdoor setting, with one woman cutting the hair of the other. The woman sitting in the chair is wearing a dark dress and has a cigarette in her right hand. She also has a glass of liquid in her left hand and appears to be wearing dark shoes. The woman standing behind her is wearing a light-colored dress and has her hands raised as if she is cutting the seated woman's hair.

In the background, there are several trailers or mobile homes, suggesting that the scene may be taking place at a campsite or trailer park. The overall atmosphere of the image appears to be relaxed and casual, with the two women engaged in a friendly activity together.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-08

The black-and-white image shows a woman sitting on a chair with her hair being styled by another woman. The woman sitting is wearing a dress and shoes, and she is holding a bottle in her hand. The woman standing is wearing a dress, a bracelet, and glasses. Behind them, there is a grassy area with a trailer and a ladder. The image has a watermark with the text "3113-H" on the right side.

Created by amazon.nova-pro-v1:0 on 2025-06-08

The black and white photo features a woman sitting on a chair and smoking a cigarette. She is wearing glasses and holding a bottle. Another woman is standing in front of her, and she is holding her hair. She is wearing a bracelet and sandals. Behind them, there is a grassy area with some chairs, a ladder, and a building. In the distance, there are trees.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-16

Here's a description of the image:

The image is a black and white, inverted-color photograph, likely from the mid-20th century, showing a relaxed outdoor scene, presumably a camping or vacation setting. The scene features two women. One is seated in a director's chair, wearing a dress, stockings, and what appear to be spectator shoes. She's holding a small item (likely a cigarette or a match) in her fingers. The other woman is standing behind her and attending to the seated woman's hair with what look like scissors. The standing woman wears a top, a dress and a pair of slides, and has a pair of glasses.

In the background, there are two vintage travel trailers. The one to the left has an awning. There's a small picket fence and some basic camping furniture visible as well.

The overall impression is of a casual, leisurely moment, perhaps a haircut session at a campsite. The photograph is in very good condition for its age, and the inversion gives it a slightly dreamlike and vintage feel. The text "31/134" is written on the white border of the photograph. Also, "KODAK SAFETY FILM" is written along the top.

Created by gemini-2.0-flash on 2025-05-16

Here's a description of the image:

The image is an inverted black and white photograph, showing what appears to be two women outdoors in a casual setting. In the foreground, a woman is sitting in a white wooden chair. She is holding a jar or glass in one hand and a small stick or match in the other. A box is resting on her lap. She's wearing shoes, stockings, and a dress or skirt and top. A sweater or jacket is draped over the chair.

Standing behind her is another woman who is in the process of combing the seated woman's hair. She is wearing glasses, a dress, and white shoes.

In the background, there are two trailers or camper vehicles, with what looks like camping furniture and some form of fence around them. The setting appears to be a grassy area with trees in the distance. The image overall has a vintage feel, suggesting it may be an older photograph. The negative effect makes the scene surreal.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-05

The image depicts a scene from what appears to be a camping or trailer park setting. In the foreground, two women are engaged in an activity. One woman is seated on a wooden chair, holding a cigarette in one hand and a small object in the other. She is wearing a dress and appears to be having her hair styled or set by the other woman, who is standing behind her. The standing woman is using a hairdryer, which is an older model, indicating that the photograph is likely from the mid-20th century.

The seated woman has curlers in her hair, suggesting that she is getting a perm or setting her hair. On the ground near the chair, there is a pair of shoes, a towel, and a small suitcase or bag, which might contain hairdressing tools or personal items.

In the background, there are several trailers or caravans, indicating that this is a mobile home or trailer park. The area is grassy, and there are a few folding chairs and other camping paraphernalia scattered around. The overall atmosphere suggests a casual, outdoor setting, possibly during a leisurely vacation or a stay at a trailer park. The image captures a moment of personal grooming and social interaction in a relaxed environment.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-06

This is a black-and-white photograph depicting an outdoor scene. It shows two individuals engaged in a grooming activity. The person standing appears to be styling or cutting the hair of the seated individual, who is holding a drink and a book, suggesting a relaxed atmosphere. The setting appears to be a campsite or recreational area, as there are trailers and camping equipment visible in the background. The individuals are dressed in attire that would be suitable for a leisurely vacation or camping trip. The photograph has a vintage feel, possibly from the mid-20th century, based on the style of clothing and the quality of the image.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-06

This black-and-white photograph captures an outdoor scene, likely from a mid-20th century setting. A woman is seated in a director's chair, appearing to be getting her hair styled. She is holding a cigarette in one hand and a bottle in the other, with her feet propped up on the chair's footrest. A jacket is draped over the arm of the chair.

Standing behind her is another woman, who appears to be a hairdresser, styling the seated woman's hair. The hairdresser is wearing sunglasses and an apron, and she is holding a comb or similar styling tool. In the background, there are two vintage trailers or RVs, a picnic table, and some other outdoor furniture, suggesting a camping or recreational area. The ground is covered with what appears to be mulch or wood chips. The overall atmosphere is casual and relaxed.