Machine-Generated Data

Tags

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 23-33 |
| Gender | Female, 87.3% |
| Happy | 87% |
| Calm | 7.2% |
| Surprised | 4.3% |
| Sad | 0.5% |
| Fear | 0.5% |
| Disgusted | 0.2% |
| Confused | 0.1% |
| Angry | 0.1% |

Feature analysis

Amazon

Clarifai

AWS Rekognition

| Feature | Confidence |
|---|---|
| Person | 99.8% |

Categories

Imagga

Created on 2022-01-09

| Category | Confidence |
|---|---|
| paintings art | 51.4% |
| streetview architecture | 47.3% |

Captions

Microsoft

Created by unknown on 2022-01-09

| Caption | Confidence |
|---|---|
| a group of people posing for a photo | 89.8% |
| a group of people posing for a picture | 89.7% |
| a group of people posing for the camera | 89.6% |

Salesforce

Created by general-english-image-caption-blip on 2025-05-22

a photograph of a man and woman are holding baseball bats

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-17

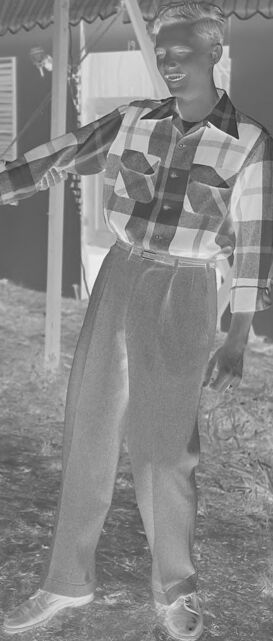

The image depicts two individuals in an outdoor setting with signage in the background that reads "GIRLESK" and "Singapore S...". They appear to be at a fair or carnival-like venue, featuring buildings with scalloped roofing, banners, and poles. The person on the left is dressed in a sleeveless top and a knee-length skirt, while the person on the right wears a plaid shirt and trousers. The ground consists of grass, suggesting an open or park-like area.

Created by gpt-4o-2024-08-06 on 2025-06-17

This is a black and white photograph of two individuals standing in an outdoor setting, possibly at a fair or carnival. The photo is a negative, which means the dark and light areas are inverted. The person on the left is wearing a sleeveless top and a knee-length skirt, while the person on the right is wearing a long-sleeved checkered shirt and trousers. They appear to be playfully interacting, with the person on the left holding the arm of the person on the right. In the background, there are signs that read "GIRLESK" and "SINGAPORE S...", indicating they might be near attractions or booths with themed entertainment. The ground beneath them is grassy, and there are structures resembling tents or temporary buildings behind them.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-19

The image shows two people, a woman and a man, standing together in front of a carnival or fair setting. The woman is wearing a long dress and the man is wearing a checkered shirt and pants. They appear to be holding hands and smiling, suggesting they are enjoying themselves at the carnival. In the background, there is a sign that says "Girlesque", indicating this is likely some kind of entertainment or attraction at the carnival. The overall scene depicts a lighthearted, festive atmosphere.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-19

This appears to be a vintage black and white photograph taken at what looks like a fairground or carnival, as indicated by the "GIRLES" sign visible in the background. Two people are shown in casual 1950s-style clothing - one wearing a dark sleeveless top with a light-colored skirt, and the other in a plaid button-up shirt with loose-fitting trousers. They appear to be in a playful pose, holding hands and leaning away from each other in a fun, dynamic stance. The setting appears to be outdoors on bare ground, with some carnival structures and awnings visible in the background. The image has the characteristic look of mid-20th century photography.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-09

The image depicts a black-and-white photograph of a man and woman standing in front of a sign that reads "GIRLESK" and another sign that reads "SINGAPORE SALES".

The woman is wearing a sleeveless top, a long skirt, and flat shoes. She has short hair and is holding the man's hand with her right hand. The man is wearing a plaid shirt, dark pants, and sneakers. He has short hair and is smiling.

The background of the image shows a grassy area with several signs and tents. The overall atmosphere of the image suggests that it was taken at some kind of event or festival, possibly in the 1950s or 1960s based on the clothing and hairstyles of the people in the photo.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-09

This image is a black-and-white photograph of a man and woman dancing in front of a sign that reads "GIRLESK" and "SINGAPORE SAFETY." The woman, positioned on the left, has short blonde hair and wears a dark sleeveless top paired with a light-colored skirt. Her right arm is extended, holding the man's left hand, while her left arm is bent at the elbow, with her hand grasping his right arm. The man, standing on the right, has short dark hair and is dressed in a plaid shirt, dark pants, and shoes. His right arm is extended, holding the woman's left hand, and his left arm is bent at the elbow, with his hand grasping her right arm.

In the background, there are several signs, including one that reads "SINGAPORE SAFETY" and another that appears to be a directional sign. The overall atmosphere suggests a casual, outdoor setting, possibly at a fair or festival.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-08

The image is a black-and-white photograph of a man and a woman standing outside. They are both smiling and appear to be posing for a photograph. The man is wearing a checkered shirt and pants, while the woman is wearing a sleeveless top and a skirt. They are standing in front of a sign that reads "GIRLESK" and "SINGAPORE SA". Behind them is a tent with a sign that reads "SINGAPORE SA". The image has a watermark on the top left corner that reads "2015-03-01".

Created by amazon.nova-pro-v1:0 on 2025-06-08

The black and white image features a man and a woman standing in front of a building, possibly a circus tent. The woman is wearing a sleeveless blouse and a skirt, while the man is wearing a checkered polo shirt, pants, and shoes. They are holding hands and smiling. Behind them are some signs, one of which reads "GIRLS." There is a flag on the left side of the image.

Google Gemini

Created by gemini-2.0-flash on 2025-05-17

Here is a description of the image:

This is a black-and-white photographic negative depicting a man and a woman holding hands and posing in front of booths at what appears to be a fair or carnival.

The woman is on the left, wearing a sleeveless top, a knee-length skirt, and flat shoes. Her hair is styled in waves. The man on the right wears a patterned, long-sleeved shirt, slacks, and shoes. They are holding hands between them, almost as if dancing.

Behind them are several booths with signs. One sign reads "SINGAPORE SOCKS," another reads "GIRLESK," and a third reads "SINGAPORE SA." The booths have simple awning structures.

The photograph has the typical black-and-white inverted tones of a negative, and along the borders, there are markings and perforations, indicating that this is a film negative. The number "34624" is visible in the lower right corner, likely a serial number or cataloging mark.

Created by gemini-2.0-flash-lite on 2025-05-17

Here's a description of the image:

Overall Impression:

The image is a negative, meaning the colors are inverted. It appears to be a vintage photograph taken outdoors, likely at some kind of fair or carnival. The main focus is on a couple holding hands and posing in front of what seem to be vendor booths.

People:

- Couple: A man and a woman are the primary subjects. They are facing towards the camera and holding each other's hands.

- Woman: She's wearing a sleeveless top and a long, light-colored skirt. Her hair is styled in a vintage wave.

- Man: He's in a plaid button-down shirt, pants, and shoes.

- They seem to be smiling.

Background:

- Vendor Booths: Behind the couple, there are several vendor booths. Signage above the booths is visible. The sign reads, "GIRLESK" and "SINGAPORE SALE" and "SINGAPORE SOCKS".

- Setting: The background is slightly blurred, but it appears to be a grassy or dirt ground.

Other Details:

- Negative: The image is a negative, so the tones are inverted.

- Frame: The image includes the borders of the film negative, including the punch holes on the left side.

- Text: Text can be seen along the top, indicating that the film is Kodak Safety film, and the bottom right corner of the image has the number "34624."

Possible Context:

The scene suggests a fun, casual setting like a fair or carnival. It's likely a moment captured during a day out at the event.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-02

The image is a black and white photograph depicting two people posing outdoors in front of a market stall. The stall has signs that read "Singapore Shoes" and "Girlesk" (possibly a misspelling of "Girlish"). The two individuals are standing close together, each holding one end of a long object, possibly a stick or a pole, which they are both grasping with both hands.

The person on the left is wearing a sleeveless top with a pattern on the left side and a light-colored skirt. The person on the right is dressed in a plaid shirt and dark pants. Both individuals are wearing shoes and appear to be smiling or laughing. The background includes other market stalls with visible signage and a grassy area. The photograph has a vintage appearance, indicated by the style of clothing and the quality of the image. The photograph is marked with "KODAK SAFETY" and has a series of numbers along the edges, typical of film negatives.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-04

This is a black-and-white photograph of two individuals engaged in a playful activity. The setting appears to be an outdoor event or fair, as indicated by the signs reading "GIRLESK" and "SINGAPORE SACKS" in the background. The person on the left is wearing a sleeveless top and a knee-length skirt, while the person on the right is dressed in a plaid shirt and trousers. Both individuals are smiling and appear to be participating in a sack race or a similar game, as suggested by the sign for "SINGAPORE SACKS." The atmosphere seems festive and lighthearted, and the event appears to be held at night.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-04

This black-and-white photograph depicts two women engaging in a lively interaction outdoors. The woman on the left is dressed in a sleeveless top and a knee-length skirt, with her hair styled in a bun. She appears to be pulling or leading the other woman by the hand. The woman on the right is wearing a plaid shirt with rolled-up sleeves and loose-fitting pants. She has short, styled hair and is smiling as she walks along. The background features a structure with signs reading "Singapore Sling" and "Girl Esk," suggesting a carnival or fair setting with food or beverage stands. The scene is set on a grassy area, and the overall atmosphere is cheerful and casual. The photograph includes perforations along the left edge, indicating it is a film strip image.