Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 12-20 |
| Gender | Female, 88.2% |
| Happy | 89.8% |
| Sad | 2.9% |
| Calm | 2.7% |
| Confused | 1.9% |
| Disgusted | 0.9% |
| Fear | 0.7% |
| Angry | 0.7% |
| Surprised | 0.5% |

Feature analysis

Amazon

AWS Rekognition

| Feature | Confidence |
|---|---|
| Person | 98.7% |

Categories

Imagga

Created on 2022-01-09

| Category | Confidence |
|---|---|
| paintings art | 54.2% |
| people portraits | 35.7% |
| streetview architecture | 5.7% |

Captions

Microsoft

Created by unknown on 2022-01-09

| Caption | Confidence |
|---|---|
| a group of people around each other | 77% |
| a group of people sitting on the ground | 76.9% |
| a group of people sitting at a table | 70.5% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-14

a group of women in a park.

Salesforce

Created by general-english-image-caption-blip on 2025-05-20

a photograph of a group of young girls sitting on a field

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-10

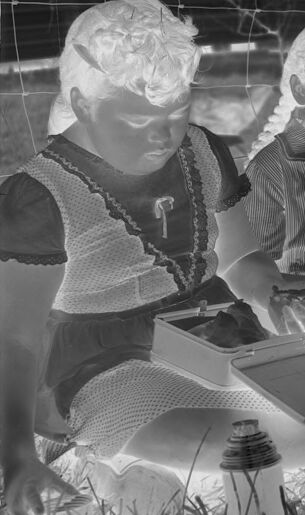

The image appears to be a black-and-white negative photograph of a group of children sitting outdoors on the grass. They seem to be enjoying a picnic or snack, with lunchboxes and beverages visible beside them. The children are dressed in mid-20th-century attire, including patterned dresses and shoes. The setting suggests a casual, pleasant gathering, possibly at a park or an outdoor event.

Created by gpt-4o-2024-08-06 on 2025-06-10

This image is in negative format and shows a group of children sitting on the grass. They appear to be having a picnic or lunch outdoors. Each child is holding or has a lunchbox in front of them, and there are various items like bottles and cups visible. The style of the children's clothes suggests the image might be from the mid-20th century. The scene is set outdoors in what seems to be a sunny location, with grass covering the ground and a building or structure visible in the background.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-15

The image appears to depict a group of mannequins or doll-like figures sitting in a grassy outdoor setting. The figures are arranged in a disorganized manner, with some lying down and others sitting upright. They seem to be interacting with various objects, including what appears to be a framed image or screen. The overall scene has a surreal and unsettling quality, with the figures appearing lifeless and disconnected from their surroundings.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-15

This appears to be a vintage black and white photograph that shows a group of children sitting in what looks like a row or line. The image has some damage or deterioration, visible through cracking and distortion patterns across its surface. The children are wearing casual clothing typical of mid-20th century style, with textured or knitted tops visible on some of them. The bottom portion of the image shows some grass or foliage. The photograph has a somewhat ethereal quality due to its aged condition and the way the damage creates an interesting layered effect over the original image.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-09

The image is a black-and-white photograph of a group of people sitting on the ground, surrounded by grass. The photo appears to be a negative, with the image inverted and the background appearing lighter than the foreground.

In the foreground, there are several people sitting on the ground, some of whom are holding objects such as cameras or books. The people are dressed in casual clothing, and some of them have their legs crossed or are sitting with their feet tucked under them. The grass in the foreground is long and unkempt, and it appears to be a sunny day.

In the background, there is a net or a fence that separates the group from the rest of the area. Beyond the net, there are trees and other objects that are not clearly visible due to the inversion of the image.

Overall, the image suggests that the group is engaged in some kind of outdoor activity or picnic, and they are enjoying each other's company in a relaxed setting.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-09

The image is a black and white photograph of five children sitting on the grass, eating from lunchboxes. The children are all wearing casual clothing, with the two girls on the left wearing dresses and the three boys on the right wearing shirts and pants. They are all holding cups and appear to be drinking from them.

In the foreground, there is a patch of grass that fills the bottom half of the image. The background is out of focus, but it appears to be a building or structure of some kind. The overall atmosphere of the image suggests a carefree and relaxed scene, with the children enjoying their meal together in a peaceful outdoor setting.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-05

The image is a black-and-white photograph of a group of children sitting on the grass, possibly in a park or a backyard. The children are wearing old-fashioned clothing, and some of them have their faces covered with white powder. The children are sitting in a row, and some of them are holding bottles and other objects. The image has a blurry effect, and there is a watermark on the right side of the image.

Created by amazon.nova-pro-v1:0 on 2025-06-05

A black-and-white photo shows a group of children sitting on the grass. They are all smiling and appear to be enjoying themselves. The child on the left is wearing a dress and has a box in front of her. The child in the middle is holding a cup, and the child next to her is holding a bottle. The child on the right is holding a cup and a bottle. Behind them is a fence.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-02

Certainly! Here's a description of the image:

Overall Impression:

The image is a black and white negative depicting a group of children. It appears to be taken outdoors, possibly during a picnic or break.

Specific Details:

- Subjects: There are several children, all seemingly around the same age, likely girls. They're all seated on the ground.

- Activities: The children are eating. Many of them are holding food items or have open lunchboxes/containers.

- Setting: They are sitting on a patch of grass. There is a fence or netting in the background, which may suggest a play area or recreational space. The presence of lunchboxes and containers indicates a meal or snack time.

- Aesthetics: The image has a nostalgic quality due to the negative film and the vintage clothing and setting.

Let me know if you'd like a more detailed description of any particular element!

Created by gemini-2.0-flash on 2025-05-02

Here's a description of the image you sent:

The image appears to be an inverted black and white photograph (or a photographic negative) showing a group of five or six young people sitting on the grass. They appear to be having a picnic. They are positioned close to each other, and many seem to be eating or drinking from cups. Several of the children have lunchboxes near them.

A net or fence is visible in the background. The ground is covered in grass. The image has a "KODAK SAFETY" strip on the side, a characteristic of older photographic film.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-02

The image appears to be a black-and-white photograph showing a group of people sitting on the hood and roof of a car. The scene seems to be outdoors, with tall grass in the foreground. The individuals in the photo are casually dressed, suggesting a relaxed or informal setting.

Here are some detailed observations:

- People: There are six individuals visible in the image. They appear to be engaged in conversation or enjoying a moment together.

  - The person on the far left is wearing a patterned shirt and has short, curly hair.

  - The second person from the left is wearing a striped shirt and has short hair.

  - The third person, a woman, is wearing a dark top and has her hand near her face, possibly adjusting her hair or shielding her eyes.

  - The fourth person is wearing a light-colored shirt and has short hair.

  - The fifth person is wearing a light-colored shirt and appears to be holding something in their hand.

  - The sixth person, on the far right, is wearing a light-colored shirt and has short hair.

- Car: The car they are sitting on appears to be a vintage model, possibly from the mid-20th century. The hood is open, and some of the individuals are sitting on it.

- Setting: The background is not clearly visible, but the presence of tall grass suggests an outdoor, possibly rural or suburban setting.

- Atmosphere: The overall atmosphere of the image is casual and relaxed, indicating a social gathering or a moment of leisure.

The photograph has a nostalgic feel, possibly due to its black-and-white nature and the vintage car, suggesting it might have been taken several decades ago.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-10

This is a black-and-white photograph of a group of children sitting on the grass, seemingly engaged in an outdoor activity. Each child has a small table or tray in front of them, and some are eating or drinking from containers. The setting appears to be a casual, picnic-like scenario. The children are wearing casual clothing, and the image has a vintage quality, suggesting it might have been taken several decades ago. The background is slightly out of focus, which brings the attention to the children and their activities.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-10

This black-and-white image shows a group of children sitting on the grass, enjoying a picnic. They are dressed in school uniforms and appear to be eating from lunchboxes. The children are sitting in a row, and some are holding cups or other items. The image has a vintage feel, and there are water bottles and other picnic items scattered around them. The photo also has a ghostly or double-exposure effect, giving it a surreal quality.