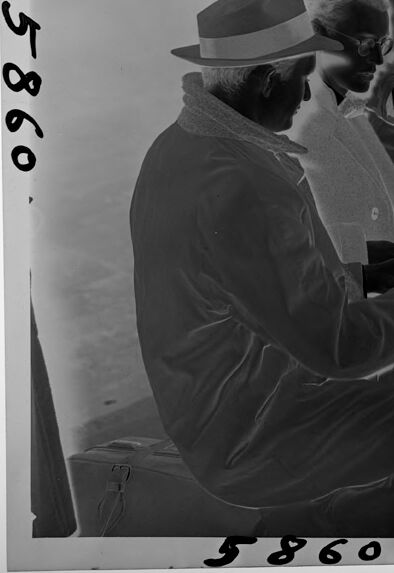

Machine Generated Data

Tags

Amazon

created on 2022-01-08

Clarifai

created on 2023-10-25

| Tag | Confidence |
|---|---|
| people | 99.8 |
| man | 98.8 |
| group | 98.6 |
| monochrome | 97.9 |
| group together | 97.5 |
| adult | 97.1 |
| street | 89.6 |
| woman | 87.1 |
| sitting | 86.1 |
| three | 84.5 |
| journalist | 83.4 |
| several | 81.6 |
| sit | 80.8 |
| leader | 80.2 |
| administration | 80.1 |
| child | 79.4 |
| meeting | 78 |
| police | 77.9 |
| four | 76.9 |
| chair | 76.7 |

Imagga

created on 2022-01-08

| Tag | Confidence |
|---|---|
| man | 49 |
| person | 39.6 |
| surgeon | 36.7 |
| male | 34 |
| people | 32.9 |
| office | 30.8 |
| computer | 30 |
| business | 27.9 |
| working | 27.4 |
| businessman | 27.4 |
| laptop | 26.7 |
| work | 25.9 |
| worker | 21.3 |
| executive | 21.1 |
| adult | 20.6 |
| job | 18.6 |
| professional | 18.3 |
| indoors | 16.7 |
| corporate | 16.3 |
| sitting | 16.3 |
| engineer | 16 |
| men | 15.4 |
| desk | 15.3 |
| suit | 14.5 |
| meeting | 14.1 |
| portrait | 13.6 |
| home | 13.6 |
| room | 13.4 |
| keyboard | 13.1 |
| senior | 13.1 |
| table | 13.1 |
| happy | 11.9 |
| team | 11.6 |
| businesspeople | 11.4 |
| looking | 11.2 |
| smiling | 10.8 |
| together | 10.5 |
| teacher | 10.4 |
| notebook | 10.4 |
| glasses | 10.2 |
| baron | 10.1 |
| occupation | 10.1 |
| group | 9.7 |
| technology | 9.6 |
| retirement | 9.6 |
| education | 9.5 |
| lifestyle | 9.4 |
| manager | 9.3 |
| mature | 9.3 |
| teamwork | 9.3 |
| communication | 9.2 |
| face | 9.2 |
| businesswoman | 9.1 |
| mask | 8.8 |
| jacket | 8.8 |
| businessmen | 8.8 |
| colleagues | 8.7 |
| elderly | 8.6 |
| reading | 8.6 |
| adults | 8.5 |
| career | 8.5 |
| sit | 8.5 |
| black | 8.4 |
| monitor | 8.4 |
| color | 8.3 |
| camera | 8.3 |
| helmet | 8.3 |
| retired | 7.8 |
| industry | 7.7 |
| employee | 7.6 |
| casual | 7.6 |
| studio | 7.6 |
| hand | 7.6 |
| tie | 7.6 |
| industrial | 7.3 |
| planner | 7.2 |
| handsome | 7.1 |
| equipment | 7.1 |
| hat | 7 |

Google

created on 2022-01-08

| Tag | Confidence |
|---|---|
| Outerwear | 95.6 |
| Hat | 92 |
| Coat | 89.8 |
| Cap | 85.3 |
| Sleeve | 76.1 |
| Sun hat | 75.3 |
| Snapshot | 74.3 |
| Personal protective equipment | 73.2 |
| Crew | 71.8 |
| Fedora | 70.7 |
| Monochrome | 68.4 |
| Font | 67.4 |
| Vintage clothing | 66.3 |
| Service | 65.8 |
| Stock photography | 65.3 |
| History | 64.7 |
| Art | 64.3 |
| Baseball cap | 63.5 |
| Suit | 57.4 |
| Monochrome photography | 57.4 |

Microsoft

created on 2022-01-08

| Tag | Confidence |
|---|---|
| person | 99.6 |
| text | 91.9 |
| black and white | 91.5 |
| outdoor | 90.6 |
| clothing | 81.2 |
| helmet | 76.6 |
| monochrome | 64.1 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 35-43 |
| Gender | Female, 53.2% |
| Sad | 89% |
| Calm | 6.8% |
| Happy | 1.5% |
| Fear | 0.8% |
| Angry | 0.8% |
| Confused | 0.6% |
| Disgusted | 0.3% |
| Surprised | 0.2% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 37-45 |
| Gender | Female, 76.8% |
| Calm | 57.5% |
| Sad | 33.2% |
| Surprised | 3.9% |
| Disgusted | 1.5% |
| Fear | 1.4% |
| Confused | 1% |
| Angry | 0.7% |
| Happy | 0.7% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 24-34 |
| Gender | Male, 95.4% |
| Calm | 53.1% |
| Sad | 43.5% |
| Happy | 2% |
| Disgusted | 0.5% |
| Angry | 0.4% |
| Confused | 0.3% |
| Fear | 0.2% |
| Surprised | 0.1% |

Feature analysis

Categories

Imagga

| Category | Score |
|---|---|
| events parties | 52.2% |
| people portraits | 19% |
| nature landscape | 10.9% |
| streetview architecture | 10.1% |
| pets animals | 2.2% |
| food drinks | 2.1% |
| paintings art | 2% |

Captions

Microsoft

created on 2022-01-08

| Caption | Confidence |
|---|---|
| a group of people sitting at a train station | 58.9% |
| a group of people looking at a phone | 58.8% |
| a group of people sitting at a train station looking at the camera | 53.5% |

Text analysis

Amazon

5860

5860

5860

5860