Machine-Generated Data

Tags

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 33-41 |
| Gender | Male, 84.9% |
| Calm | 95.6% |
| Sad | 3.2% |
| Surprised | 0.6% |
| Disgusted | 0.2% |
| Confused | 0.1% |
| Fear | 0.1% |
| Happy | 0.1% |
| Angry | 0.1% |

Feature analysis

Amazon

Clarifai

AWS Rekognition

| Label | Confidence |
|---|---|
| Person | 98.6% |

Categories

Imagga

Created on 2022-01-08

| Category | Confidence |
|---|---|
| interior objects | 98.3% |
| streetview architecture | 1.6% |

Captions

Microsoft

Created by unknown on 2022-01-08

| Caption | Confidence |
|---|---|
| a group of people in a room | 83.9% |
| a group of people standing in a room | 78.5% |
| a group of people posing for a photo | 59.1% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-14

a photograph of a family.

Salesforce

Created by general-english-image-caption-blip on 2025-05-22

a photograph of a woman in a white dress and a dog on a checkered floor

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-12

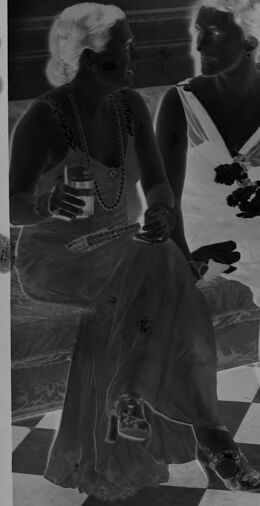

The image features a formal and elegant setting with four individuals dressed in fine attire, holding drinks, and engaged in conversation. The group is situated on and around a decorative sofa in a room with a checkered floor pattern, which creates a stylish ambiance. A seated dog is included in the foreground, adding a unique element to the scene. The overall composition suggests a refined social gathering or event.

Created by gpt-4o-2024-08-06 on 2025-06-12

The image is a photographic negative depicting a group of elegantly dressed people gathered indoors. There are four adults visible, with three seated on a patterned sofa and one standing to the right. The people are dressed in formal attire, with the women wearing gowns and the man in a suit. They are engaged in conversation while holding drinks. The setting appears to be a sophisticated interior space, featuring a black and white checkered floor. Additionally, there is a dog lying on the floor in the foreground. The image has a vintage feel, likely dating back several decades.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-19

The image appears to depict a formal social gathering or event, likely from an earlier era based on the attire and setting. It shows several individuals, including two women in formal dresses and a man in a white suit, standing in a room with a checkered floor. There are also what appear to be animal pelts or rugs on the floor. The overall scene conveys a sense of elegance and sophistication characteristic of a high-society gathering or event from the past.

Created by us.anthropic.claude-3-opus-20240229-v1:0 on 2025-06-19

The black and white photograph shows a group of well-dressed people, likely at a formal event or party. The image is taken from above, looking down at the checkered floor where the people are standing and a dog is sitting.

The people are wearing elegant evening attire typical of an earlier era, with the women in long gowns and the men in tuxedos. They appear to be conversing and mingling.

In the foreground, a small fluffy dog, possibly a poodle or similar breed, is sitting and looking up towards the camera. The contrast of the formally dressed partygoers and the dog adds an amusing and whimsical element to the composition.

The setting has decorative wall panels and curtains visible, suggesting it may be taking place in an upscale home or venue. The angled overhead perspective and vintage clothing give the image an artistic, nostalgic quality capturing a snapshot of a bygone social gathering.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-19

This appears to be a vintage black and white photograph taken in what looks like an elegant interior setting. The scene shows several people in formal attire - women in evening dresses and a man in a white dinner jacket - gathered on a distinctive checkerboard floor. What makes the image particularly interesting is the presence of what appears to be a dog lying on the checkered floor. The interior appears to be quite upscale, with decorative elements visible in the background. The contrast between the black and white tiles creates a striking geometric pattern that draws the eye. The photograph has a classic, possibly 1920s or 1930s feel to it, based on the style of dress and interior design elements visible.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-05

The image is a black-and-white photograph of a group of people and a dog in a room with a checkered floor. The image is likely from the 1920s or 1930s, based on the clothing and hairstyles of the individuals depicted.

- A group of people:
  - There are five people in the image, all dressed in formal attire.
  - The woman on the left is wearing a long dress and has her hair styled in an updo.
  - The woman in the middle is also wearing a long dress and has her hair styled in an updo.
  - The man on the right is wearing a suit and tie, and has his hair styled in a pompadour.
  - The two women in the background are both wearing dresses and have their hair styled in buns.

- A dog:
  - There is a small dog in the foreground of the image, walking towards the camera.
  - The dog appears to be a terrier mix, with a short, smooth coat and floppy ears.

- A room with a checkered floor:
  - The room has a checkered floor, with alternating black and white squares.
  - The walls of the room are dark-colored, and there are several pieces of furniture visible, including a couch and a chair.
  - The overall atmosphere of the image is one of elegance and sophistication, suggesting that the individuals depicted are from a wealthy or upper-class background.

Overall, the image appears to be a formal portrait of a group of people and their dog, taken in a luxurious setting. The clothing and hairstyles of the individuals suggest that the image was taken in the early 20th century, likely in the 1920s or 1930s.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-05

The image is a black-and-white photograph of four people and a dog in a room with a checkered floor. The individuals are dressed in formal attire, with the two women on the left wearing long dresses and the man on the right wearing a white suit. The woman in the middle is also wearing a long dress. The dog is lying on the floor between the man and the woman in the middle.

The background of the image features a wall with a door and a window, as well as a checkered floor that adds to the formal atmosphere of the scene. Overall, the image appears to be a vintage photograph from the early 20th century, possibly taken at a social event or gathering.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-03

The image is a black-and-white photograph featuring a group of people gathered in a room. The setting appears to be a formal event, possibly a party or social gathering. The people are dressed in elegant attire, with the woman on the left wearing a dress and holding a drink, and the man in the center wearing a suit and bow tie. The woman on the right is holding a dog, which is lying on the floor. The floor is covered with a checkered pattern, and there is a door in the background. The image has a vintage feel, with a watermark in the bottom right corner.

Created by amazon.nova-pro-v1:0 on 2025-06-03

The image is a black-and-white photograph of a group of people in a room. The room has a checkered floor, and the people are dressed in formal attire. There are three women and one man in the photo. Two women are sitting on a couch, one of whom is holding a cup, and the other is holding a bouquet of flowers. The other woman is standing next to the man, who is wearing a bow tie and holding a cup. There is a dog in front of the couch.

Google Gemini

Created by gemini-2.0-flash on 2025-05-17

Here is a description of the image:

This black-and-white photo presents a scene of what appears to be a gathering or party, possibly from the early to mid-20th century. The floor is a striking checkerboard pattern of dark and light squares.

Two women are seated on a patterned sofa or armchair. They are holding glasses, and both appear to be dressed in elegant gowns. One woman is wearing a necklace.

A third woman is standing and appears to be engaged in conversation with a man. The man is wearing a suit with a bow tie, and he also holds a glass in his hand.

In the foreground, a dog is lying on the checkerboard floor.

The lighting is somewhat soft and even, which helps to bring out the details of the people's clothing and faces. The style and setting suggest a formal or semi-formal occasion.

Created by gemini-2.0-flash-lite on 2025-05-17

Certainly! Here's a description of the image:

Overall Impression

The image is a black and white negative, likely from a photograph taken in a vintage style. It depicts a group of people in what appears to be a social setting, perhaps a gathering or party.

Key Elements:

People: There are four people visible. Two women are seated, one on a sofa. Another woman and a man stand in the background. The man wears a white suit and a bow tie. The people are casually dressed in formal attire.

Dog: A dog is positioned in the foreground, on the checkered floor.

Setting: The scene takes place indoors. There's a checkered floor and a doorway. The background provides a sense of an elegant space.

Atmosphere: The overall feeling is one of an elegant, social scene from the past.

Additional details

The photo is in negative format; as a result, the colours are inverted.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-02

The image appears to be a vintage black-and-white photograph, likely from the early to mid-20th century, depicting a group of people in formal attire at a social gathering or party. The scene takes place indoors, on a checkered floor, which adds a sense of elegance and formality to the setting.

Here are the details of the image:

People and Attire:

- There are four individuals visible in the photograph.

- Three women are dressed in elegant evening gowns, and one man is wearing a white suit with a black bow tie.

- The women have short, styled hair, which was fashionable during the 1920s and 1930s.

- The man is holding a drink in his hand and appears to be in conversation with one of the women.

Setting:

- The room has a checkered floor with black and white tiles, which is a distinctive feature of the setting.

- There is an armchair in the background, suggesting a comfortable and possibly luxurious environment.

- The room appears to be well-lit, with light coming from an unseen source, possibly a chandelier or other overhead lighting.

Activities:

- The individuals seem to be engaged in a social interaction, possibly at a cocktail party or a similar event.

- One woman is seated on the armchair, holding a drink and a cigarette, indicating a relaxed and leisurely atmosphere.

- Another woman is standing near the seated woman, also holding a drink.

- The man is standing and conversing with one of the women, adding to the social ambiance of the scene.

Additional Elements:

- There is a small dog lying on the floor, which adds a touch of domesticity and warmth to the otherwise formal setting.

- The photograph has some visible damage or wear, such as scratches and marks, indicating its age and possible historical significance.

Overall, the image captures a moment of social elegance and interaction, providing a glimpse into the fashion and lifestyle of a bygone era.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-10

The image is a black-and-white photograph featuring four individuals in what appears to be a formal or elegant setting, possibly a historical indoor event or gathering. The floor has a checkered pattern, and the walls are adorned with wooden paneling.

Foreground and Floor: The checkered floor is prominent, with a dog on a leash lying down in the center of the image. The dog appears to be wearing a collar and possibly some kind of vest or harness.

Left Side: Two women are seated on a couch. Both are dressed in dresses that suggest a mid-20th-century fashion style, possibly from the 1930s or 1940s. They are holding drinks and appear to be conversing. One of the women has her hair styled with what looks like a headpiece or hairstyle from that era, and she is wearing pearl necklaces.

Background: A man dressed in a formal white suit is standing and holding a drink. He is positioned behind the seated women and near a doorway. His attire and posture suggest he may be a waiter or butler.

The overall atmosphere of the image conveys a sense of elegance and formality, with the clothing and setting reflecting a past era. The lighting and composition give the photograph a classic, vintage feel.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-10

This black-and-white photograph captures a scene in an elegant interior setting with a checkered floor. There are four individuals and a dog in the image. Three women are seated on a sofa, each holding a glass, and they appear to be engaged in conversation. They are dressed in formal attire, with one of them wearing a necklace. A man in a white suit stands behind them, holding a tray with glasses, suggesting he is serving them. The dog is lying on the floor in the foreground, looking up. The photograph has a vintage feel, with some imperfections and markings on the edges, indicating it might be an old photograph or a print. The number "6912" is visible in the bottom corners of the image.