Machine Generated Data

Tags

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 31-41 |
| Gender | Male, 99.9% |
| Calm | 76.5% |
| Happy | 18.3% |
| Sad | 2.3% |
| Confused | 1% |
| Surprised | 0.8% |
| Angry | 0.8% |
| Disgusted | 0.2% |
| Fear | 0.1% |

Feature analysis

Amazon

Clarifai

AWS Rekognition

| Feature | Confidence |
|---|---|
| Person | 99.2% |

Categories

Imagga

Created on 2022-01-08

| Category | Confidence |
|---|---|
| streetview architecture | 91.5% |
| beaches seaside | 2.6% |
| paintings art | 1.9% |
| nature landscape | 1.1% |

Captions

Microsoft

Created by unknown on 2022-01-08

| Caption | Confidence |
|---|---|
| a group of people standing in front of a crowd | 79.3% |
| a group of people standing in front of a building | 79.2% |
| a group of people in front of a building | 79.1% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-14

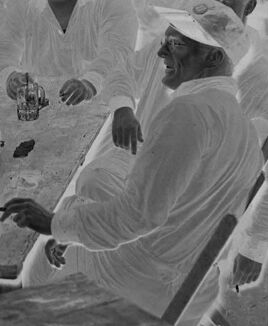

a group of men and women in white robes, sitting on a chair, drinking tea and talking.

Salesforce

Created by general-english-image-caption-blip on 2025-05-20

a photograph of a group of people sitting around a table

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-09

This is a black-and-white negative image depicting a group of people seated around a table inside a tent. The individuals appear to be wearing uniform-like clothing with hats, suggesting a possible military or organized setting. The table has drinking glasses and small items, potentially indicating a casual or social gathering. Behind them are wicker chairs and the striped fabric of the tent draping across the background. One person is seen reclining and lifting a leg in a playful or relaxed gesture.

Created by gpt-4o-2024-08-06 on 2025-06-09

The image is a negative of a photograph depicting a group of men wearing white uniforms sitting around a long wooden table under a striped tent. The table is scattered with objects, which might be cups or glasses, and one man has his leg raised. Surrounding the group are folding chairs, and the setting appears to be a casual or recreational environment, possibly outdoor given the tent. The men seem to be engaged in a social gathering or moment of leisure.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-14

The image appears to show a group of people gathered around a table in what seems to be a tent or temporary structure. The individuals are all wearing white garments, likely protective clothing, and appear to be engaged in some kind of activity or discussion. The setting suggests this may be a medical or scientific context, though I cannot identify any specific individuals in the image.

Created by us.anthropic.claude-3-opus-20240229-v1:0 on 2025-06-14

The black and white image shows a group of men, likely medical professionals or researchers, sitting around a table in what appears to be a tent or temporary structure. They are all wearing white coats or protective clothing and some have masks or head coverings on. The table has various objects on it, possibly medical instruments or samples. The men seem to be engaged in some sort of discussion or examination. The striped pattern of the tent walls and ceiling can be seen in the background.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-14

This appears to be a historical black and white photograph showing a group of people gathered around a table, likely in some kind of outdoor or tent setting. They are all wearing white clothing or uniforms and white caps. The setting appears casual, with what looks like beer steins or drinking vessels on the table. Behind them, you can see what appears to be canvas or tent material creating walls or partitions. The furniture includes what appears to be wicker chairs and a simple wooden table. The image has the quality and style typical of early-to-mid 20th century photography.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-05

The image depicts a group of men gathered around a table, likely in a social setting. The men are dressed in white attire, with some wearing hats, and are seated on wooden chairs. The table is made of wood and has several glasses and other items on it.

In the background, there is a striped tent or awning, which suggests that the scene may be taking place outdoors. The overall atmosphere appears to be relaxed and convivial, with the men engaged in conversation and enjoying each other's company.

The image appears to be a historical photograph, possibly from the early 20th century, given the style of clothing and the setting. The image is in black and white, which adds to its nostalgic and timeless quality.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-05

The image depicts a group of men gathered around a table, with some seated and others standing. The men are dressed in white attire, including hats, and appear to be engaged in conversation or possibly playing a game. The table is cluttered with various items, such as mugs and other objects.

In the background, there are several chairs and tables, suggesting that the scene is set in a casual outdoor setting, possibly a picnic or a social gathering. The overall atmosphere of the image is one of camaraderie and relaxation, with the men seeming to enjoy each other's company.

The image has a vintage feel to it, with the black-and-white tone and the style of the clothing and furniture suggesting that it may have been taken in the early 20th century. Overall, the image captures a moment of leisure and socialization among a group of men, and provides a glimpse into a bygone era.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-03

The image is a black-and-white photograph of a group of people sitting around a table. They are all wearing white uniforms, hats, and shoes. The table has cups, glasses, and other items on it. The people are sitting in chairs, and some of them are standing. The image has a watermark on the right side that reads "DELENDEN BY 223." The background of the image is blurry, and it appears to be a tent.

Created by amazon.nova-pro-v1:0 on 2025-06-03

A black-and-white photo of a group of men sitting on chairs around a table, probably in a tent. The men are wearing white hats and white long-sleeved shirts. The man on the left is wearing a belt and holding a shoe. There are cups and other objects on the table. Behind them are chairs and a striped curtain. The photo has a watermark with the text "DEHENDER 2012" on the right side.

Google Gemini

Created by gemini-2.0-flash on 2025-04-30


Overall Impression:

The image appears to be an old black-and-white photograph of a group of men gathered in a tent. The setting seems relaxed and casual.

Details:

Setting: The scene takes place inside a tent with a striped canvas overhead.

People: There are approximately 13 men in the picture. Most of them appear to be wearing light-colored uniforms or clothing and caps. They are seated around tables.

Activities: Some of the men are seated at a large wooden table, and some appear to be drinking from glasses or mugs. One man in the front row is holding his foot up on the table. One man is smoking a cigar.

Objects: There are glasses, mugs, chairs, tables, and a tent present in the picture.

Style: The image has the distinct characteristics of a vintage photograph.


Created by gemini-2.0-flash-lite on 2025-04-30

This is a black and white image showing a group of men gathered around a table in what appears to be a tent. The men are all wearing white uniforms and baseball caps. They are sitting and standing, and some are holding glasses or mugs, while others have cigars. One man is holding his foot up on the table. There are folding chairs, woven chairs, and a large table. In the background, a striped tent is visible. The image appears to be an older photograph due to the negative film style.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-01

The image depicts a group of people gathered around a table in what appears to be a makeshift or temporary setting, possibly a tent. The individuals are dressed in white clothing and head coverings, which suggests they might be in a medical or sanitary environment. Some of them are wearing masks or have their faces partially covered, and a few are holding objects like bottles or utensils. The scene appears to be from an earlier time period, indicated by the style of clothing and the overall setting.

The group seems to be engaged in a social or communal activity, possibly a meal or a meeting. The atmosphere looks somewhat informal and relaxed, with people sitting and standing around the table. The background shows more people and chairs, indicating that this might be a larger gathering or event. The image has a historical feel to it, and the setting suggests it could be related to a medical camp, field hospital, or similar context.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-06

The image is a black-and-white photograph depicting a group of individuals seated around a table in what appears to be an informal outdoor setting, possibly a tent or a large canopy. The individuals are dressed in light, long-sleeved shirts and shorts, suggesting a warm climate. The table is cluttered with glass tumblers and what looks like a small object, possibly a piece of candy or a small toy, that one of the individuals is holding. The group seems to be engaged in a casual activity, with some laughing and gesturing, creating a relaxed and jovial atmosphere. The background includes additional chairs and a tent-like structure, reinforcing the casual, outdoor setting. The image has a vintage quality, hinting at a historical context.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-06

This image is a black-and-white photograph depicting a group of men seated around a table under a tent with a striped canopy. The men are dressed in white uniforms and caps, suggesting they might be medical personnel or patients. They are smiling and appear to be enjoying a casual moment together. On the table, there are mugs and other items, indicating they might be having a meal or a drink. The setting looks informal and relaxed, with additional chairs and tables visible in the background. The overall atmosphere suggests a camaraderie and a break from routine activities.