Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 45-51 |
| Gender | Female, 91.8% |
| Happy | 99.4% |
| Surprised | 0.1% |
| Confused | 0.1% |
| Sad | 0.1% |
| Calm | 0.1% |
| Disgusted | 0.1% |
| Angry | 0.1% |
| Fear | 0.1% |

Feature analysis

Amazon

Clarifai

AWS Rekognition

| Label | Confidence |
| --- | --- |
| Person | 94.9% |

Categories

Imagga

created on 2022-01-08

| Category | Confidence |
| --- | --- |
| paintings art | 99.3% |

Captions

Microsoft

created by unknown on 2022-01-08

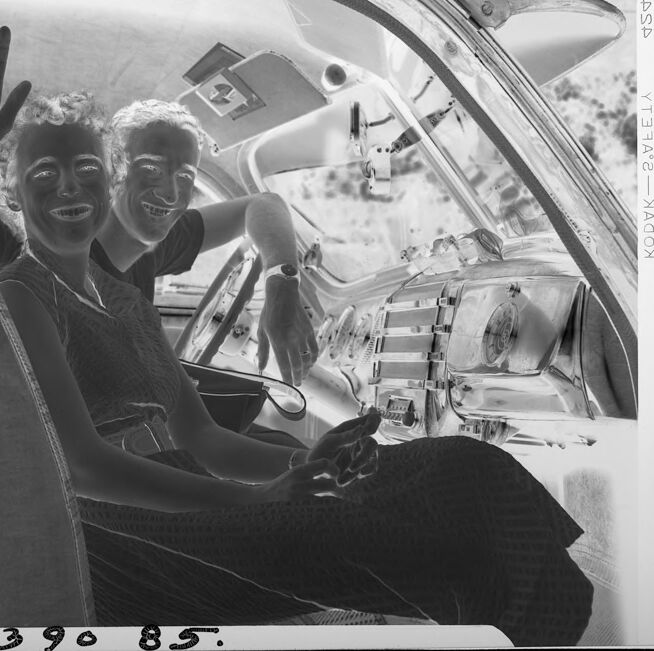

| Caption | Confidence |
| --- | --- |
| a man sitting in a car | 63.4% |
| a man sitting on top of a car | 57.5% |
| a man riding on the back of a car | 48% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-10

actor and his wife, in the back seat of a car.

Salesforce

Created by general-english-image-caption-blip on 2025-05-20

a photograph of a man and woman waving in a car

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-09

This is a high-contrast, black-and-white negative image of two individuals sitting inside a car. The interior of the vehicle is prominently displayed, with visible elements such as the dashboard, steering wheel, and seats. The car’s door is open, revealing part of the exterior environment, and there is a garment hung inside the vehicle near the seat. The scene captures a candid moment, with the individuals engaging in a gesture or interaction. Visible textures and details in the clothing and the vehicle interior add depth to the image. The overall atmosphere is vintage and reflective of a past era.

Created by gpt-4o-2024-08-06 on 2025-06-09

The image is a black and white negative photograph depicting the interior of an older model car. There are two individuals seated inside. The car has a distinctive interior with a classic dashboard featuring round dials and knobs. One of the individuals has their hand raised, and the other is holding something small in their hand. A garment or fabric item is hanging on the back of the seat, visible towards the left side of the frame. The overall composition suggests a candid moment captured inside the vehicle.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-15

The image shows two people, a man and a woman, sitting together in what appears to be the interior of a vehicle. They are both smiling and waving at the camera. The image has a black and white aesthetic, giving it a vintage or nostalgic feel. The vehicle's interior is visible, with various details and equipment visible through the transparent windshield or window.

Created by us.anthropic.claude-3-opus-20240229-v1:0 on 2025-06-15

The black and white photograph shows a smiling young couple sitting together in the front seat of a vintage car, likely from the 1950s or 1960s based on their clothing and hairstyles. The man has his arm around the woman and they both appear happy and carefree. The car's interior is visible, including the steering wheel, dashboard gauges, and bench seat upholstery. Outside the car windows, trees can be seen, suggesting the photo was taken outdoors, possibly on a pleasant drive. The image captures a nostalgic, lighthearted moment between the couple enjoying a ride in their classic automobile.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-15

This is a black and white photograph showing two people sitting in what appears to be a vintage car, likely from around the 1950s or 1960s. One person is waving at the camera while both appear to be smiling. The image is taken through the car's window, showing the interior dashboard and front seats. The photo has a casual, candid quality to it, and there appears to be some clothing hanging in the background of the car. The number "390 85" is visible at the bottom of the photograph, suggesting this might be from an archive or photo collection.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-29

The image is a black-and-white photograph of two women in a car, with one waving at the camera. The photo appears to be from the 1950s or 1960s, based on the style of clothing and the car.

- Two women in a car
  - The woman on the left has curly hair and is wearing a dark dress.
  - She is waving at the camera with her right hand.
  - The woman on the right has short hair and is wearing a light-colored dress.
  - She is leaning forward, looking at the camera with a smile.
- Car interior
  - The car is a convertible, with the top down.
  - The interior is light-colored, with a dashboard and steering wheel visible.
  - There are several objects in the background, including what appears to be a coat or jacket hanging from the back of the seat.
- Background
  - The background is blurry, but it appears to be a sunny day with trees and other foliage visible.
  - There are also some buildings or houses in the distance.

Overall, the image captures a carefree moment of two women enjoying a drive on a nice day. The photo's style and setting suggest that it was taken in the mid-20th century.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-29

This image is a black-and-white photograph of a couple sitting in the front seats of a car, captured from the outside looking in. The woman, positioned on the left, has her right hand raised in a waving gesture. She wears a sleeveless dress and has short, curly hair. The man, seated on the right, has short hair and is dressed in a short-sleeved shirt. He appears to be operating the vehicle.

The car's interior features a steering wheel, dashboard, and windshield. A coat or jacket hangs from a hook on the left side of the image. The background, visible through the windshield, shows trees and bushes, suggesting that the car is parked outdoors.

At the bottom of the image, the numbers "390 85" are handwritten in black ink. Along the right edge, the text "KODAK—SAFETY 424" is printed vertically. The overall atmosphere of the image conveys a sense of happiness and joy, as the couple appears to be enjoying each other's company while driving.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-05-28

The image is a black-and-white photograph of two people sitting inside a car. The car appears to be an older model, possibly from the 1950s or 1960s. The woman is sitting in the front passenger seat, while the man is sitting in the driver's seat. The woman is smiling and waving her hand, while the man is smiling and looking at the camera. The car's interior is visible, including the dashboard and the steering wheel. The image has a vintage look, with a slightly grainy texture and a faded color palette. The image also includes a watermark in the bottom right corner, which reads "390 85" and "KADAD YTEEFFE-KADAD".

Created by amazon.nova-pro-v1:0 on 2025-05-28

The black-and-white photograph features two people sitting inside a car. The woman is sitting on the passenger seat, while the man is sitting behind her. The woman is waving her hand and smiling, while the man is smiling and leaning on the car's door. The car's door is open, and the steering wheel is visible. There is a piece of clothing hanging on the side of the car.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-01

Certainly! Here's a description of the image:

Overall Impression:

The image is a black and white, likely a negative, of two people inside a vintage car. They are both smiling and appear to be enjoying themselves.

Key Details:

- Subject: A man and a woman are the primary subjects. The woman is seated in the driver's seat, while the man is leaning into the car.

- Action: The woman has her arm raised, possibly waving or greeting someone. They both have a happy expression.

- Setting: The scene is inside an older car, with visible dashboard elements, steering wheel, and other interior details. The shape of the car suggests it's from the mid-20th century.

- Composition: The photograph is taken from a perspective that gives a good view of the interior of the car and the people within it.

- Negative Appearance: Since it is a negative, the colors appear inverted (light areas are dark, and dark areas are light).

Possible Story:

The photograph likely captures a moment of joy and leisure, perhaps a road trip or a casual outing in a classic car. The smiles on their faces indicate a positive and lighthearted atmosphere.

Created by gemini-2.0-flash on 2025-05-01

Here is a description of the image:

The image is a black and white photographic negative showing two people inside of a car. A woman is sitting in the passenger seat, waving with her right hand. She is wearing a dress with a belted waist, and her hair appears to be curly. A man is in the driver's seat, smiling with his arm resting on the seat back behind the woman. He is wearing a short-sleeved shirt, and there is a watch visible on his wrist. The interior of the car is old-fashioned, with a large, detailed dashboard featuring a radio and various gauges. There is a steering wheel in front of the driver. A coat or jacket is hanging on a hanger inside the car, partially obscuring the view through the front windshield. The top border of the image has perforations, indicating that it is a film negative. Handwritten numbers are visible along the bottom edge of the frame. There is a "Kodak Safety" notice printed on the side of the negative.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-01

The image is a black and white photograph showing two individuals seated inside the cockpit of an aircraft. The person on the left is waving with their right hand, while the person on the right is smiling and looking towards the camera. Both individuals are wearing casual clothing, and their faces appear to be painted or darkened, possibly for theatrical or humorous effect. The aircraft's interior is visible, including the instrument panel and various controls. The photograph is taken from outside the cockpit, looking in through the open door. The background shows some trees, suggesting the aircraft is on the ground, possibly at an airfield. The image has a vintage feel, and the markings on the border indicate it might be from a photo archive, with numbers and possibly a date or catalog information visible.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-06

This is a black and white photograph showing the interior of a vehicle, possibly a vintage car. Two individuals are seated inside the vehicle, smiling and appearing to enjoy themselves. The person in the driver's seat is waving with their right hand. The interior of the car is visible, featuring a steering wheel, dashboard instruments, and a gear shift. There are hanging garments on the left side of the image, possibly part of the car's storage or a coat rack. The image has a vintage feel, and the numbers "390 85" and "KODAK 2-A-WEEL" are printed in the bottom right corner, indicating it is likely a historical photograph. The overall mood of the image is cheerful and nostalgic.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-06

This black-and-white image shows two individuals seated inside a spacecraft, likely from the early space exploration era. The person on the left is waving with a cheerful expression, while the person on the right is smiling and holding onto a control panel. The interior of the spacecraft is visible, featuring numerous switches and instruments. The image has a vintage quality, with a number "390 85" printed at the bottom and some text along the right edge. The photograph appears to capture a moment of excitement or farewell, possibly during a training session or a simulated mission.