Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 47-53 |
| Gender | Female, 85.8% |
| Calm | 41.2% |
| Happy | 19.3% |
| Disgusted | 15.6% |
| Fear | 5.4% |
| Surprised | 5.2% |
| Angry | 5.1% |
| Sad | 4.2% |
| Confused | 3.9% |

Feature analysis

Amazon

AWS Rekognition

| Label | Confidence |
| --- | --- |
| Person | 99.3% |

Categories

Imagga

Created on 2022-01-09

| Category | Confidence |
| --- | --- |
| paintings art | 63.6% |
| streetview architecture | 27.1% |
| pets animals | 4% |
| beaches seaside | 3.1% |

Captions

Microsoft

Created by unknown on 2022-01-09

| Caption | Confidence |
| --- | --- |
| a group of people standing next to a person | 67.7% |
| a group of people looking at each other | 67.6% |
| a group of people looking at a person | 64.8% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-12

a black and white photograph of a bride and groom.

Salesforce

Created by general-english-image-caption-blip on 2025-05-03

a photograph of a group of people standing around a table

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-18

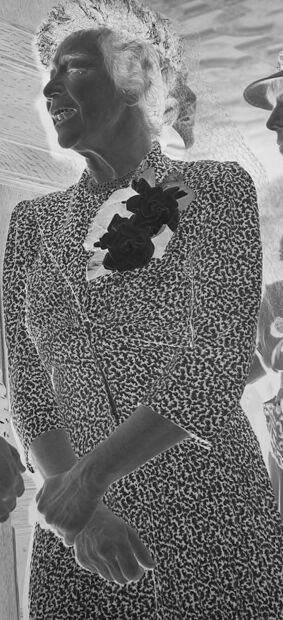

The image shows three individuals dressed in formal attire, gathered in a social setting. The negative image reveals details of their clothing, such as patterned dresses, floral decorations, and hats. The individual on the left is wearing a white suit, while the others appear to be adorned with accessories like gloves, necklaces, and floral accents. The background includes architectural details, such as wooden features and a textured ceiling, suggesting an indoor environment. The figures appear engaged in conversation or interaction.

Created by gpt-4o-2024-08-06 on 2025-06-18

The image appears to be a negative photograph depicting a group of four individuals standing together, dressed in formal attire. The negative effect reverses the image’s colors, making white clothes appear black and vice versa. The individuals are engaged in conversation or interaction, with two women wearing hats adorned with flowers. One woman is wearing a dress with a darker floral pattern, accessorized with a large flower on the chest, and is holding a purse in one hand. Another woman is wearing a dress with small patterns, with a long-sleeve design and a white hat that also features floral decorations. A man dressed in a suit with visible buttons and a tie is standing to the left. The background includes a wall and what seems to be a ceiling with a pattern. The image has the number "12156" written at the top and is on Kodak safety film, as indicated by the text on the border.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-19

The image shows three well-dressed individuals, likely at some kind of formal event or gathering. The person on the left is wearing a polka dot dress and hat, while the person in the middle is wearing a patterned dress and hat. The person on the right is also wearing an ornate hat. The three individuals appear to be engaged in conversation or some kind of interaction. The image has a black and white aesthetic, giving it a vintage or historical feel.

Created by us.anthropic.claude-3-opus-20240229-v1:0 on 2025-06-19

The black and white image shows three smiling women wearing dresses and hats from the 1930s or 1940s era, based on their clothing and hairstyles. They appear to be at a social event, possibly a wedding or celebration, and are standing close together while conversing and laughing. The background has wood paneling and decorative curtains, suggesting they are indoors at a venue or home. The photo has a nostalgic, vintage feel capturing a happy moment between friends during that time period.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-19

This appears to be a vintage black and white photograph showing three elderly women standing together. They are all wearing patterned dresses - one notably in a polka dot pattern. The women have similar hairstyles typical of an earlier era, with their hair styled in short, curled waves. The image has a distinctive photographic quality common to mid-20th century photography, with some light and shadow contrast effects visible. The number "12156" appears to be written at the top of the photograph, suggesting it may be from an archive or collection.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-09

The image is a black-and-white photograph of four individuals, likely taken in the mid-20th century. The photograph appears to be a formal gathering, possibly at an event or ceremony.

- Leftmost Individual
  - Wearing a white suit with a dark shirt and tie
  - Standing with his hands clasped in front of him
  - Partially visible, with only his upper body shown

- Second Individual from the Left
  - Wearing a white dress with black polka dots
  - Holding a small purse or clutch in her right hand
  - Facing the third individual

- Third Individual from the Left
  - Wearing a dark dress with a white pattern
  - Holding a dark flower or brooch on her left shoulder
  - Facing the second individual

- Rightmost Individual
  - Wearing a dark dress with a white pattern
  - Holding a dark object, possibly a fan or a book
  - Facing the third individual

The background of the image features a wall with a door and a textured ceiling. The overall atmosphere suggests a formal event or gathering, possibly during a special occasion or ceremony.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-09

The image is a black-and-white photograph of four individuals, with the faces and hands of three people visible. The individuals are dressed in formal attire, with the two women on the right wearing hats and the man on the left wearing a suit jacket. The woman in the middle is wearing a dress with a pattern of small dots, while the woman on the right is wearing a dress with a floral pattern. The man on the left is wearing a suit jacket with buttons on the sleeve.

In the background, there appears to be a wooden door or wall, and a window with a curtain or blind. The overall atmosphere of the image suggests a formal event or gathering, possibly from the early 20th century given the style of clothing and the black-and-white photography.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-08

The image is a black-and-white photograph featuring four individuals standing together, possibly for a formal portrait. The individuals are dressed in vintage clothing, suggesting a historical context. The woman on the left is wearing a polka-dot dress with a matching hat adorned with a flower. The woman in the middle is dressed in a patterned dress with a flower on her chest. The woman on the right is wearing a dress with a fur collar and a hat with a flower. The man on the left is dressed in a suit with a tie. The photograph has a watermark with the number "12156" in the top corners.

Created by amazon.nova-pro-v1:0 on 2025-06-08

The image is a black-and-white photograph featuring three individuals standing together. The photograph is composed of two distinct halves, with the left half showing a woman wearing a polka-dotted dress and a hat, and the right half showing another woman in a leopard print dress and a hat. The woman in the leopard print dress is holding a bouquet of flowers. The woman on the left is looking at the woman in the leopard print dress, and they appear to be engaged in a conversation. Behind them, there is a wooden door and a mirror reflecting the image of the woman in the leopard print dress. The photograph has a vintage feel, possibly taken in the mid-20th century.

Google Gemini

Created by gemini-2.0-flash on 2025-05-17

Here is a description of the image:

The photograph is an inverse image featuring a group of people, likely from a bygone era, dressed in formal attire. On the left side of the image, a man can be partially seen, dressed in a suit with a tie. Next to him stands a woman wearing a polka dot dress with a hat. She appears to be engaged in conversation with another woman in a patterned dress, adorned with a flower. To the right of these two women, there's another woman in a hat and what seems to be a fur stole, holding a purse. The image quality indicates that it may be an inverted negative, which explains the contrast and tone inversions. The number "12156." is visible at the top and bottom of the image. The environment behind the people is somewhat indistinct but appears to be an indoor setting with textured walls.

Created by gemini-2.0-flash-lite on 2025-05-17

Here's a description of the image:

Overall Impression: The image appears to be a photograph of a group of people. It is a negative image, which means the light and dark values are reversed (white appears black, and vice versa).

Key Elements:

- People: There are at least four people visible. They seem to be dressed in formal or semi-formal attire, suggesting a special occasion. The central focus is on two elderly women, possibly in conversation, while other people are partially visible on the left and right.

- Attire: The people are wearing what seem to be dresses with patterns. Some women are wearing hats with decorations, which suggests an old-fashioned look. The man on the left is wearing a suit and tie.

- Setting: The photo appears to have been taken indoors. There are wooden elements suggesting a room or interior.

Possible Context:

- Given the formal attire and setting, this could be a picture from a wedding, a family gathering, or some other special event.

- The age of the people, the clothing styles, and the negative effect suggest that this is an older photograph.

Note: Due to the negative image, it's difficult to assess color and finer details.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-01

The image appears to be a black and white photograph from what seems to be a formal event or gathering. The photograph shows a group of people standing together. Here are some details:

People in the Image:

- There are four individuals visible in the image.

- The person on the far left is partially visible and is wearing a suit with a bow tie.

- The three women in the center and right are dressed in formal attire.

Clothing and Accessories:

- The woman in the center-left is wearing a polka-dotted dress and a hat with decorative elements.

- The woman in the center is wearing a patterned dress and a hat with a black decorative element.

- The woman on the far right is wearing a dress with a floral pattern and a hat with decorative elements.

- All three women are wearing gloves.

Background and Setting:

- The background appears to be a wooden wall or structure.

- There is a faint, wavy pattern in the background, possibly indicating a curtain or decorative element.

Photographic Details:

- The image has a number "12156" printed on it, which could be a reference or catalog number.

- There are some markings and text on the right side of the image, but they are not fully legible.

The overall atmosphere of the image suggests a formal or ceremonial occasion, possibly from an earlier era given the style of clothing and the black and white nature of the photograph.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-06

This black-and-white photograph appears to depict a formal event or gathering, possibly from the early to mid-20th century. The setting seems to be indoors, with a decorative wall visible in the background.

In the foreground, there are three women dressed in formal attire. The woman on the left is wearing a white outfit with a pattern of small black dots, a wide-brimmed hat adorned with what appears to be a feathered or decorative element, and she is holding a white cane. The woman in the center is also dressed in a dark outfit with a pattern and is wearing a similar styled hat. The woman on the right is wearing a lighter-colored outfit with a fur-trimmed wrap and a hat that also seems to have a feather or decorative element.

To the left, partially visible, is a man in a suit with a tie, gesturing towards the women, suggesting he might be interacting with them or addressing them in some capacity. The overall atmosphere of the photograph conveys a formal and possibly celebratory occasion.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-06

This black-and-white photograph captures a formal scene featuring four elegantly dressed individuals. At the center are two women engaged in conversation. The woman on the left is wearing a light-colored dress with a small, dark pattern and a wide-brimmed hat adorned with a dark ribbon. She has short, light-colored hair and is smiling as she speaks. The woman on the right is dressed in a darker, patterned dress with a brooch featuring dark flowers. She has short, dark, curly hair and is also smiling, appearing to be enjoying the conversation. To the far right, another woman is partially visible, wearing a fur stole and a hat with a dark band. She is looking away from the central figures. On the far left, the partial figure of a man in a light-colored suit and tie is visible, with his hand raised as if gesturing. The background suggests an indoor setting with a patterned ceiling and a wall with vertical lines. The photograph has a vintage feel, likely from the mid-20th century.