Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 33-41 |
| Gender | Male, 97.6% |
| Calm | 84% |
| Sad | 9.7% |
| Surprised | 3.1% |
| Confused | 1.2% |
| Angry | 0.6% |
| Disgusted | 0.6% |
| Fear | 0.5% |
| Happy | 0.3% |

Feature analysis

Amazon

AWS Rekognition

| Person | 99.5% |

Categories

Imagga

Created on 2022-01-15

| Category | Confidence |
| --- | --- |
| nature landscape | 92.7% |
| pets animals | 5.1% |
| streetview architecture | 1.1% |

Captions

Microsoft

Created by unknown on 2022-01-15

| Caption | Confidence |
| --- | --- |
| a group of people posing for a photo | 93.6% |
| a group of people posing for the camera | 93.5% |
| a group of people posing for a picture | 93.4% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-12

a group of women in traditional dress.

Salesforce

Created by general-english-image-caption-blip on 2025-05-16

a photograph of a group of people standing around a bed

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-16

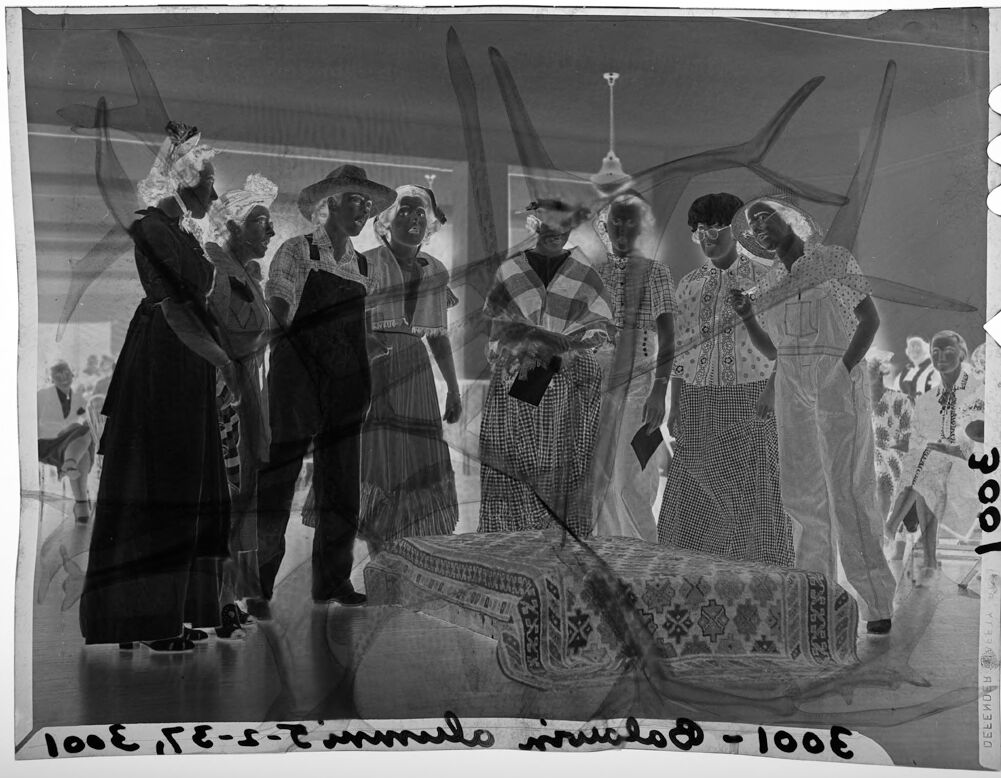

The image is a negative photograph featuring a group of individuals dressed in various costumes, standing together in what appears to be an indoor setting. The clothing styles include patterned designs, overalls, dresses, and hats, suggesting a themed or era-specific gathering. There is a patterned object, likely a rug or decorative piece, placed prominently in the foreground. In the background, architectural elements like lighting fixtures and a ceiling can be seen, along with other people seated or present in the setting. The overall composition appears to capture a moment of camaraderie or performance.

Created by gpt-4o-2024-08-06 on 2025-06-16

The image is a photo negative showing a group of people dressed in various costumes. They are standing together in a room, presumably for a themed event or gathering. Two women on the left side are wearing dresses with head coverings and aprons, suggesting a retro or historical theme. A man next to them is wearing overalls and a wide-brimmed hat. A person in the center has a shawl draped over their shoulders. On the right, three individuals are wearing patterned shirts and overalls. There is a decorated cushion or rug on the floor in front of them. The scene includes several more people in the background, some seated and others standing, observing the group. Writing appears at the bottom and side, though the reversed nature of the negative makes it difficult to decipher.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-19

The image appears to be a black and white photograph depicting a group of people in traditional or costume attire. The individuals are standing around what looks like a decorative rug or carpet, and there appears to be some kind of stage or backdrop behind them. The clothing and accessories worn by the people suggest this may be a performance or theatrical scene of some kind. The overall atmosphere of the image conveys a sense of formality and cultural tradition.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-19

This appears to be a vintage black and white photograph, likely from the mid-20th century. The image shows a group of people standing together, wearing clothing typical of that era - women in dresses with some wearing long skirts, and men in casual attire. The photo appears to be taken indoors, and there seems to be some damage or deterioration to the photograph, visible as marks or spots on the image. There's what looks like a patterned rug or carpet visible in the lower portion of the image. The photograph has a date notation at the bottom, though it's not clearly legible in this view. The overall composition suggests this might be a group gathering or social event of some kind.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-10

The image is a black-and-white photograph of a group of people gathered around a bed, likely taken in the early 20th century. The photograph appears to be a historical or archival image, possibly from a museum or archive.

Here are the details of the image:

- Group of People:
  - There are approximately 10 people in the image.
  - They are all dressed in clothing from the early 20th century, with men wearing suits and women wearing dresses.
  - Some of the individuals are wearing hats or head coverings.
- Bed:
  - The bed is positioned in the center of the image.
  - It has a floral patterned cover and appears to be a four-poster bed.
  - The bed is not made, with the sheets and blankets rumpled.
- Background:
  - The background of the image is out of focus, but it appears to be a room with a high ceiling.
  - There are several objects visible in the background, including what appears to be a chandelier and a piece of furniture.
- Overall:
  - The image suggests that the group of people are gathered around the bed for some purpose, possibly a social gathering or a meeting.
  - The image may have been taken for documentary or archival purposes, or it may have been intended for use in a publication or exhibition.

In summary, the image is a historical photograph of a group of people gathered around a bed, likely taken in the early 20th century. The image provides insight into the clothing and social norms of the time period, and may have been taken for documentary or archival purposes.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-10

The image depicts a group of individuals gathered around a table, dressed in attire reminiscent of the 1930s or 1940s. The group consists of eight people, with seven standing and one seated on the table. They are all wearing clothing typical of the era, including dresses, overalls, and hats.

In the background, there appears to be a room with a high ceiling and a light fixture hanging from it. The atmosphere suggests that the group may be participating in some kind of event or activity, possibly related to a historical reenactment or a themed party.

The image is presented in black and white, which adds to its nostalgic feel. Overall, the scene captures a moment in time from a bygone era, evoking a sense of nostalgia and curiosity about the context and purpose of the gathering.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-06

The image shows a group of people gathered in a room, possibly for a formal event or ceremony. The people are dressed in traditional clothing, with some wearing hats and others wearing glasses. The image has a monochromatic filter applied, giving it a vintage or historical feel. In the foreground, there is a bed or couch covered with a patterned cloth, and a person is standing next to it. In the background, there are several people standing and looking at something.

Created by amazon.nova-pro-v1:0 on 2025-06-06

The image is a black-and-white photograph featuring a group of people standing together in a room. The individuals are dressed in various costumes, suggesting that they are participating in a theatrical performance or a costume party. The costumes range from historical attire to more modern, eclectic outfits. The room appears to be a stage or a large hall, with a visible ceiling fan and a rug on the floor.

Google Gemini

Created by gemini-2.0-flash on 2025-05-18

Certainly! Here's a description of the image:

General Impression:

The image is a black and white photograph, likely a scan of a negative, given its reversed tones. It appears to be a historical photograph, possibly from the mid-20th century. The photo is layered or double-exposed, with some elements appearing ghost-like.

Subject Matter:

The main subject seems to be a group of people, predominantly women, standing indoors. They are positioned behind a low, rectangular object that looks like a carpet or rug with a patterned design.

People:

The individuals are wearing what appear to be period-typical clothing for the time, with dresses, overalls, hats, and perhaps aprons. Some of them seem to be in conversation or examining the carpet. In the background, other figures are visible, possibly seated, suggesting an audience or a gathering.

Environment:

The setting is indoors, under a ceiling with what looks like a ceiling fan. The presence of a carpet or rug might indicate a shop, an exhibit, or a home.

Overlays/Double Exposure:

A significant aspect of the image is the apparent layering or double exposure. Large, abstract shapes that somewhat resemble wings or blades are superimposed over the people and the setting. This makes the image appear dreamlike or distorted and obscures certain details.

Overall Tone:

The photo has a vintage, somewhat mysterious feel due to its age and the double exposure effect. It likely captures a moment in time related to community, commerce, or local culture.

Created by gemini-2.0-flash-lite on 2025-05-18

Here is a description of the image:

Overall Impression: The image is a black and white negative, presenting a group of people seemingly gathered in a building. It appears to be an older photograph due to its style, the clothing worn by the individuals, and the aged look of the negative. There's a slightly surreal quality, with overlayed imagery or a double exposure adding an ethereal layer to the scene.

The Main Focus:

- The Group: A diverse group of people, likely of African descent, is the primary focus. They are arrayed in a line, seemingly interacting with something just out of frame, or possibly examining an item.

- Clothing & Time Period: Their clothing provides clues about the time period. There are dresses and outfits that could suggest the mid-20th century or earlier. The diversity of their attire, including overalls, gingham, and hats, adds an interesting layer to the scene.

- Object of Interest: They are gathered around a rug that is lying on the floor.

The Background:

- Setting: It seems they are indoors, possibly a community hall or meeting room. There's a ceiling fan and visible elements such as other people seated in the background.

Technical Aspects and Overlay:

- Negative: Being a negative, the image has its colors inverted, giving a stark and unique feel.

- Overlay/Double Exposure: The overlay or double exposure is an interesting aspect. Some large, abstract shapes are visible, along with what seems to be a different image superimposed upon the primary scene. This could be unintentional, from the photographic process, or intentionally used for an artistic effect.

Overall Interpretation: The photo could depict a community event, a gathering, or perhaps a ceremony. The double exposure makes it a little difficult to be sure but that adds mystery and intrigue to the scene.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-03

The image appears to be a double-exposed black-and-white photograph. Double exposure is a photographic technique where two images are combined into one, often resulting in a ghostly, layered effect.

In the foreground of the image, there are several people, primarily women, standing in a line. They are dressed in various styles of clothing, including dresses, skirts, and hats, suggesting a formal or semi-formal event. The attire and the setting indicate that the photograph might be from an earlier era, possibly the mid-20th century.

In the background, there is a large, indistinct shape that resembles an aircraft, with propellers visible. This suggests that the event might be taking place at an airport or an aviation-related location. The people in the foreground seem to be posing for the photograph, while the aircraft in the background adds a unique and intriguing element to the scene.

The overall composition of the image, with the double exposure effect, creates a sense of overlapping realities, blending the human subjects with the mechanical elements of the aircraft. The handwritten text at the bottom of the image, "3001," might be a reference number or identifier related to the photograph.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-11

This is a black-and-white photograph that appears to be a double exposure, showing two distinct scenes layered on top of each other. In the foreground, there is a group of people standing indoors, dressed in traditional attire. Some of the individuals are wearing hats, and the attire suggests a cultural or ceremonial context. There is also a patterned rug on the floor in front of them. The background seems to show a different scene with people seated and possibly dining, but it is obscured by the double exposure effect, making it difficult to discern specific details. The photograph has some text written on it, including a series of numbers and letters, which might indicate a catalog or reference number for the image. The overall quality and style suggest it could be from the mid-20th century.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-11

This is a black and white photograph depicting a group of people gathered around a table covered with a decorative tablecloth. The individuals appear to be dressed in traditional or cultural attire, with some wearing hats and others in dresses with various patterns. The setting seems to be indoors, possibly in a community hall or similar venue, as indicated by the presence of a ceiling fan in the background. The image has some scratches and marks, suggesting it is an older photograph. There is also some text written on the bottom of the image, which appears to be an identifier or a label for the photograph.