Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 47-53 |
| Gender | Male, 88.1% |
| Sad | 62.1% |
| Calm | 33.2% |
| Confused | 1.1% |
| Angry | 1.1% |
| Disgusted | 0.9% |
| Surprised | 0.7% |
| Happy | 0.4% |
| Fear | 0.4% |

Feature analysis

Amazon

AWS Rekognition

| Feature | Confidence |
| --- | --- |
| Person | 99.3% |

Categories

Imagga

Created on 2022-01-15

| Category | Confidence |
| --- | --- |
| interior objects | 61.5% |
| people portraits | 22.2% |
| events parties | 7.6% |
| paintings art | 4.3% |
| food drinks | 2% |
| text visuals | 1% |

Captions

Microsoft

Created by unknown on 2022-01-15

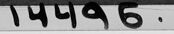

| Caption | Confidence |
| --- | --- |
| a group of people posing for a photo | 93.2% |
| a group of people posing for a picture | 93.1% |
| a group of people posing for the camera | 93% |

Salesforce

Created by general-english-image-caption-blip on 2025-05-16

a photograph of a group of people sitting around a table

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-15

The image is a black-and-white negative photograph showing a group of individuals gathered indoors in a formal setting. The room contains elegant furnishings, including chairs, a small table, and decorative items such as a large floral arrangement and framed artwork on the wall. The group appears to be engaged in conversation, with some seated while others are standing. The atmosphere seems refined and organized, likely depicting a formal or social event.

Created by gpt-4o-2024-08-06 on 2025-06-15

The image appears to be a photographic negative showing a group of people in formal attire sitting and standing in a room. There are five individuals seated on chairs or a couch, while one person is standing. The room is elegantly decorated with a large bouquet of flowers on a table. A framed painting is hung on the wall, depicting a still life with flowers. The individuals in the image are dressed in suits and dresses, pointing to a formal or social gathering. A carpet is visible on the floor, and the furniture includes a table with a decorative object on it.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-19

The image appears to depict a formal social gathering or event, with several people dressed in formal attire seated around a table. There is a floral arrangement on the table, and a framed artwork on the wall behind them. The individuals in the image are not identified, but they seem to be engaged in some kind of discussion or interaction.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-19

This is a black and white photograph that appears to be from an earlier era, showing a group of people in a formal indoor setting. Several individuals are seated and standing, dressed predominantly in white suits and formal attire. There's a floral painting hanging on the wall in the background, and what appears to be a floral arrangement visible in the scene. The setting looks like it could be a social gathering or club setting. The image has a number "14496" visible at the top and bottom, suggesting it may be from an archive or collection. The room has a carpeted floor and appears to be well-lit, with the subjects arranged in a casual conversational grouping.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-29

The image is a black-and-white photograph of a group of people in a living room setting.

The group consists of seven individuals, all dressed in formal attire. The men are wearing suits and ties, while the women are wearing dresses. They are seated on chairs and a couch, with one man standing behind them. The room is decorated with a painting on the wall, a vase of flowers, and a coffee table with a hat on it.

The background of the image shows a plain wall with a doorway to the right. The overall atmosphere of the image suggests a formal gathering or social event, possibly a party or reception.

The image appears to be a vintage photograph, likely taken in the mid-20th century. The quality of the image is good, with clear details visible in the faces and clothing of the individuals. The image has a nostalgic feel to it, evoking a sense of a bygone era.

The image does not show any significant changes or comparisons, but rather presents a static scene of a group of people in a living room setting.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-29

This image is a black-and-white photograph of a group of people in formal attire, gathered in a room. The group consists of seven individuals, with six men and one woman. They are all dressed in formal wear, with the men wearing suits and ties, and the woman wearing a dress.

The group is seated in various positions, with some sitting on chairs or couches, while others stand. They appear to be engaged in conversation, with some looking at each other and others looking away. The atmosphere seems relaxed and informal, despite their formal attire.

In the background, there is a painting on the wall, which appears to be a still life of flowers. There is also a vase of flowers on a table behind the group. The room is well-lit, with natural light coming from a window on the right side of the image.

Overall, the image suggests a social gathering or meeting of some kind, possibly a formal event or celebration. The formal attire and relaxed atmosphere suggest that the occasion may be a special one, but the exact nature of the event is not clear from the image alone.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-05-29

The image shows a group of six people sitting in a room. The people are all dressed in formal attire, with the men wearing suits and ties and the women wearing dresses. The man in the middle is holding a hat, and the man on the right is holding a rope. The room has a carpeted floor and a picture frame on the wall. The image has a black-and-white filter and a watermark in the top left corner.

Created by amazon.nova-pro-v1:0 on 2025-05-29

The black-and-white photo shows a group of people sitting on chairs and a couch in a room. They are all wearing formal attire, and some of them are wearing hats. A man is standing in front of them. A wooden table with two hats on top is in front of the man sitting on the couch. A flower arrangement is behind the standing man. A picture frame is mounted on the wall.

Google Gemini

Created by gemini-2.0-flash on 2025-05-18

Here's a description of the image:

The image appears to be a black-and-white photographic negative of a group of people. Six men and one woman are present. The men are all dressed in suits. Most of the men are seated and there is a man leaning behind another man who's sitting. There is a woman seated on the couch next to one of the men. In the foreground, there's a small table with what looks like two hats. Behind the group, there is a framed artwork hanging on the wall and a bouquet of flowers. The setting appears to be an indoor lounge or parlor. The image seems to be from an older era.

Created by gemini-2.0-flash-lite on 2025-05-18

Certainly! Here's a description of the image:

Overall Impression:

The image appears to be a vintage photograph, likely taken in the mid-20th century. The style suggests a studio portrait or a staged group photo.

Setting and Composition:

- The scene is set indoors, likely a living room or a lounge area.

- There is a painting on the wall, and a large floral arrangement adds a touch of elegance.

- Several people are arranged in a semi-circle.

- The composition and lighting suggest careful arrangement for a photograph.

People:

- There are a group of well-dressed men and a woman.

- They are dressed in suits, some with bow ties.

- A man is leaning over holding a necklace or chain.

- The expressions are calm and focused.

Additional Details:

- There are two hats on the small table.

- The photo has a distinctive border and markings indicating it might be a negative or a print from a negative.

Overall, it portrays a group of people from an earlier era, captured in a formal and posed setting.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-03

The image appears to be a black-and-white photograph featuring a group of people in a formal setting. Here are the details:

Setting: The scene takes place indoors, possibly in a room or hall with a plain wall in the background. There is a framed painting of flowers hanging on the wall.

People: There are seven individuals in the photograph.

- Four people are seated on chairs. They are dressed formally, with the men wearing suits and the woman wearing a dress.

- Three people are standing. One man is standing behind the seated individuals, while another man is standing to the right, leaning forward and holding a hat. The third standing individual is partially obscured by the man leaning forward.

Attire: The attire of the individuals suggests a formal or ceremonial occasion. The men are wearing suits, and some are wearing bow ties. The woman is dressed in a formal dress.

Objects: There is a small table in front of the seated individuals, with a hat placed on it. There is also a vase with flowers on the table.

Interaction: The individuals seem to be engaged in a conversation or interaction, with some looking at each other and others looking towards the camera.

Photographic Effects: There is a noticeable double exposure effect in the photograph, where some of the individuals appear ghostly or transparent, overlapping with other parts of the image.

The overall atmosphere of the image suggests a formal gathering or event, possibly from an earlier time period given the style of clothing and the black-and-white nature of the photograph.