Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 34-42 |
| Gender | Female, 71.2% |
| Calm | 87.7% |
| Angry | 3.2% |
| Happy | 3% |
| Sad | 2.4% |
| Surprised | 2.3% |
| Confused | 0.6% |
| Disgusted | 0.5% |
| Fear | 0.3% |

Feature analysis

Amazon

AWS Rekognition

| Label | Confidence |
| --- | --- |
| Person | 98.1% |

Categories

Imagga

Created on 2022-01-15

| Category | Confidence |
| --- | --- |
| streetview architecture | 39.6% |
| paintings art | 30.7% |
| interior objects | 13.8% |
| text visuals | 6.8% |
| events parties | 4.8% |
| people portraits | 2.4% |
| food drinks | 1.1% |

Captions

Microsoft

Created by unknown on 2022-01-15

| Caption | Confidence |
| --- | --- |
| a man sitting in front of a building | 69.3% |
| a man standing in front of a building | 69.1% |
| a man sitting in a chair in front of a building | 69% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-11

a nurse prepares to remove a patient's head from the operating room.

Salesforce

Created by general-english-image-caption-blip on 2025-05-23

a photograph of a woman is getting her hair done by a hairdressucker

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-13

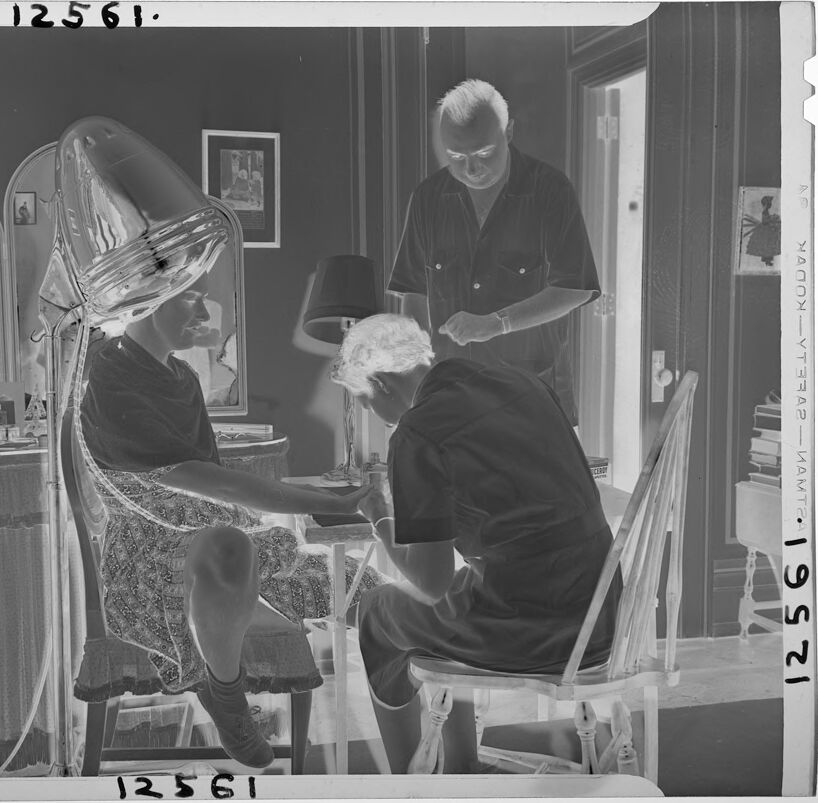

The image depicts a scene inside a room with vintage furnishings. A woman is seated under a hair drying hood, suggesting a beauty or grooming activity. Another individual is seated near her, possibly providing or assisting with some service. The room features a table or vanity draped with fabric, lamps, and framed artwork on the walls, creating a cozy, mid-century atmosphere.

Created by gpt-4o-2024-08-06 on 2025-06-13

The image is a black and white photo negative depicting a scene inside what appears to be a salon or personal grooming setting. The central figure is a woman seated under a large hair drying hood, which is part of a salon hairdryer chair. The woman is wearing a patterned dress and socks. A person with grey-white hair is seated opposite her, attending to the woman's hand, possibly engaged in a manicure or some other grooming service. Around the room are various furnishings typical of a salon or personal grooming setting, including lamps, mirrors, and an assortment of products on a table covered with a cloth. The room's walls have framed pictures, and there's a window partially visible near the edge of the image. The atmosphere conveys a vintage, early-to-mid 20th century style.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-20

The image shows an interior scene with several people. In the foreground, there are two individuals seated at a table or desk, one of whom appears to be an older person receiving some kind of care or attention from the other. In the background, there is another person standing and observing the scene. The room contains various furnishings and decor, including a lamp, framed artwork on the walls, and other objects. The overall atmosphere suggests a domestic or personal setting.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-20

This is a black and white photograph that appears to be from a beauty salon or home beauty treatment setting. The image shows someone sitting under a vintage-style hair dryer hood while receiving some kind of beauty treatment. There's a beautician or stylist seated next to them, and another person standing in the background. The room has table lamps and framed pictures on the walls, giving it a residential feel. The furniture includes what appears to be a vanity table with a cloth cover and some chairs. The photo has a very mid-20th century aesthetic, as evidenced by the style of the equipment and furnishings.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-10

The image is a black-and-white photograph of a woman receiving a manicure in a salon. The woman is seated in a chair with her hands extended, and a man is kneeling beside her, holding her hands. A hair dryer is positioned above her head, and a mirror is visible behind her. The room is decorated with lamps, a dresser, and a bookshelf.

The woman wears a dress and has her hair covered by the hair dryer. The man is dressed in a dark shirt and shorts. The background features a wall with a door and a window, as well as a framed picture on the wall. The overall atmosphere suggests a professional setting, likely a beauty salon or spa, where the woman is receiving a manicure treatment.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-10

This image is a black and white photograph of a man seated in a chair, with a hair dryer hood placed over his head. He is wearing a dark shirt and patterned shorts, and has his right leg crossed over his left. The man is seated in front of a vanity, which features a mirror, a lamp, and various personal care items.

To the right of the man, another individual is kneeling on a chair, appearing to be engaged in an activity involving the man's hands. This person is also wearing a dark shirt and shorts. In the background, a third man stands near a doorway, wearing a dark shirt. The room is decorated with dark walls, a window, and framed pictures.

The overall atmosphere of the image suggests that it may be a scene from a film or television show, possibly depicting a character receiving a haircut or other beauty treatment. The presence of the hair dryer hood and the vanity suggests a focus on personal grooming, while the kneeling individual's activity adds an element of mystery to the scene.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-08

The image is a black-and-white photograph of a salon scene. In the foreground, a woman is seated in a chair, and a man is standing behind her, holding a hair dryer and styling her hair. The woman is looking at herself in a mirror that reflects the man and the hair dryer. On the left side of the image, there is a table with a lamp and a mirror. On the right side, there is a shelf with books and a picture frame. The image has a watermark with the numbers "12561" and "12521" in the top left and bottom right corners, respectively.

Created by amazon.nova-pro-v1:0 on 2025-06-08

The black-and-white image shows a woman sitting on a chair in front of a mirror with a lamp on the table. The woman is wearing a dress and a pair of socks. A man is sitting on a chair next to her, wearing a shirt and pants. He is holding something in his hand. There is another man standing behind them, wearing a polo shirt. There are picture frames on the wall.

Google Gemini

Created by gemini-2.0-flash on 2025-05-18

The image is a black and white photographic negative, depicting a domestic scene. A woman sits with a large salon-style hair dryer over her head. Another woman is sitting across from her, giving a manicure. A man stands behind the manicurist, appearing to supervise or observe.

The woman under the hair dryer is seated on a wooden chair, wearing a dress and socks. The hair dryer is a chrome-colored device with a large hood that covers her head. In the background, there is a vanity with a tri-fold mirror and a lamp on top of it.

The manicurist is seated on a wooden chair, facing the woman with the hair dryer. She is wearing a dress and focusing on the manicure. A lamp is on the table.

The man standing behind the manicurist is wearing a button-down short sleeved shirt and a watch. His expression is neutral.

The background of the room is filled with domestic furnishings, including lamps, a vanity, and framed pictures on the walls. A doorway is visible, suggesting another room beyond. The overall impression is one of an intimate, everyday moment captured in a mid-20th century setting.

Created by gemini-2.0-flash-lite on 2025-05-18

Here's a description of the image:

Overall Impression: This is a black and white, negative image, likely from the mid-20th century, depicting a scene in what appears to be someone's home. The lighting is fairly soft, and the subject matter suggests a domestic activity.

Setting: The scene takes place indoors, likely a bedroom or a room with a vanity/dressing table. There's a sense of a home setting, not a professional salon.

People and Activities:

- Foreground: A woman is seated, with a hair dryer hood over her head. She appears to be receiving a manicure from another person.

- Middle Ground: Another person, presumably the manicurist, is working on the seated woman's hands.

- Background: A man is standing nearby. He appears to be watching.

Objects of Note:

- Hair Dryer: The large, hooded hair dryer is a central element, giving a sense of the era.

- Vanity/Dressing Table: There's a table with a triple mirror, presumably a vanity, suggesting the location.

- Lamps: A couple of lamps are visible, contributing to the soft lighting in the room.

- Chairs: The simple wooden chairs are characteristic of a home environment.

- Artwork: There are a few framed pictures or paintings on the walls, adding to the domestic atmosphere.

Atmosphere: The image evokes a sense of intimacy and a glimpse into daily life during the era it was taken. The scene appears informal and personal, suggesting a home-based activity or perhaps a service provided in a home setting.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-03

The image is a black-and-white photograph depicting an indoor scene, likely from the mid-20th century. The setting appears to be a room in a house or salon, where a woman is having her hair styled.

In the foreground, a woman is seated on a chair, wearing a patterned skirt and a dark top. She is under a vintage hair dryer, which is a large, hooded device commonly used in the past for drying and setting hair. Her legs are crossed, and she is wearing socks and shoes.

To her right, another woman, presumably a hairdresser, is attending to her. The hairdresser is kneeling and appears to be adjusting or working on the woman's hair. She is dressed in a dark outfit, which could be a uniform or professional attire typical of hairdressers from that era.

In the background, a man is standing near a doorway, looking down, possibly at a magazine or a piece of paper. He is wearing a dark shirt and light-colored pants. The room is furnished with items such as a table with a lamp, a mirror, and various decorative elements, giving it a cozy and somewhat elegant appearance.

The photograph has markings along the edges, including the number "12561" repeated several times, which might be a reference or catalog number. The image has a nostalgic feel, capturing a moment in time that reflects the fashion, technology, and social norms of the period.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-11

The image is a black-and-white photograph, likely from the mid-20th century, judging by the style of clothing and decor. It shows a scene of a hair salon or a similar setting where a person is seated in a chair, having their hair styled or treated. The individual seated has their hair in a bowl shape, likely due to a hairdryer or styling device. Another person, possibly a stylist or assistant, is standing behind them, focusing on their hair. The room is furnished with a table, lamps, and framed pictures on the wall, giving it a vintage, cozy ambiance. The overall tone of the image suggests a meticulous and attentive grooming process.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-11

The image shows a black-and-white scene in what appears to be a salon or a similar setting. There are two people in the foreground: one person is seated under a large hair dryer, possibly getting a perm or a similar hair treatment, while another person is kneeling on a chair and appears to be adjusting something on the floor. In the background, another person is standing and seems to be observing or working on something. The room has dark walls with framed pictures and a door leading to another room. There is a table with a lamp and various items on it, and a stack of books or papers on a small stand near the door. The overall atmosphere suggests a busy salon environment. The image has a vintage feel, likely from the mid-20th century.