Machine Generated Data

Tags

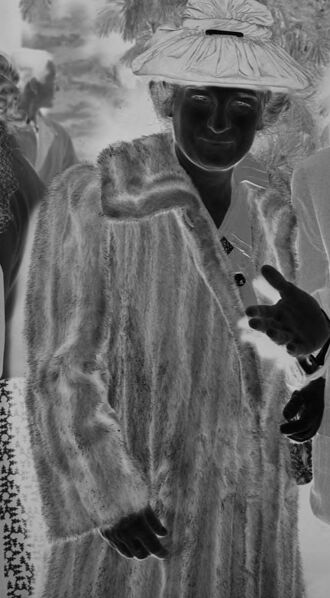

Amazon

created on 2022-01-09

| Tag | Confidence |
| --- | --- |
| Clothing | 99.8 |
| Apparel | 99.8 |
| Person | 98.4 |
| Human | 98.4 |
| Overcoat | 97.3 |
| Coat | 97.3 |
| Person | 96.9 |
| Tie | 95.6 |
| Accessories | 95.6 |
| Accessory | 95.6 |
| Sunglasses | 95.6 |
| Person | 84.5 |
| Person | 84.1 |
| Suit | 82.7 |
| Tuxedo | 82.2 |
| Person | 82 |
| Text | 67 |
| Shirt | 66.9 |
| Person | 65.2 |
| Hat | 62.3 |
| Smoke | 62.2 |
| Military Uniform | 59.1 |
| Military | 59.1 |
| Home Decor | 57 |
| Sun Hat | 56.3 |
| Person | 50.7 |

Clarifai

created on 2023-10-25

Imagga

created on 2022-01-09

| Tag | Confidence |
| --- | --- |
| man | 32.2 |
| person | 25.8 |
| people | 22.8 |
| male | 22.7 |
| work | 18.8 |
| adult | 16.2 |
| black | 16.2 |
| old | 16 |
| men | 15.4 |
| job | 14.1 |
| building | 13.8 |
| business | 13.3 |
| world | 12.8 |
| architecture | 12.5 |
| mask | 12.2 |
| engineer | 12.2 |
| group | 12.1 |
| construction | 12 |
| professional | 11.9 |
| worker | 11.8 |
| city | 11.6 |
| businessman | 11.5 |
| serious | 11.4 |
| standing | 10.4 |
| portrait | 10.3 |
| clothing | 9.4 |
| industry | 9.4 |
| occupation | 9.2 |
| tourism | 9.1 |
| equipment | 8.6 |
| builder | 8.5 |
| human | 8.2 |
| industrial | 8.2 |
| working | 7.9 |
| coat | 7.9 |
| suit | 7.9 |
| film | 7.8 |
| manager | 7.4 |
| safety | 7.4 |
| brass | 7.3 |
| protection | 7.3 |
| danger | 7.3 |
| dirty | 7.2 |
| negative | 7.2 |
| looking | 7.2 |
| covering | 7.2 |
| women | 7.1 |
| face | 7.1 |
| helmet | 7 |
| white | 7 |

Google

created on 2022-01-09

| Tag | Confidence |
| --- | --- |
| Coat | 87.6 |
| Sleeve | 87.2 |
| Black-and-white | 86.1 |
| Gesture | 85.2 |
| Collar | 83.9 |
| Style | 83.9 |
| Hat | 79.8 |
| Adaptation | 79.3 |
| Blazer | 78 |
| Eyewear | 77.4 |
| Art | 76.7 |
| Monochrome | 76.5 |
| Monochrome photography | 76.3 |
| Tints and shades | 75.8 |
| Font | 74.1 |
| Formal wear | 73.3 |
| Vintage clothing | 73.2 |
| Sun hat | 67 |
| Pattern | 66.2 |
| Fashion design | 64.8 |

Microsoft

created on 2022-01-09

| Tag | Confidence |
| --- | --- |
| text | 96.9 |
| person | 95.9 |
| clothing | 95.4 |
| man | 91.7 |
| black and white | 87.7 |
| human face | 79.9 |
| drawing | 71.9 |
| glasses | 56.7 |
| sunglasses | 55.1 |
| monochrome | 52.4 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 38-46 |
| Gender | Male, 99.7% |
| Calm | 51.1% |
| Sad | 27.3% |
| Disgusted | 10.9% |
| Confused | 3.3% |
| Surprised | 3% |
| Angry | 2.6% |
| Fear | 1% |
| Happy | 0.7% |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| streetview architecture | 94.6% |
| nature landscape | 2.3% |
| paintings art | 2.1% |

Captions

Microsoft

created on 2022-01-09

| Caption | Confidence |
| --- | --- |
| a group of people standing in front of a mirror posing for the camera | 85% |
| a group of people standing in front of a mirror | 84.9% |
| a group of people in front of a mirror posing for the camera | 84.8% |

Text analysis

Amazon

110.79

ISO

LLC79

L079

OSI

HAGOX- YT33A2-NAMTZAR

L079

OSI

HAGOX-

YT33A2-NAMTZAR