Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 6-14 |
| Gender | Female, 97.2% |
| Fear | 83.7% |
| Calm | 11% |
| Surprised | 2.8% |
| Angry | 1.2% |
| Disgusted | 0.6% |
| Confused | 0.3% |
| Sad | 0.2% |
| Happy | 0.2% |

Feature analysis

Amazon

AWS Rekognition

| Feature | Confidence |
| --- | --- |
| Person | 93.1% |

Categories

Imagga

Created on 2022-01-09

| Category | Confidence |
| --- | --- |
| streetview architecture | 58.6% |
| beaches seaside | 15.3% |
| paintings art | 9.2% |
| cars vehicles | 5.2% |
| interior objects | 4.8% |
| pets animals | 2.8% |
| nature landscape | 1.9% |
| text visuals | 1.3% |

Captions

Microsoft

Created by unknown on 2022-01-09

| Caption | Confidence |
| --- | --- |
| a boy in a cage | 84.7% |
| a group of people in a cage | 84.6% |
| a boy standing in front of a fence | 68.9% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-12

black and white photograph of a boy and girl sitting in a cage.

Salesforce

Created by general-english-image-caption-blip on 2025-05-22

a photograph of two children in helmets and helmets are standing in a cage

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-11

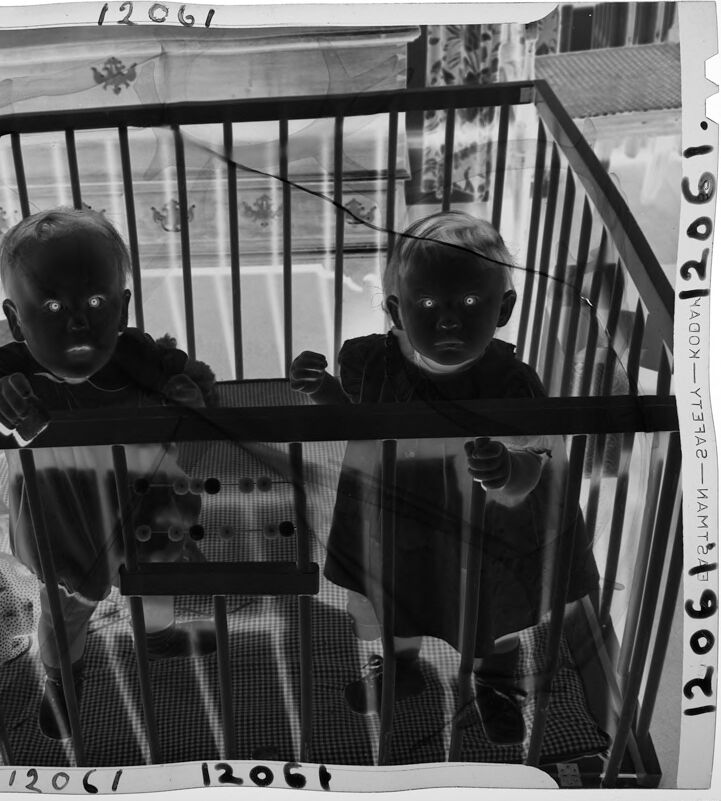

The image shows two young children standing inside a playpen. The playpen has vertical bars and contains some toys, including a stuffed animal and an abacus. The background features curtains and windows, providing natural light into the room. The photo appears to be a photographic negative based on its inverted tones and markings.

Created by gpt-4o-2024-08-06 on 2025-06-11

The image appears to be a black-and-white negative photograph of two children standing in a playpen. The playpen is made of wooden slats, and inside, there are visible toys, including a stuffed animal and a set of abacus-like beads. The floor of the playpen is covered with a patterned fabric, possibly a mat or blanket. Curtains with a floral pattern can be seen in the background, suggesting the playpen is positioned near a window. Handwritten numbers and markings are present along the edges of the negative, indicating it is a film strip.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-19

The image shows two dark-colored dolls or figurines sitting in a crib or caged structure. The dolls have large, dark eyes and appear to be looking directly at the camera. The background is blurred, but it seems to be a domestic setting. The image has a somewhat unsettling or eerie quality to it.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-19

This is a striking black and white photograph that appears to be a negative image, showing two small children in what looks like a crib or playpen. The negative effect makes their faces appear dark with bright eyes, creating an almost eerie or surreal quality to the image. The children are standing behind the bars of the crib/playpen, and there appears to be some patterned fabric or bedding visible at the bottom. The photograph has numbers "12061" visible at the edges, suggesting it might be from an archive or photo collection. The composition is taken from above, looking down into the enclosure, with the vertical bars creating strong geometric lines in the image.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-09

The image is a black-and-white photograph of two babies in a crib, with a handwritten number "12061" on the top and bottom of the image.

- The babies are both wearing dark clothing and have their hands grasping the bars of the crib.

- The baby on the left has short hair and is looking directly at the camera.

- The baby on the right has longer hair and is also looking directly at the camera.

- The crib has a checkered patterned mattress and a metal frame with vertical bars.

- There is a small stuffed animal or toy on the left side of the crib.

- The background of the image is out of focus, but it appears to be a room with a window and a door.

- The overall atmosphere of the image is one of innocence and curiosity, as the babies seem to be exploring their surroundings.

The image is a heartwarming and nostalgic snapshot of two young children in a crib, capturing a moment in time that is both familiar and endearing.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-09

The image depicts a black-and-white photograph of two babies standing in a crib, with the photo itself appearing to be a negative. The babies are positioned on either side of the crib, each grasping the bars with both hands. They wear dark clothing and have short hair. The crib features a checkered mattress and is situated in front of a window, which allows natural light to enter the room. The overall atmosphere of the image suggests that it may have been taken for a family album or as a keepsake.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-08

The image features two children standing inside a crib, captured in black and white. The crib is placed in a room with a window, allowing some light to enter. The children are looking out of the crib, and their faces are illuminated by the light, creating a striking contrast. The image has a vintage or historical feel, possibly indicating that it was taken some time ago. The children's expressions are serious, and they seem to be focused on something outside the crib. The image is framed with a border, and there are numbers and text in the corners, possibly indicating the date or other information. Overall, the image is a poignant and evocative portrayal of childhood and the curiosity and wonder of young children.

Created by amazon.nova-pro-v1:0 on 2025-06-08

A black-and-white photo of two children in a crib with a number "12061" on the top and bottom of the photo. The children are standing on the crib, holding the bars, and looking up. The child on the left is wearing a long-sleeve shirt, while the child on the right is wearing a dress. There is a toy in the middle of the crib. Behind the crib is a window with curtains.

Google Gemini

Created by gemini-2.0-flash on 2025-05-17

Here is a description of the image:

The image is a black and white negative photograph of two infants in a crib. The babies are standing and leaning on the railing of the crib, and they have a creepy appearance due to the inverted coloring that makes their eyes appear to glow.

The crib is surrounded by vertical bars. A toy or stuffed animal is inside the crib along with a counting toy. The background is somewhat blurry, but a curtain can be seen with patterns on it.

The image is labeled with the number "12061" along the top and bottom edges, and vertically along the right edge, as well as the word "KODAK" (mirrored) indicating it is a film negative.

Created by gemini-2.0-flash-lite on 2025-05-17

Here's a description of the image:

This is a black and white, negative image of two young children in a crib. The image has a vintage feel due to its negative quality and the subject matter.

Composition:

- The main focus is on the two children, who are standing in a wooden crib.

- The crib's bars create a grid-like pattern that adds structure to the composition.

- The background is somewhat blurred, but we can make out curtains and possibly details of the room.

Subject:

- The children are the focal point. They appear to be toddlers, likely around 1-2 years old.

- Their faces are illuminated, with the pupils appearing as bright white dots due to the negative effect. This gives them an eerie, almost otherworldly look.

- They are both holding onto the top of the crib, suggesting they are trying to climb out or are looking out at the world.

- One child is holding a stuffed animal.

Technical Aspects:

- It's clearly a photographic negative, which reverses the colors, making light areas appear dark and vice versa.

- The image is surrounded by a white border with the numbers "12061" marked on it, possibly representing the date.

Overall Impression:

The image has a slightly unsettling but also nostalgic quality. The negative effect and the unusual appearance of the children's eyes create a unique atmosphere. It gives off a vintage, maybe even historical feel due to its grainy appearance.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-02

The image is a black-and-white photograph featuring two young children standing inside a crib. Both children have their faces painted black, which creates a striking visual effect. They are gripping the bars of the crib, looking directly at the camera with a somewhat intense gaze. The child on the left is holding a stuffed animal, while the child on the right is wearing a headband. The background shows a room with patterned curtains and some furniture, contributing to a domestic setting. The overall composition and the unusual face paint give the image a somewhat eerie or surreal quality. The number "12061" appears multiple times around the edges of the photo, likely indicating a catalog or reference number.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-24

The image is a black and white photograph showing two identical dolls with large, eye-like features that resemble glowing eyes. The dolls are positioned in a cradle, with bars surrounding them, giving the impression that they are confined or imprisoned. The dolls are dressed in matching dark outfits and appear to be in a state of stillness, with their arms outstretched. The photograph has a vintage feel, and there is a label or text overlaid on the image that reads "12061" repeatedly. The background includes some blurred elements, possibly indicating a domestic setting.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-24

This black-and-white photograph depicts two babies inside a crib. The babies appear to be looking directly at the camera, and their eyes have a reflective quality, likely due to the camera flash. The crib has vertical bars, and the babies are holding onto them. There is a stuffed animal and a toy abacus inside the crib with them. The image has a vintage feel, possibly from the mid-20th century, and there are various markings and numbers on the photograph, suggesting it might be an archival or documentary image. The background includes some indistinct patterns and objects, possibly part of the room's decor.