Machine Generated Data

Tags

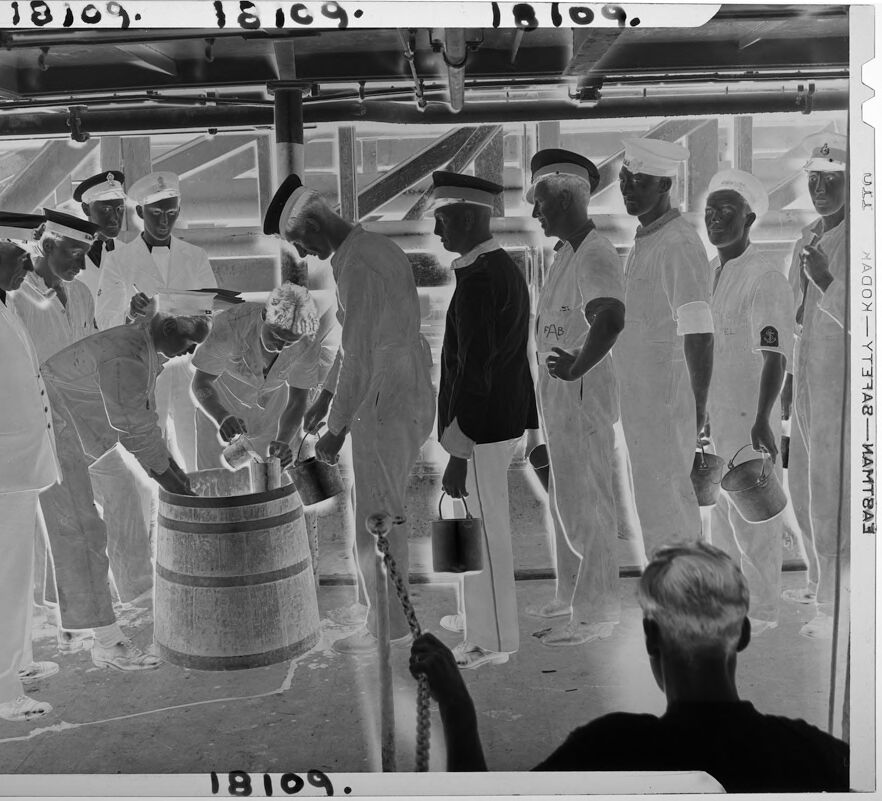

Amazon

created on 2022-01-09

| Tag | Confidence |
|---|---|
| Person | 99.7 |
| Human | 99.7 |
| Person | 99.6 |
| Person | 99.4 |
| Person | 98.8 |
| Person | 98.7 |
| Person | 98.1 |
| Person | 97.8 |
| Person | 96.9 |
| Person | 96.4 |
| Person | 94.6 |
| Person | 94.1 |
| Clothing | 88.3 |
| Apparel | 88.3 |
| Person | 84.1 |
| Airplane | 71 |
| Transportation | 71 |
| Vehicle | 71 |
| Aircraft | 71 |
| People | 70 |
| Shorts | 69.6 |
| Helicopter | 68.1 |
| Person | 66.3 |
| Person | 63.9 |
| Crowd | 62.3 |
| Clinic | 62.2 |
| Text | 59.9 |
| Photography | 56.2 |
| Photo | 56.2 |
| Indoors | 55.3 |

Clarifai

created on 2023-10-25

| Tag | Confidence |
|---|---|
| people | 99.7 |
| adult | 97.7 |
| man | 97.1 |
| many | 96.7 |
| group together | 96.6 |
| indoors | 96.2 |
| woman | 95.5 |
| group | 95.4 |
| monochrome | 95.1 |
| several | 89.1 |
| transportation system | 85.4 |
| industry | 80 |
| vehicle | 79.8 |
| five | 78.3 |
| wear | 75.8 |
| watercraft | 74.6 |
| horizontal | 74.2 |
| employee | 73.5 |
| military | 73.2 |
| grinder | 72.6 |

| ||

Imagga

created on 2022-01-09

| Tag | Confidence |
|---|---|
| passenger | 55.9 |
| dairy | 49.9 |
| transportation | 26 |
| man | 23.5 |
| business | 21.2 |
| work | 19.6 |
| people | 19.5 |
| male | 18.4 |
| car | 17.6 |
| industry | 16.2 |
| train | 15.5 |
| smiling | 15.2 |
| machine | 14.8 |
| industrial | 14.5 |
| adult | 14.2 |
| vehicle | 13.7 |
| factory | 13.5 |
| travel | 13.4 |
| three quarter length | 12.8 |
| inside | 12 |
| happy | 11.9 |
| equipment | 11.9 |
| city | 11.6 |
| person | 11.4 |
| urban | 11.4 |
| sitting | 11.2 |
| power | 10.9 |
| men | 10.3 |
| women | 10.3 |
| bullet train | 10.1 |
| transport | 10 |
| station | 9.7 |
| outdoors | 9.7 |
| indoors | 9.7 |
| technology | 9.6 |
| 20s | 9.2 |
| building | 8.9 |
| 20 24 years | 8.9 |
| device | 8.8 |
| standing | 8.7 |
| lifestyle | 8.7 |
| smile | 8.5 |
| portrait | 8.4 |
| modern | 8.4 |
| occupation | 8.2 |
| passenger train | 8.1 |
| turnstile | 8.1 |
| working | 8 |
| businessman | 7.9 |
| day | 7.8 |
| machinery | 7.8 |
| two people | 7.8 |
| production | 7.8 |
| airplane | 7.7 |
| emergency | 7.7 |
| outside | 7.7 |
| engine | 7.7 |
| automobile | 7.7 |
| worker | 7.4 |
| gate | 7.3 |
| room | 7.3 |
| team | 7.2 |
| public transport | 7.1 |
| seat | 7.1 |
| to | 7.1 |
| steel | 7.1 |

| ||

Google

created on 2022-01-09

| Tag | Confidence |
|---|---|
| Shorts | 93.8 |
| Style | 83.8 |
| Black-and-white | 83.3 |
| Font | 78.6 |
| Snapshot | 74.3 |
| Monochrome photography | 73.9 |
| Monochrome | 72.4 |
| Motor vehicle | 70.1 |
| Crew | 69.4 |
| Public transport | 68.4 |
| Boat | 65.6 |
| Photo caption | 59.1 |
| Room | 59 |
| Crowd | 58.8 |
| Team | 57.2 |
| T-shirt | 57.1 |
| Vintage clothing | 56.4 |
| Passenger | 52.9 |
| History | 52.3 |
| Street | 51.6 |

| ||

Microsoft

created on 2022-01-09

| Tag | Confidence |
|---|---|
| person | 99.5 |
| text | 99.1 |
| clothing | 94.2 |
| black and white | 87.3 |
| man | 85.6 |
| people | 78.9 |
| street | 72.1 |
| footwear | 64.7 |
| preparing | 54.3 |
| waste container | 52.5 |

| ||


Face analysis

Amazon

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 16-22 |
| Gender | Male, 76.2% |
| Calm | 96.4% |
| Sad | 2.6% |
| Surprised | 0.3% |
| Confused | 0.2% |
| Angry | 0.2% |
| Disgusted | 0.2% |
| Happy | 0.1% |
| Fear | 0% |

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 20-28 |
| Gender | Male, 81.9% |
| Calm | 95.1% |
| Angry | 2.7% |
| Sad | 0.6% |
| Surprised | 0.5% |
| Happy | 0.5% |
| Confused | 0.4% |
| Fear | 0.1% |
| Disgusted | 0.1% |

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 51-59 |
| Gender | Male, 99.8% |
| Calm | 100% |
| Surprised | 0% |
| Sad | 0% |
| Confused | 0% |
| Angry | 0% |
| Disgusted | 0% |
| Happy | 0% |
| Fear | 0% |

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 29-39 |
| Gender | Male, 72% |
| Calm | 98.3% |
| Surprised | 1.3% |
| Sad | 0.2% |
| Disgusted | 0.1% |
| Confused | 0.1% |
| Angry | 0% |
| Fear | 0% |
| Happy | 0% |

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 25-35 |
| Gender | Male, 99.6% |
| Calm | 65.3% |
| Sad | 28% |
| Disgusted | 1.7% |
| Surprised | 1.5% |
| Fear | 1.5% |
| Angry | 0.7% |
| Happy | 0.7% |
| Confused | 0.5% |

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 21-29 |
| Gender | Male, 82.4% |
| Calm | 72.9% |
| Sad | 24.4% |
| Confused | 0.8% |
| Surprised | 0.6% |
| Angry | 0.5% |
| Disgusted | 0.3% |
| Fear | 0.2% |
| Happy | 0.2% |

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 53-61 |
| Gender | Female, 51.9% |
| Calm | 99% |
| Happy | 0.6% |
| Surprised | 0.1% |
| Sad | 0.1% |
| Disgusted | 0.1% |
| Angry | 0% |
| Confused | 0% |
| Fear | 0% |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Possible |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Possible |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Possible |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Possible |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| streetview architecture | 95% |
| paintings art | 1.6% |
| interior objects | 1% |

| ||

Captions

Microsoft

created on 2022-01-09

| Caption | Confidence |
|---|---|
| a group of people standing in front of a crowd | 92.4% |
| a group of people standing in front of a crowd of people | 91.4% |
| a group of people standing around a plane | 76.2% |

| ||

Text analysis

Amazon

18109.

AB

use

18109.

AP

18109.

সA00X-YTउ1A8-WAMT8A

18109.

AP

সA00X-YTउ1A8-WAMT8A