Machine-Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 39-47 |
| Gender | Male, 99.3% |
| Calm | 91.7% |
| Surprised | 3.3% |
| Confused | 1.4% |
| Disgusted | 1.2% |
| Sad | 1.2% |
| Angry | 0.5% |
| Fear | 0.4% |
| Happy | 0.3% |

Feature analysis

Amazon

Clarifai

AWS Rekognition

| Feature | Confidence |
| --- | --- |
| Person | 99.8% |

Categories

Imagga

Created on 2022-01-09

| Category | Confidence |
| --- | --- |
| streetview architecture | 92.5% |
| paintings art | 6.7% |

Captions

Microsoft

Created by unknown on 2022-01-09

| Caption | Confidence |
| --- | --- |
| a group of people looking at a phone | 67.9% |
| a group of people looking at a cell phone | 60.3% |
| a group of people looking at the camera | 60.2% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-14

photograph of a family of four sitting at a table.

Salesforce

Created by general-english-image-caption-blip on 2025-05-03

a photograph of a group of people sitting at a table with drinks

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-11

This image appears to be a negative of a photograph showing a formal dining setting with people seated at a table. The table is set with glassware, including goblets and glasses, and ornate candlesticks are positioned prominently in the foreground. In the background, there is detailed architectural decor, including columns, intricate molding, and a fireplace or mantel. Heavy curtains are visible on the side of the room, contributing to an elegant ambiance. The black-and-white inversion of the image highlights the contours and textures of the environment.

Created by gpt-4o-2024-08-06 on 2025-06-11

The image depicts a group of people seated at a table within a luxurious or intricately decorated interior setting. The room has carved columns and drapery, suggesting an elegant atmosphere. The table is set with various glassware and what appear to be candlesticks. The individuals in the scene are dressed in formal attire, indicating it might be a celebratory or special occasion. A person standing behind the seated guests appears to be holding a drink, suggesting social interaction or a toast. The image is presented in a negative format, which inverts the colors.

Anthropic Claude

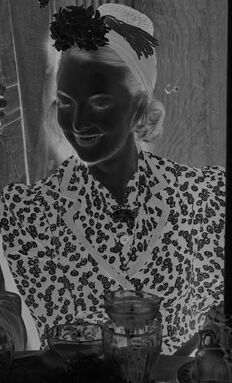

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-19

The image appears to be a black and white photograph depicting a group of people in an ornate, decorated interior space. There are four individuals visible - three men and one woman. The woman is wearing a patterned dress and has a flower in her hair. The men are dressed in suits and ties. The background features elaborate architectural details, including columns and decorative elements. The overall scene suggests a formal or celebratory event taking place in this grand, historic setting.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-19

This appears to be a vintage black and white negative photograph, likely from a formal dinner or social gathering. The image shows several people seated at what appears to be a dining table with glasses and place settings. There are tall black candles on the table, and the setting appears to be an elegant interior with decorative architectural details visible in the background, including ornate moldings and a radiator. The photograph has a distinctive negative effect where the tones are reversed, giving it an unusual appearance where light areas appear dark and dark areas appear light. The number "17780" is written at the top of the image, suggesting it may be from an archive or collection.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-09

The image is a black and white photograph of a group of people gathered around a table, likely in a restaurant or dining room. The scene appears to be from the 1930s or 1940s, based on the clothing and hairstyles of the individuals.

- Table Setting:
  - The table is set with several glasses, plates, and silverware.
  - There are two tall candles in candlesticks on the table, one on either side of the centerpiece.

- People:
  - There are six people visible in the photo, all dressed in formal attire.
  - The man standing behind the table is holding a drink in his hand.
  - The woman sitting next to him is wearing a floral dress and a hat.
  - The other four people are seated around the table, engaged in conversation.

- Background:
  - The background of the photo shows a brick wall with a radiator and a window.
  - There is a lamp hanging from the ceiling, and a curtain or drapes on the right side of the photo.

- Overall:
  - The atmosphere of the photo appears to be one of elegance and sophistication.
  - The people in the photo seem to be enjoying themselves, laughing and chatting over drinks and food.

The image captures a moment in time, frozen in black and white, and provides a glimpse into the past.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-09

The image is a black-and-white photograph of a group of people gathered around a table, with a man standing behind them. The scene appears to be set in a formal dining room or restaurant.

In the foreground, there are several individuals seated at the table, each with their own unique attire and accessories. One woman wears a floral dress and a hat adorned with flowers, while another man sports a bow tie and a suit jacket. A third person, partially obscured by the table, seems to be wearing a striped shirt.

Behind the seated group stands a man dressed in a suit, holding a glass in his hand. He appears to be engaged in conversation with the others at the table.

The background of the image features a brick wall, a radiator, and a window with curtains drawn. The overall atmosphere suggests a social gathering or celebration, possibly a dinner party or special occasion.

The image has a nostalgic quality to it, evoking a sense of elegance and sophistication from a bygone era. The formal attire and refined surroundings create a sense of luxury and refinement, while the warm lighting and gentle expressions of the individuals add a touch of warmth and conviviality to the scene.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-08

The black-and-white photo shows a group of people gathered in a room. The people are sitting around a table, and some are standing. The man in the middle is holding a glass of wine and looking at the woman on the right. The woman is smiling and looking at the man. The room has a wooden wall with a lamp mounted on it. The photo has a watermark with the text "17780" and "0880" on the left and right sides.

Created by amazon.nova-pro-v1:0 on 2025-06-08

The black-and-white image features a group of people, probably gathered for a party. The group consists of three men and three women. Two of the men are standing, while the third is sitting. The three women are sitting, and one of them is wearing a hat. In front of them is a table with several glasses, plates, and candles. The image has a watermark with the text "17780" and "1780" on the left side.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-04

Here's a description of the image:

Overall Impression:

The image appears to be a black and white negative of a social gathering or a dinner party. The scene seems to be indoors, likely in a dining room or a similar elegant setting. The style of the people and the decor suggest a vintage era.

People and Actions:

- Group: There are several people in the image, suggesting a social event. They are all dressed in formal attire appropriate for the time.

- Center: The main focal point appears to be around a table where people are seated. A man in a suit and bow tie is in the foreground, possibly the host. Next to him, a woman wearing a floral patterned blouse and a headband with a flower is smiling.

- Others: Several other individuals can be seen in the background. Some are engaged in conversation, while others are holding glasses, suggesting a relaxed atmosphere.

- Servant: One man is standing, holding a glass, and appears to be serving the others.

Setting and Details:

- Decor: The setting seems luxurious with architectural details such as columns, arches, and possibly decorative elements, suggesting the room is in a wealthy home or a high-end establishment. There are large candles on the table.

- Table: A dining table is set with glasses.

- Lighting: The lighting is subtle, which is common for this time period.

- Negative: The image is a negative, so the colors are inverted.

Overall, the photograph captures a moment of elegance and social interaction from a time gone by.

Created by gemini-2.0-flash on 2025-05-04

Here's a description of the image:

The image appears to be a black and white photograph of a group of people gathered around a table. The photo has an inverted color scheme, resembling a photographic negative.

Several people are visible in the frame. One person is standing behind the table holding a glass, possibly making a toast or serving drinks. A woman is seated at the table with a decorative headband. Another woman is seated, wearing a wide-brimmed hat. A man in a bow tie sits at the table. Tall, dark candles stand on the table, along with glasses and what appears to be a centerpiece.

The background shows architectural details such as columns and what appears to be a window with curtains. The lighting appears to be a mix of natural and artificial sources. The overall impression is a formal gathering or a social event. The number "17780" is printed on the top of the image and vertically on the left.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-02

The image appears to be a black-and-white photograph from the year 1950, as indicated by the number "1950" written on the top left corner. It depicts a group of people gathered in what seems to be a formal or celebratory setting.

In the foreground, there are three individuals seated at a table. The person on the left is a man wearing a suit with a bow tie, looking directly at the camera. The person in the middle is a woman wearing a patterned blouse and a headband with a decorative element. She is smiling and appears to be engaged in conversation with the person on the right, who is also a man wearing a suit and tie. He is holding a drink and seems to be in mid-conversation.

In the background, there are more people, including a man in a white shirt and tie and a woman wearing a hat. The setting includes a table with various items such as glasses, plates, and decorative elements. The room has a somewhat ornate design, with patterned walls and a radiator visible on the right side.

The overall atmosphere suggests a social gathering or party, with people dressed in formal attire and engaged in conversation.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-10

The image is a black-and-white photograph depicting a formal dining scene. The setting appears to be a well-decorated room with ornate architectural details, including columns and a patterned ceiling. The table is elegantly set with glasses, dinnerware, and candles in tall holders.

Seated at the table are several individuals dressed in formal attire. The men are wearing suits, and the women are wearing dresses and hats typical of mid-20th-century fashion. The woman on the left is wearing a hat with a floral design, and the woman on the right is wearing a patterned dress with a headpiece. There is also a man standing at the back of the table, holding a glass, and another man seated behind him, also dressed formally. The overall atmosphere suggests a sophisticated, perhaps celebratory, dining event.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-10

This black-and-white photograph appears to capture a formal dining or social gathering, likely from the mid-20th century. Several individuals are seated at a table adorned with tall black candles and various glassware, including wine glasses and water glasses. The people are dressed in elegant attire, with a man in the foreground wearing a bow tie and a suit, and a woman next to him in a patterned dress with a decorative headpiece. Another woman in the background is wearing a hat and a striped dress. A man is standing in the background holding a drink, possibly serving the guests. The setting includes a fireplace with ornate decorations, a hanging lamp, and curtains, suggesting an upscale and refined environment. The photograph has a number "17780" printed at the top and bottom left corner.