Machine Generated Data

Tags

Amazon

created on 2022-01-09

| Tag | Confidence (%) |
|---|---|
| Human | 99.2 |
| Crowd | 90.8 |
| People | 81 |
| Silhouette | 80.8 |
| Audience | 78.8 |
| Clothing | 69.4 |
| Apparel | 69.4 |
| Leisure Activities | 61.6 |
| Musician | 59.8 |
| Musical Instrument | 59.8 |
| Sitting | 58.9 |
| Suit | 58.6 |
| Coat | 58.6 |
| Overcoat | 58.6 |
| Furniture | 55.9 |
| Music Band | 55.5 |
| Stage | 55.4 |

Clarifai

created on 2023-10-25

Imagga

created on 2022-01-09

Google

created on 2022-01-09

| Tag | Confidence (%) |
|---|---|
| Font | 81.8 |
| Tree | 74.3 |
| Vintage clothing | 72.9 |
| Art | 71.3 |
| Event | 70.4 |
| Crew | 69.2 |
| Suit | 67.8 |
| Monochrome | 67.1 |
| Monochrome photography | 65.3 |
| Room | 65.1 |
| Illustration | 65 |
| History | 64.7 |
| Stock photography | 64.1 |
| Team | 63.6 |
| Visual arts | 59.6 |
| Photo caption | 56.4 |
| Painting | 54.9 |
| Paper product | 54 |
| Uniform | 53 |
| Rectangle | 51.4 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Face | Age | Gender | Calm | Sad | Happy | Confused | Disgusted | Surprised | Angry | Fear |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 34-42 | Male, 98.8% | 76.5% | 7.5% | 7.3% | 5.4% | 1.4% | 1.2% | 0.6% | 0.2% |
| 2 | 45-53 | Female, 60.7% | 83.2% | 1.4% | 2.5% | 0.7% | 1.3% | 8% | 0.7% | 2.2% |
| 3 | 35-43 | Male, 97.1% | 14.9% | 83.8% | 0.3% | 0.4% | 0.3% | 0.1% | 0.2% | 0.1% |
| 4 | 48-54 | Male, 93% | 9.3% | 28% | 35.3% | 16.4% | 5.3% | 1.5% | 2.3% | 2% |
| 5 | 24-34 | Female, 52.8% | 2.1% | 72.1% | 6.6% | 8.7% | 1.6% | 5.8% | 1.1% | 2.1% |
| 6 | 52-60 | Male, 93.3% | 100% | 0% | 0% | 0% | 0% | 0% | 0% | 0% |
| 7 | 22-30 | Male, 51.3% | 93.9% | 1.6% | 1.1% | 0.5% | 0.6% | 1.2% | 0.5% | 0.6% |
| 8 | 31-41 | Male, 53.1% | 77.7% | 3.9% | 1.1% | 1.5% | 5.2% | 6.7% | 2% | 1.8% |
| 9 | 28-38 | Female, 99.9% | 50.3% | 34.2% | 12.3% | 1.4% | 0.5% | 0.3% | 0.2% | 0.9% |

Google Vision

| Face | Surprise | Anger | Sorrow | Joy | Headwear | Blurred |
|---|---|---|---|---|---|---|
| 1 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 2 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 3 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 4 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 5 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 6 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 7 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Unlikely |
| 8 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Unlikely | Very unlikely |
| 9 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 10 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 11 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 12 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 13 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 14 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 15 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 16 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Unlikely | Very unlikely |
| 17 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 18 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 19 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 20 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |

Feature analysis

Amazon

AWS Rekognition

| Object | Detections | Confidence per detection |
|---|---|---|
| Person | 14 | 99.2%, 98.9%, 98.6%, 98.5%, 97.9%, 97.4%, 96.9%, 96.6%, 95.7%, 91.2%, 84.5%, 80.6%, 80.2%, 45.7% |
| Chair | 1 | 55.9% |

Clarifai

| Object | Detections | Confidence per detection |
|---|---|---|
| Footwear | 23 | 77.3%, 77.1%, 74%, 68.8%, 65.2%, 64%, 63.8%, 61.3%, 61.3%, 55.8%, 52.3%, 49.4%, 47%, 45.3%, 45.1%, 44.2%, 43.8%, 41.9%, 40.2%, 39.9%, 39.8%, 39%, 33.7% |
| Man | 12 | 71.2%, 64%, 63.6%, 61.6%, 54%, 51.3%, 50.6%, 48%, 45.6%, 45.2%, 35.1%, 33.9% |
| Clothing | 14 | 69%, 57.9%, 56.8%, 54.2%, 48.6%, 45.6%, 44.7%, 42.5%, 42.1%, 42%, 40.3%, 39%, 37.8%, 35.6% |
| Woman | 2 | 59%, 54.1% |
| Person | 1 | 38.4% |
| Human face | 3 | 38.3%, 38.2%, 34% |

Categories

Imagga

created on 2022-01-09

| Category | Confidence |
|---|---|
| streetview architecture | 34.9% |
| events parties | 25.2% |
| text visuals | 14.1% |
| people portraits | 9.2% |
| interior objects | 6.8% |
| food drinks | 2.6% |
| paintings art | 2% |
| cars vehicles | 1.4% |
| beaches seaside | 1.3% |
| nature landscape | 1.3% |
| pets animals | 1.2% |

| ||

Captions

Microsoft

created by unknown on 2022-01-09

| Caption | Confidence |
|---|---|
| a group of people posing for a photo | 93.4% |
| a group of people posing for the camera | 93.3% |
| a group of people posing for a picture | 93.2% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-11

photograph of a crowd of people dancing.

Salesforce

Created by general-english-image-caption-blip on 2025-05-20

a photograph of a group of people standing around a table

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-09

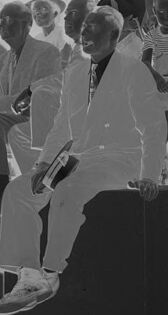

This is a black-and-white negative image featuring a group of people seated outdoors. The individuals appear to be dressed formally or semi-formally, wearing suits, dresses, and hats. They are sitting on chairs or on a structure, possibly raised seating or a bench, in what seems to be a park or wooded area. The trees and foliage in the background suggest a natural or outdoor environment. The presence of negative formatting (reversed light and dark tones) gives the image an inverted appearance. Handwritten markings and numbers, such as "17433," are visible above and below the image, and the margin includes text indicating "Safety—Kodak," which suggests it's part of the photographic film or plate.

Created by gpt-4o-2024-08-06 on 2025-06-09

The image is a black and white negative photograph showing a group of people sitting on a ledge or platform. The individuals are dressed in attire suggesting the period could be around the mid-20th century, as the clothing appears formal and typical of that era, with several women wearing dresses and hats, and men in suits. The backdrop includes trees, suggesting the gathering is outdoors, possibly in a park or garden setting. Due to the negative format, the colors are inverted, with dark areas appearing light and vice versa.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-14

The image appears to show a large group of people gathered outdoors, seated on benches or chairs. The individuals are dressed in a variety of clothing styles, suggesting this may be some kind of public event or gathering. The image is in black and white, giving it a historical feel. While there are many people visible, I will not attempt to identify or name any of the individuals shown, as per the instructions provided.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-14

This appears to be a black and white historical photograph from 1943, showing a large group of people seated on what looks like bleachers or tiered seating. The group is dressed in the fashion of the 1940s, with many wearing light-colored summer clothing. The image has a documentary quality to it, with the people arranged in rows and appearing to be part of some kind of organized gathering or event. The photograph has some film processing marks or numbers visible at the edges, suggesting it's from an archive or official documentation. The lighting in the photo creates an interesting contrast between light and shadow, giving the image depth and dimension.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-05

The image is a black-and-white photograph of a group of people sitting on a stage, with a tree visible in the background.

- The photograph is taken from a low angle, looking up at the stage.

- The stage is elevated and has a dark-colored surface.

- There are approximately 30 people sitting on the stage, all facing the same direction.

- They are dressed in formal attire, with men wearing suits and women wearing dresses.

- Some of the individuals are holding objects, such as books or papers.

- The background of the image shows a tree with branches and leaves.

- The sky is not visible, but it appears to be a sunny day.

- The overall atmosphere of the image suggests a formal event or gathering, possibly a conference or ceremony.

The image provides a glimpse into a formal event or gathering, with a group of people seated on a stage, surrounded by a natural setting.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-05

The image is a black-and-white photograph of a group of people sitting on a raised platform, with trees visible in the background. The people are dressed in formal attire, suggesting that they may be attending an event or gathering. The overall atmosphere appears to be one of respect and solemnity, as the individuals are seated and facing forward, with some holding their hats or hands in a gesture of reverence. The presence of trees in the background adds a sense of natural beauty and tranquility to the scene.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-03

The image shows a group of people gathered in an outdoor setting. They are sitting on a stage, and some are standing around it. The people are dressed in a variety of clothing, including hats, dresses, and suits. The image is in black and white, and there is a watermark with the number "17433" in the top left corner. The people in the image appear to be engaged in a conversation or discussion, with some of them gesturing and pointing while others listen attentively.

Created by amazon.nova-pro-v1:0 on 2025-06-03

The black-and-white photo features a group of people sitting on a platform, possibly for a special occasion. Some of them are wearing hats, while others are wearing shoes. A man is sitting on the edge of the platform, holding a book. A woman in front of him is holding a baby. Behind them are trees, and a person is standing on the left side. The image has a watermark with the number "17433."

Google Gemini

Created by gemini-2.0-flash on 2025-05-01

Certainly! Here's a description of the image:

The image is a black and white photograph of a group of people sitting or standing on an elevated platform or low wall. The group appears to be a mix of men, women, and children, suggesting they could be attending an event, gathering, or public performance.

The background shows trees, indicating an outdoor setting. The people are dressed in what appear to be period-typical clothing, suggesting the photo might be from the mid-20th century.

The photographic technique appears to be older, possibly from the 1940s or 1950s. The image is marked with the number "17433" at both the top and bottom and has handwritten text along the right edge.

Created by gemini-2.0-flash-lite on 2025-05-01

Here's a description of the image:

Overall Impression:

The image is a medium shot, likely a negative, depicting a large group of people sitting and standing, possibly at an outdoor event. The atmosphere is slightly obscured, as if the photo was taken in front of a foggy forest background.

People & Composition:

- Seated Group: A significant portion of the image is taken up by people sitting on a low, dark-colored structure or platform. The people vary in age, and many of them appear to be wearing clothing from the mid-20th century era.

- Standing Group: In the background, more people are standing, some appearing to be looking at the event.

- Arrangement: The group is not perfectly organized, suggesting a more casual gathering.

Details:

- Clothing: Men are mostly in suits and jackets, and women are in dresses, hats, and skirts.

- Setting: The background has trees and foliage that are lightly focused, implying an outdoor environment such as a park or garden.

- Light & Shadow: The light is relatively consistent, but the overall image is reversed due to being a negative.

Additional elements:

- The numbers "17433." are printed on the top and bottom edges, likely a reference for archival or filing purposes.

- The text "KUDVA" is printed vertically on the right side of the image.

Overall:

The photo captures a candid moment of a gathering, likely a community event or celebration from the mid-20th century, showcasing a scene of people in various positions.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-02

The black-and-white photograph shows a group of people seated outdoors, possibly at a public event or gathering. The setting appears to be a park or an open area with trees in the background. The individuals are arranged in two rows, with the front row seated on a platform and the back row standing behind them.

The attendees are dressed in a variety of clothing styles, suggesting a mix of formal and casual wear. Some people are wearing suits and dresses, while others are in more casual attire. The group appears to be diverse in terms of age and possibly ethnicity. Many of the individuals are looking towards the camera, and some are smiling, indicating a sense of camaraderie or shared purpose.

The photograph has a number "17433" printed at the top and bottom, which might be a catalog or reference number. The image has a vintage feel, suggesting it could be from an earlier era, possibly mid-20th century. The overall atmosphere of the image conveys a sense of community and togetherness.

Qwen

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-04

This black-and-white photograph captures a group of people seated and standing on a platform outdoors. The individuals are dressed in a mix of formal and casual attire, with some wearing hats and others in suits or dresses. The setting appears to be a public event or gathering, possibly in a park or open space, as trees are visible in the background. The image has a vintage feel, suggesting it may have been taken in the mid-20th century. The number "17433" is stamped in the top left and bottom right corners of the photograph.

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-04

This is a black and white photograph depicting a group of people seated outdoors on what appears to be a raised platform or stage. The setting is shaded, with trees visible in the background, suggesting the event is taking place in a park or a similar public space. The people are dressed in formal and semi-formal attire, with both men and women wearing hats, suits, dresses, and shoes typical of the mid-20th century. They appear to be engaged in a gathering or event, possibly a concert, a talk, or a community meeting, given their attentive postures and the arrangement of the seating. The overall atmosphere is one of social engagement and community gathering.

Text analysis

Amazon